Title: Deep Architectures for Visual Reasoning, Multimodal Learning, and Decision-Making

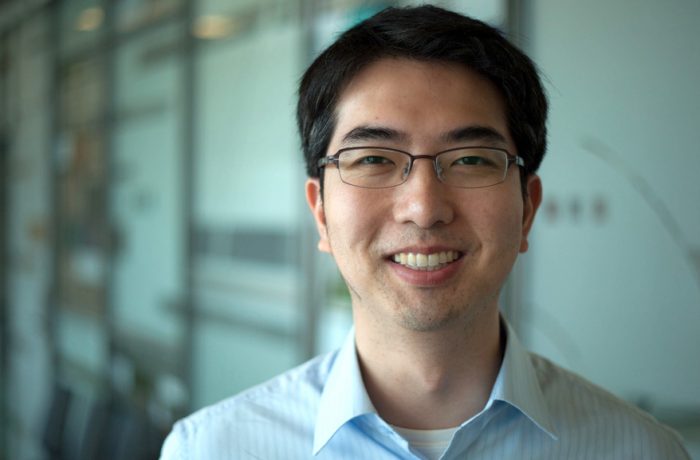

Speaker: Honglak Lee

Abstract: In recent years, deep learning has emerged as a powerful method for learning feature representations from complex input data, and it has been highly successful in computer vision, speech recognition, and language modeling. Many deep learning algorithms focus on a discriminative task and extract only task-relevant features that are invariant to other factors. However, complex sensory data often arises from intricate interactions among underlying factors of variation (for example, pose, morphology, and viewpoint for 3D object images). In this work, we tackle the problem of learning deep representations that disentangle these underlying factors of variation and allow for complex visual reasoning and inference. We present several successful deep architectures and learning methods for supervised and weakly supervised settings. I will also talk about visual analogy-making with disentangled representations, as well as a connection between disentangling and unsupervised learning.

In the second part of the talk, I will describe my work on learning deep representations from multiple heterogeneous input modalities. Specifically, I will talk about multimodal learning via conditional prediction that explicitly encourages cross-modal associations. This framework provides a theoretical guarantee about learning a joint distribution and explains recent progress in deep architectures that interface vision and language, such as caption generation and conditional image synthesis. I will also describe related work on learning joint embeddings of images and text for fine-grained recognition and zero-shot learning.

Finally, I will describe my work on combining deep learning and reinforcement learning. Specifically, I will talk about how learning a predictive generative model from video data can benefit reinforcement learning by enabling better exploration. I will also talk about a new memory-based architecture that aids sequential decision-making in first-person-view and active-perception settings.

Bio: Honglak Lee is an Associate Professor of Computer Science and Engineering at the University of Michigan, Ann Arbor. He received his Ph.D. from the Computer Science Department at Stanford University in 2010, advised by Prof. Andrew Ng. His research focuses on deep learning and representation learning, spanning unsupervised and semi-supervised learning, supervised learning, transfer learning, structured prediction, graphical models, optimization, and reinforcement learning. His methods have been successfully applied to computer vision and other perception problems. He received best paper awards at ICML 2009 and CEAS 2005. He has served as a guest editor of the IEEE TPAMI Special Issue on Learning Deep Architectures and as an editorial board member of Neural Networks, and he is currently an associate editor of IEEE TPAMI. He has also served as an area chair for ICML, NIPS, ICLR, ICCV, CVPR, ECCV, AAAI, and IJCAI. He received the Google Faculty Research Award (2011) and the NSF CAREER Award (2015), was selected as one of AI’s 10 to Watch by IEEE Intelligent Systems (2013), and was named a research fellow by the Alfred P. Sloan Foundation (2016).