Introduction

Publication

- Alexandre Robicquet, Alexandre Alahi, Amir Abbas Sadeghian, Bryan Anenberg, Eli Wu, Silvio Savarese. Forecasting Social Navigation in Crowded Complex Scenes

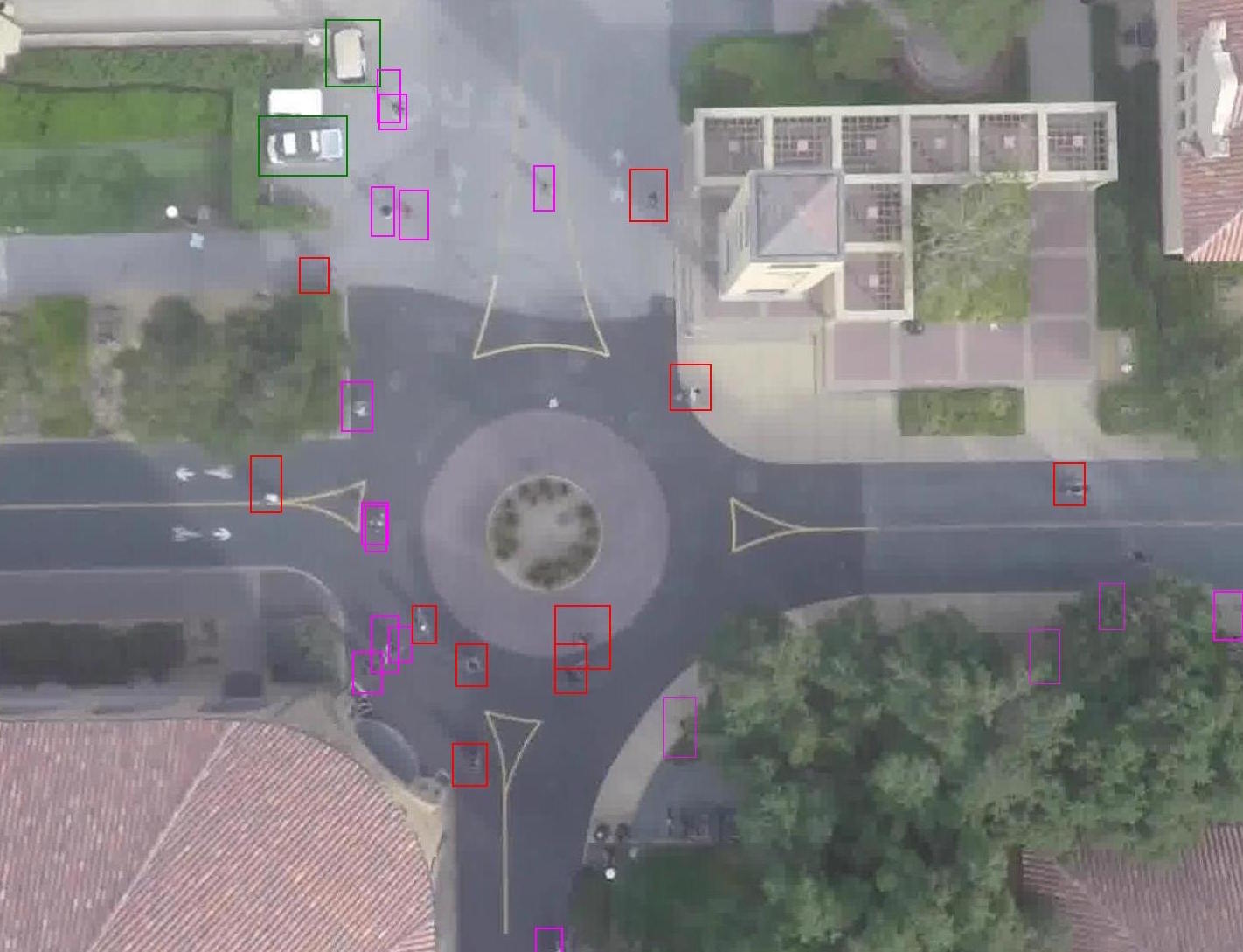

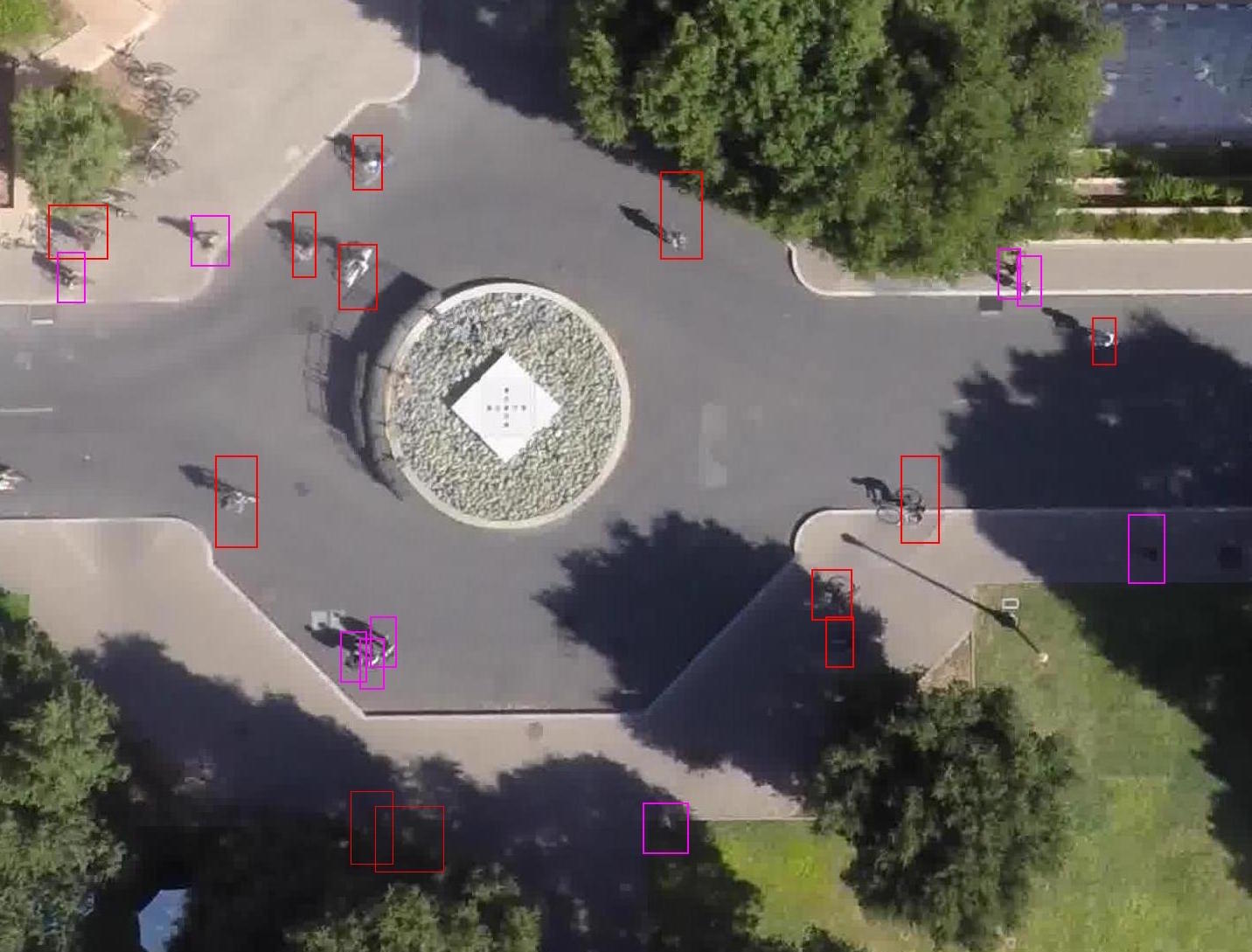

Dataset

Statistics

The dataset consists of eight unique scenes. The table below reports the number of videos in each scene and the percentage of each agent type in that scene.

| Scene | Videos | Bicyclist (%) | Pedestrian (%) | Skateboarder (%) | Cart (%) | Car (%) | Bus (%) |
|---|---|---|---|---|---|---|---|
| gates | 9 | 51.94 | 43.36 | 2.55 | 0.29 | 1.08 | 0.78 |
| little | 4 | 56.04 | 42.46 | 0.67 | 0 | 0.17 | 0.67 |
| nexus | 12 | 4.22 | 64.02 | 0.60 | 0.40 | 29.51 | 1.25 |
| coupa | 4 | 18.89 | 80.61 | 0.17 | 0.17 | 0.17 | 0 |
| bookstore | 7 | 32.89 | 63.94 | 1.63 | 0.34 | 0.83 | 0.37 |
| deathCircle | 5 | 56.30 | 33.13 | 2.33 | 3.10 | 4.71 | 0.42 |
| quad | 4 | 12.50 | 87.50 | 0 | 0 | 0 | 0 |
| hyang | 15 | 27.68 | 70.01 | 1.29 | 0.43 | 0.50 | 0.09 |
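For quick sanity checks, the per-scene statistics can be transcribed into a small Python structure. This is a minimal sketch that assumes only the numbers in the table; the names `SCENES`, `total_videos`, `dominant_agent` and `rows_off_100` are hypothetical helpers for illustration, not part of any released dataset toolkit.

```python
# Hypothetical transcription of the per-scene statistics table.
# Each entry: (number of videos, percentage of each agent type in AGENTS order).
AGENTS = ("Bicyclist", "Pedestrian", "Skateboarder", "Cart", "Car", "Bus")

SCENES = {
    "gates":       (9,  (51.94, 43.36, 2.55, 0.29, 1.08, 0.78)),
    "little":      (4,  (56.04, 42.46, 0.67, 0.0, 0.17, 0.67)),
    "nexus":       (12, (4.22, 64.02, 0.60, 0.40, 29.51, 1.25)),
    "coupa":       (4,  (18.89, 80.61, 0.17, 0.17, 0.17, 0.0)),
    "bookstore":   (7,  (32.89, 63.94, 1.63, 0.34, 0.83, 0.37)),
    "deathCircle": (5,  (56.30, 33.13, 2.33, 3.10, 4.71, 0.42)),
    "quad":        (4,  (12.50, 87.50, 0.0, 0.0, 0.0, 0.0)),
    "hyang":       (15, (27.68, 70.01, 1.29, 0.43, 0.50, 0.09)),
}


def total_videos(scenes):
    """Sum the video counts over all scenes."""
    return sum(videos for videos, _ in scenes.values())


def dominant_agent(scenes, name):
    """Return the agent type with the largest share in the given scene."""
    _, shares = scenes[name]
    return max(zip(AGENTS, shares), key=lambda pair: pair[1])[0]


def rows_off_100(scenes, tol=0.05):
    """List scenes whose percentages do not sum to 100 within a rounding tolerance."""
    return [name for name, (_, shares) in scenes.items()
            if abs(sum(shares) - 100.0) > tol]


if __name__ == "__main__":
    print(total_videos(SCENES))
    for scene in SCENES:
        print(scene, dominant_agent(SCENES, scene))
    print(rows_off_100(SCENES))
```

Note that the table gives only per-scene percentages, not per-scene agent counts, so averaging a column across scenes (weighted by videos or not) would not yield the dataset-wide share of that agent type.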

Annotation samples

Contact: anenberg at stanford dot edu

Last update: 12/19/2015