Stephen Gould, Joakim Arfvidsson, Adrian Kaehler, Benjamin Sapp, Marius Meissner, Gary Bradski, Paul Baumstarck, Sukwon Chung and Andrew Y. Ng

abstract

Human object recognition in a physical 3-d environment is still far superior to that of any robotic vision system. We believe that one reason (out of many) for this, one that has not heretofore been significantly exploited in the artificial vision literature, is that humans use a fovea to fixate on or near an object, thus obtaining a very high-resolution image of the object and rendering it easy to recognize. In this paper, we present a novel method for identifying and tracking objects in multi-resolution digital video of partially cluttered environments. Our method is motivated by biological vision systems and uses a learned "attentive" interest map on a low-resolution data stream to direct a high-resolution "fovea." Objects that are recognized in the fovea can then be tracked using peripheral vision. Because object recognition is run only on a small foveal image, our system achieves real-time object recognition and tracking performance well beyond that of simpler systems.
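The abstract describes using a low-resolution interest map to decide where to point the high-resolution fovea. As a minimal sketch, assuming the interest map is a 2-D grid of scores and that the fovea is simply placed over the fixed-size window with the greatest total interest (the paper's actual fixation rule may weigh other factors), a summed-area table turns each candidate window's score into a constant-time lookup. The names `best_fovea_window`, `interest`, `fovea_h` and `fovea_w` are hypothetical and do not come from the paper.

```python
from typing import List, Tuple


def summed_area_table(grid: List[List[float]]) -> List[List[float]]:
    """Return S where S[r][c] is the sum of grid over rows [0, r) and cols [0, c)."""
    rows, cols = len(grid), len(grid[0])
    s = [[0.0] * (cols + 1) for _ in range(rows + 1)]  # one row/column of zero padding
    for r in range(rows):
        for c in range(cols):
            s[r + 1][c + 1] = grid[r][c] + s[r][c + 1] + s[r + 1][c] - s[r][c]
    return s


def best_fovea_window(
    interest: List[List[float]], fovea_h: int, fovea_w: int
) -> Tuple[int, int, float]:
    """Hypothetical helper: (row, col, score) of the top-left corner of the
    fovea_h x fovea_w window with the largest total interest."""
    rows, cols = len(interest), len(interest[0])
    if not (0 < fovea_h <= rows and 0 < fovea_w <= cols):
        raise ValueError("fovea must fit inside the interest map")
    s = summed_area_table(interest)
    best = (0, 0, float("-inf"))
    for r in range(rows - fovea_h + 1):
        for c in range(cols - fovea_w + 1):
            # Inclusion-exclusion over the padded table gives the window sum in O(1).
            score = (s[r + fovea_h][c + fovea_w] - s[r][c + fovea_w]
                     - s[r + fovea_h][c] + s[r][c])
            if score > best[2]:
                best = (r, c, score)
    return best
```

For example, on a 4 x 4 map whose interest is concentrated in rows 1-2 and columns 2-3, a 2 x 2 fovea is placed with its top-left corner at row 1, column 2. Only this small window would then need to be captured at high resolution and passed to the object classifiers.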

demonstrations

The following videos demonstrate the experiments outlined in the paper "Peripheral-Foveal Vision for Real-time Object Recognition and Tracking in Video."

The videos show four panes organized as follows:

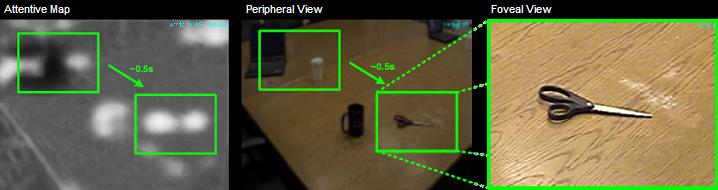

| Attentive Map | Peripheral View |
|---|---|
| Foveal View | Results |

In the results pane, red boxes represent ground truth and green boxes represent objects detected and tracked (including both true and false positives). The rectangle in the peripheral view pane shows where the fovea is directed. Its border is green during frames in which objects are tracked and changes color when the classifiers are run.

- Foveal gaze fixed to the center of view (avi | wmv)
- Linearly scanning over the scene from top-left to bottom-right (avi | wmv)
- Randomly moving the fovea around the scene (avi | wmv)
- Attention driven fovea (our method) (avi | wmv)
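The four demonstrations compare different ways of choosing where the fovea looks from frame to frame. A minimal sketch of those four policies follows, assuming the fovea is an `fh` x `fw` window placed by its top-left corner inside a `rows` x `cols` frame. All names here are hypothetical, and in the attention-driven case the per-position scores are assumed to be supplied by a learned interest map rather than computed here.

```python
import random
from typing import Callable, Dict, List, Tuple

Pos = Tuple[int, int]          # (row, col) of the fovea's top-left corner
Policy = Callable[[int], Pos]  # maps a frame index t to a fovea position


def fixed_center(rows: int, cols: int, fh: int, fw: int) -> Policy:
    """Always look at the middle of the frame."""
    center = ((rows - fh) // 2, (cols - fw) // 2)
    return lambda t: center


def linear_scan(rows: int, cols: int, fh: int, fw: int, step: int) -> Policy:
    """Visit a grid of positions in raster order (top-left first), then wrap around."""
    positions: List[Pos] = [
        (r, c)
        for r in range(0, rows - fh + 1, step)
        for c in range(0, cols - fw + 1, step)
    ]
    if not positions:
        raise ValueError("fovea does not fit inside the frame")
    return lambda t: positions[t % len(positions)]


def random_fovea(rows: int, cols: int, fh: int, fw: int, seed: int = 0) -> Policy:
    """Pick a uniformly random in-bounds position every frame."""
    rng = random.Random(seed)
    return lambda t: (rng.randint(0, rows - fh), rng.randint(0, cols - fw))


def attention_driven(scores_at: Callable[[int], Dict[Pos, float]]) -> Policy:
    """Look wherever the externally supplied interest scores for frame t are highest."""
    return lambda t: max(scores_at(t).items(), key=lambda kv: kv[1])[0]
```

Keeping every policy behind the same `Policy` signature makes it straightforward to swap strategies while holding the rest of a recognition-and-tracking loop fixed, which is the kind of comparison the four videos illustrate.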

View the poster from our NIPS 2006 conference demonstration.

papers

- Peripheral-Foveal Vision for Real-time Object Recognition and Tracking in Video, Stephen Gould, Joakim Arfvidsson, Adrian Kaehler, Benjamin Sapp, Marius Meissner, Gary Bradski, Paul Baumstarck, Sukwon Chung and Andrew Y. Ng. In Proceedings of the Twentieth International Joint Conference on Artificial Intelligence (IJCAI-07), 2007. [pdf | ps]