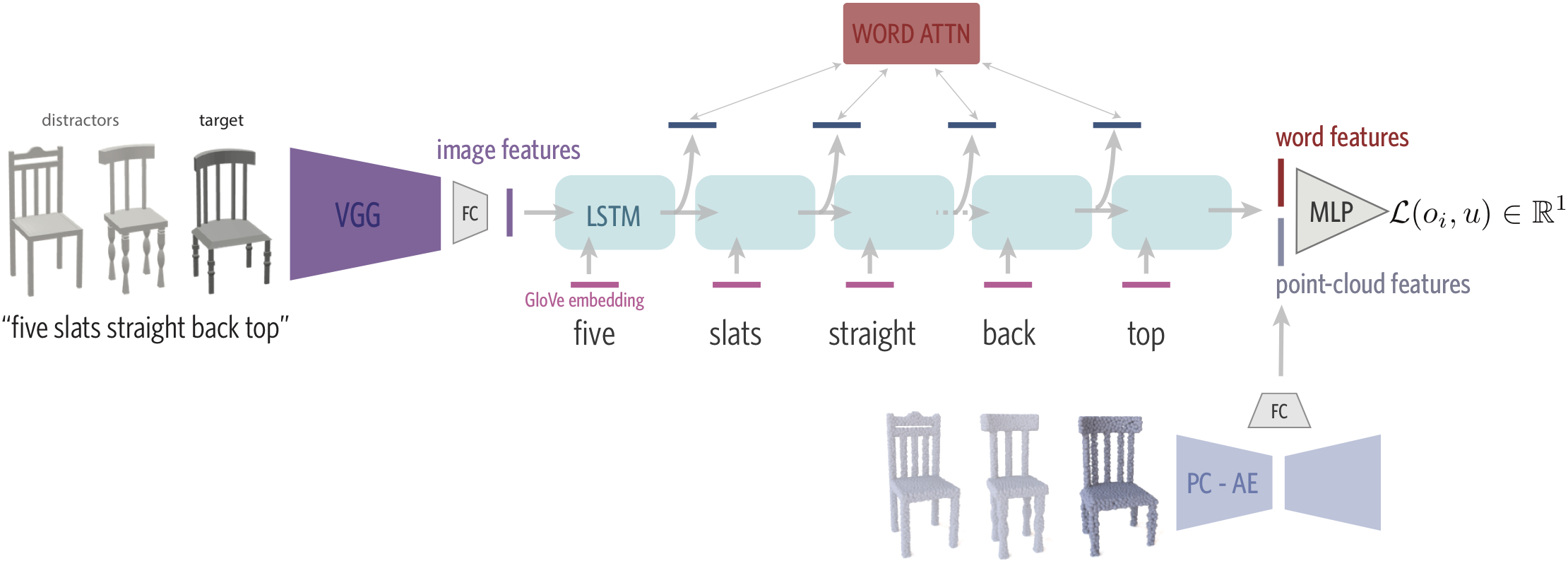

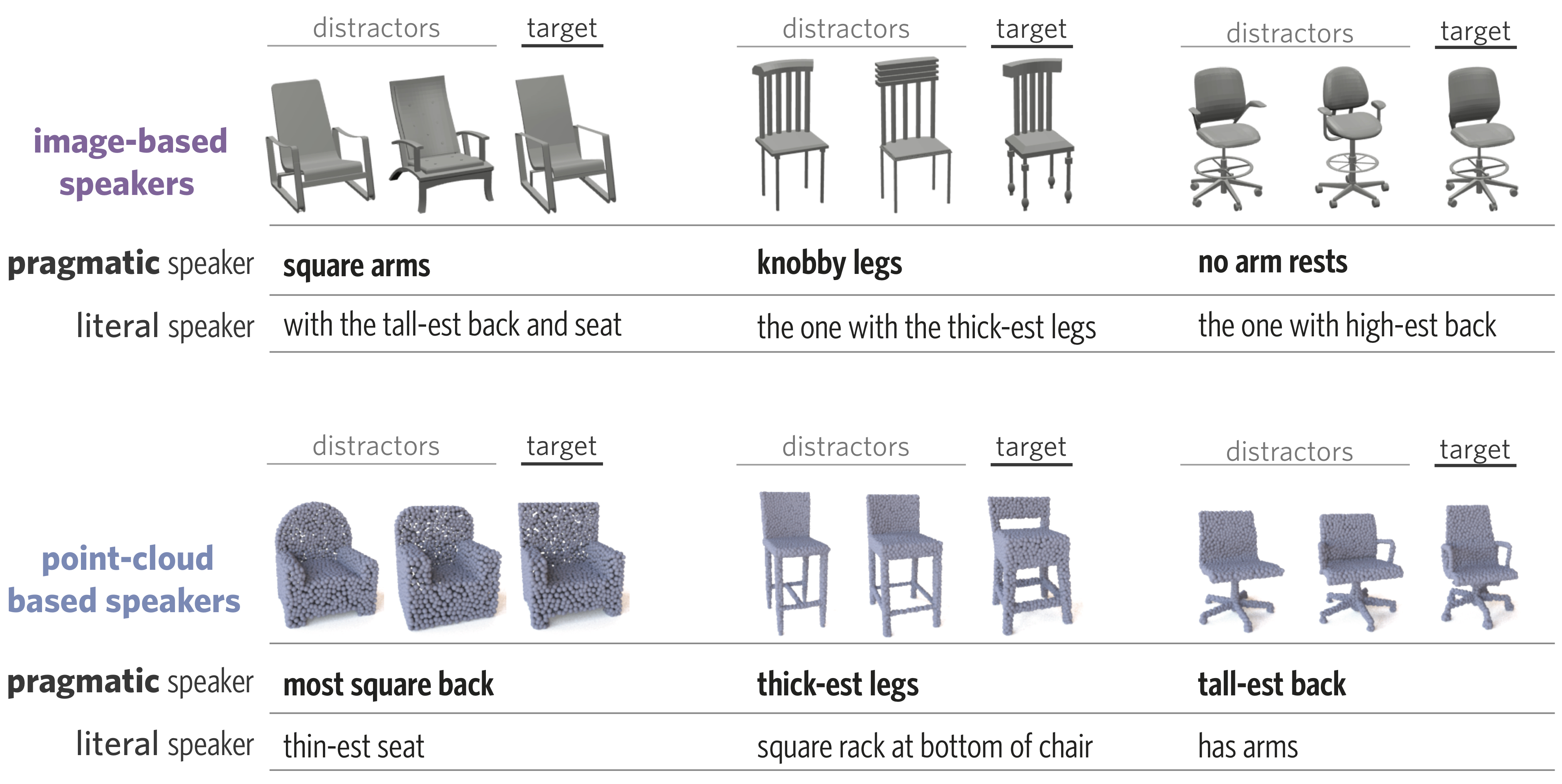

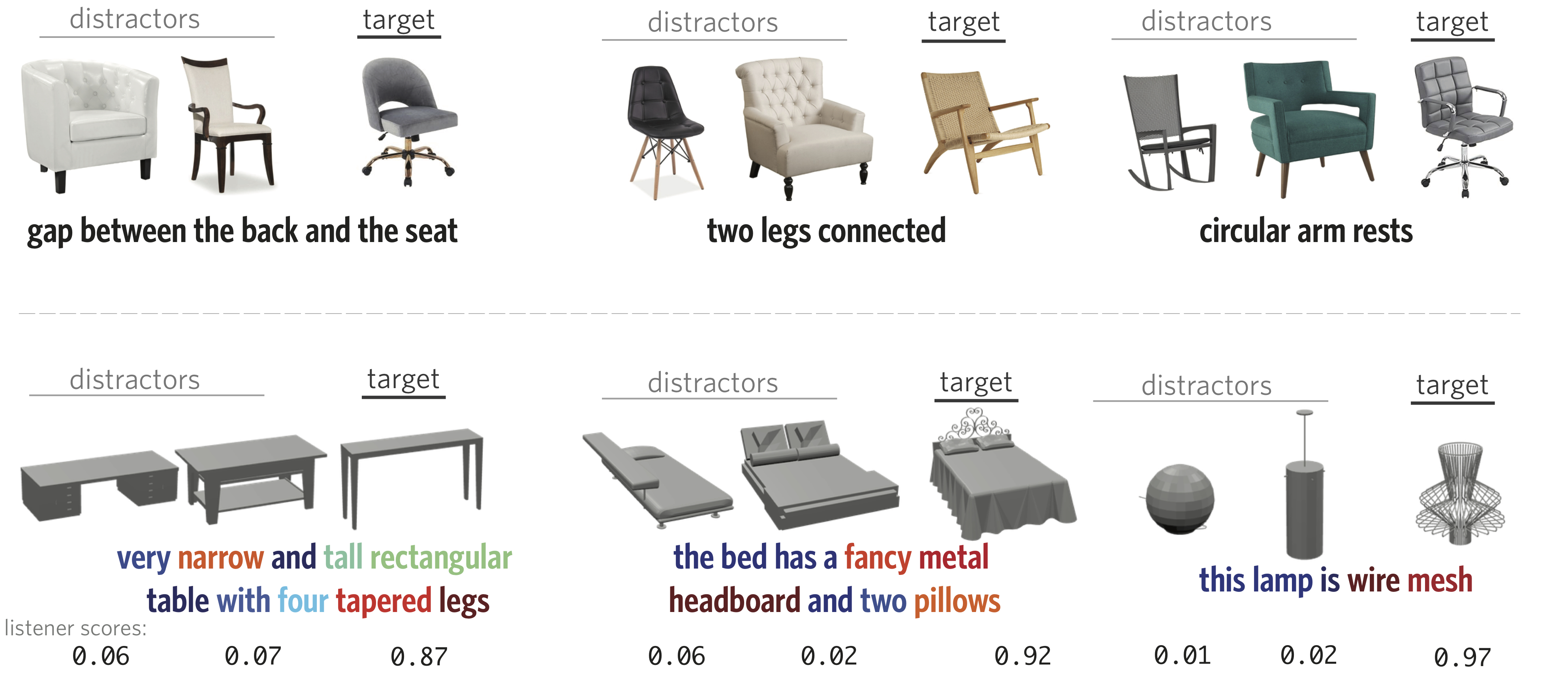

People understand visual objects in terms of parts and their relations. Language for referring to objects can reflect this structure, allowing us to indicate fine-grained shape differences. In this work we focus on grounding referential language in the shape of common objects. We first build a large-scale, carefully controlled dataset of human utterances, each of which refers to a 2D rendering of a 3D CAD model within a set of shape-wise similar alternatives. Using this dataset, we develop neural language understanding and production models that vary in their grounding (pure 3D forms via point-clouds vs. rendered 2D images), the degree of pragmatic reasoning captured (e.g. speakers that reason about a listener or not), and the neural architecture (e.g. with or without attention). We find models that perform well with both synthetic and human partners, and with held-out utterances and objects. We also find that these models have surprisingly strong generalization capacity to novel object classes (e.g. transfer from training on chairs to testing on lamps), as well as to real images drawn from furniture catalogs. Lesion studies suggest that the neural listeners depend heavily on part-related words and associate these words correctly with visual parts of objects (without any explicit training on object parts), and that transfer to novel classes is most successful when known part-words are available. This work illustrates a practical approach to language grounding, and provides a case study in the relationship between object shape and linguistic structure when it comes to object differentiation.
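
The contrast between speakers that do and do not reason about a listener can be illustrated with a toy sketch. This is not the neural models described above: `toy_listener`, `pragmatic_rerank`, the mixing weight `alpha`, the part sets, and the literal log-probabilities are all hypothetical stand-ins chosen for illustration. The sketch assumes one common way to add pragmatic reasoning, namely reranking a literal speaker's candidate utterances by how likely a listener is to pick the target from them.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def toy_listener(utterance: str, objects: Sequence[Dict[str, Set[str]]]) -> List[float]:
    """Hypothetical listener: score each object by how many utterance
    words name one of its parts, then normalize over the context."""
    words = set(utterance.lower().split())
    scores = [float(len(words & obj["parts"])) for obj in objects]
    return softmax(scores)


def pragmatic_rerank(
    candidates: Sequence[Tuple[str, float]],
    objects: Sequence[Dict[str, Set[str]]],
    target_idx: int,
    alpha: float = 0.5,
) -> str:
    """Pick the candidate that best trades off literal fluency against
    listener accuracy. `alpha` (an assumed parameter) weights the
    literal speaker's log-probability."""
    def score(candidate: Tuple[str, float]) -> float:
        utterance, literal_log_prob = candidate
        p_target = toy_listener(utterance, objects)[target_idx]
        return alpha * literal_log_prob + (1 - alpha) * math.log(p_target)

    return max(candidates, key=score)[0]


# Three shape-wise similar chairs; the target (index 0) is the only one with arms.
objects = [
    {"parts": {"arms", "legs"}},
    {"parts": {"legs"}},
    {"parts": {"legs", "wheels"}},
]

# (utterance, made-up literal log-probability) pairs from a literal speaker.
candidates = [("the one with legs", -1.0), ("the one with arms", -1.2)]

literal_choice = max(candidates, key=lambda c: c[1])[0]
pragmatic_choice = pragmatic_rerank(candidates, objects, target_idx=0)
```

In this toy context the literal speaker prefers its most probable utterance even though it describes all three objects equally well, while the reranked speaker prefers the utterance that mentions the distinguishing part.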

Figure 1. Listening with 3D Point-Clouds & 2D Images. Our listener uses state-of-the-art neural architectures for image-based and point-cloud-based processing to 'mix' geometry with language.

Figure 2. Pragmatic vs. Literal Neural Speakers. We develop speakers that reason about a target object with or without the introspection of a neural listener.

Figure 3. Zero-Shot Transfer Learning. Our models can produce referential language for real-world data (top row), and comprehend human language for novel object categories (bottom row), without any training data from these domains.

The authors wish to acknowledge the support of a Sony Stanford Graduate Fellowship, NSF grant CHS-1528025, a Vannevar Bush Faculty Fellowship, and gifts from Autodesk and Amazon Web Services for Machine Learning Research.