Bilingual Word Embeddings for Phrase-Based Machine Translation

Will Y. Zou, Richard Socher, Daniel Cer and Christopher D. Manning

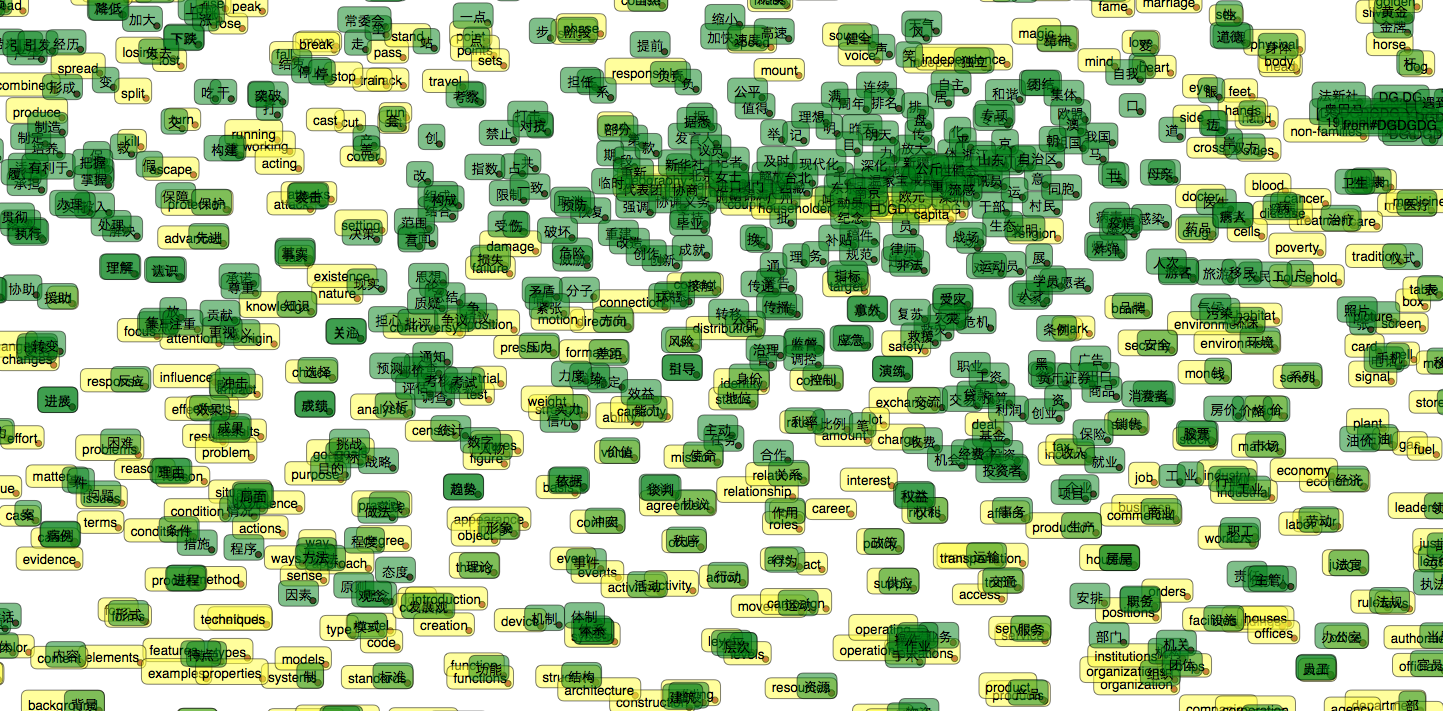

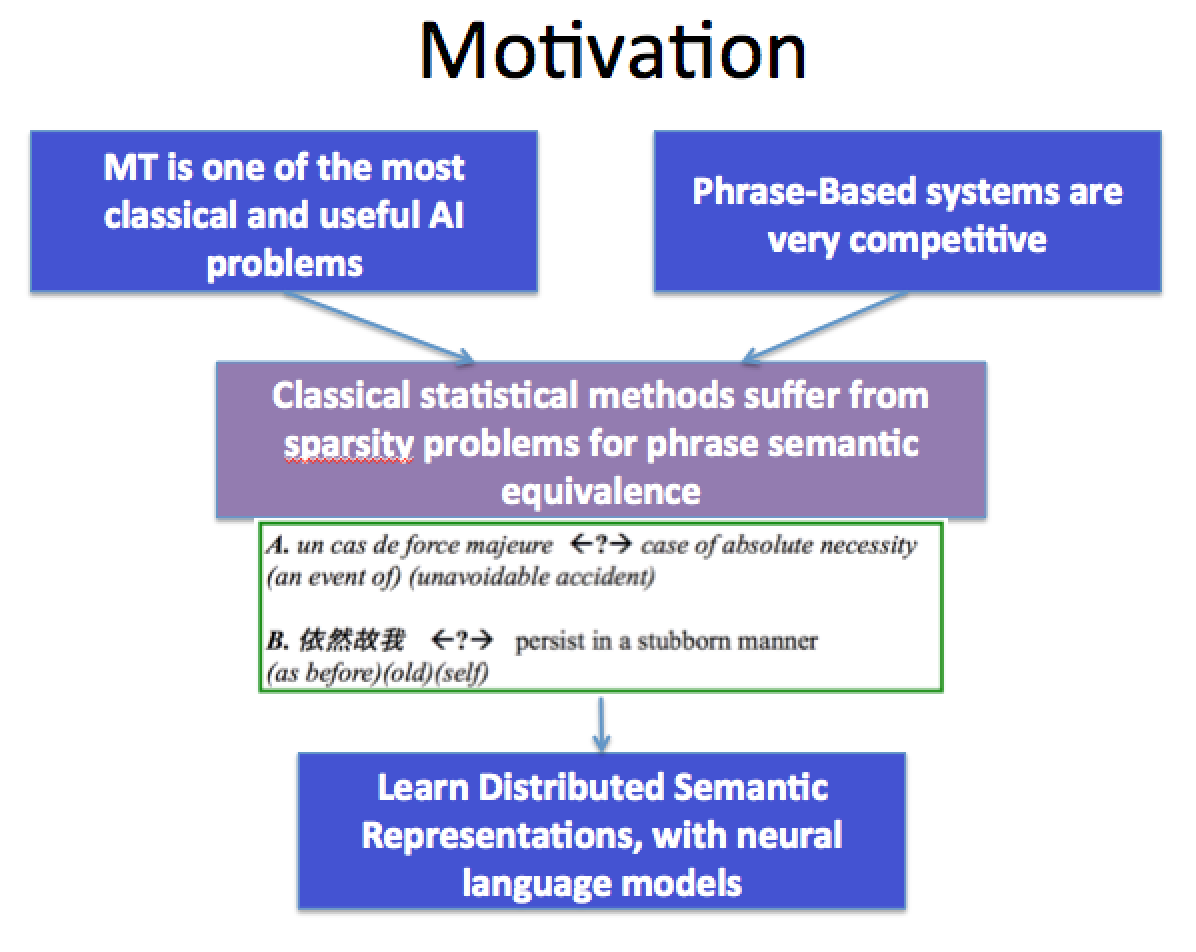

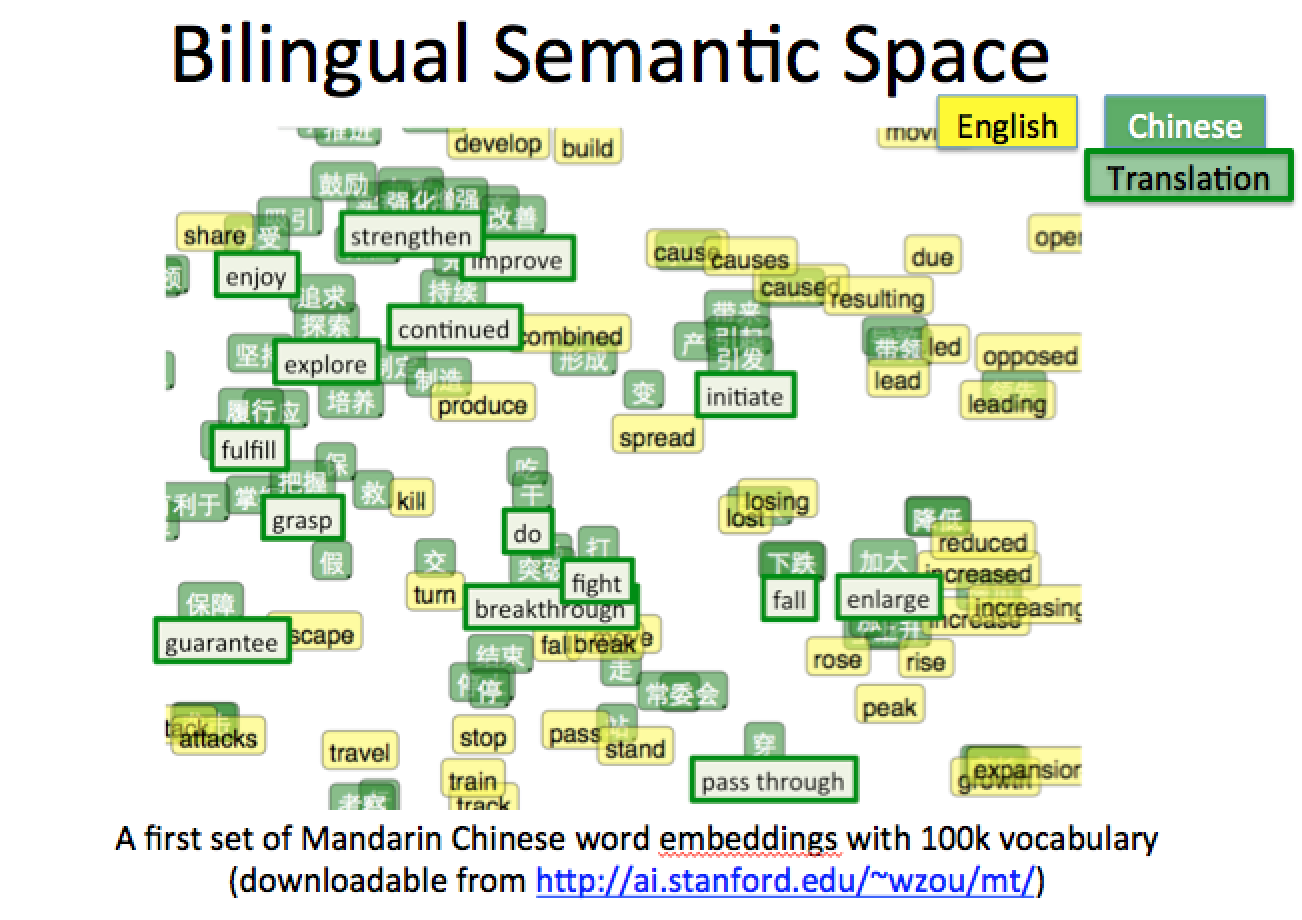

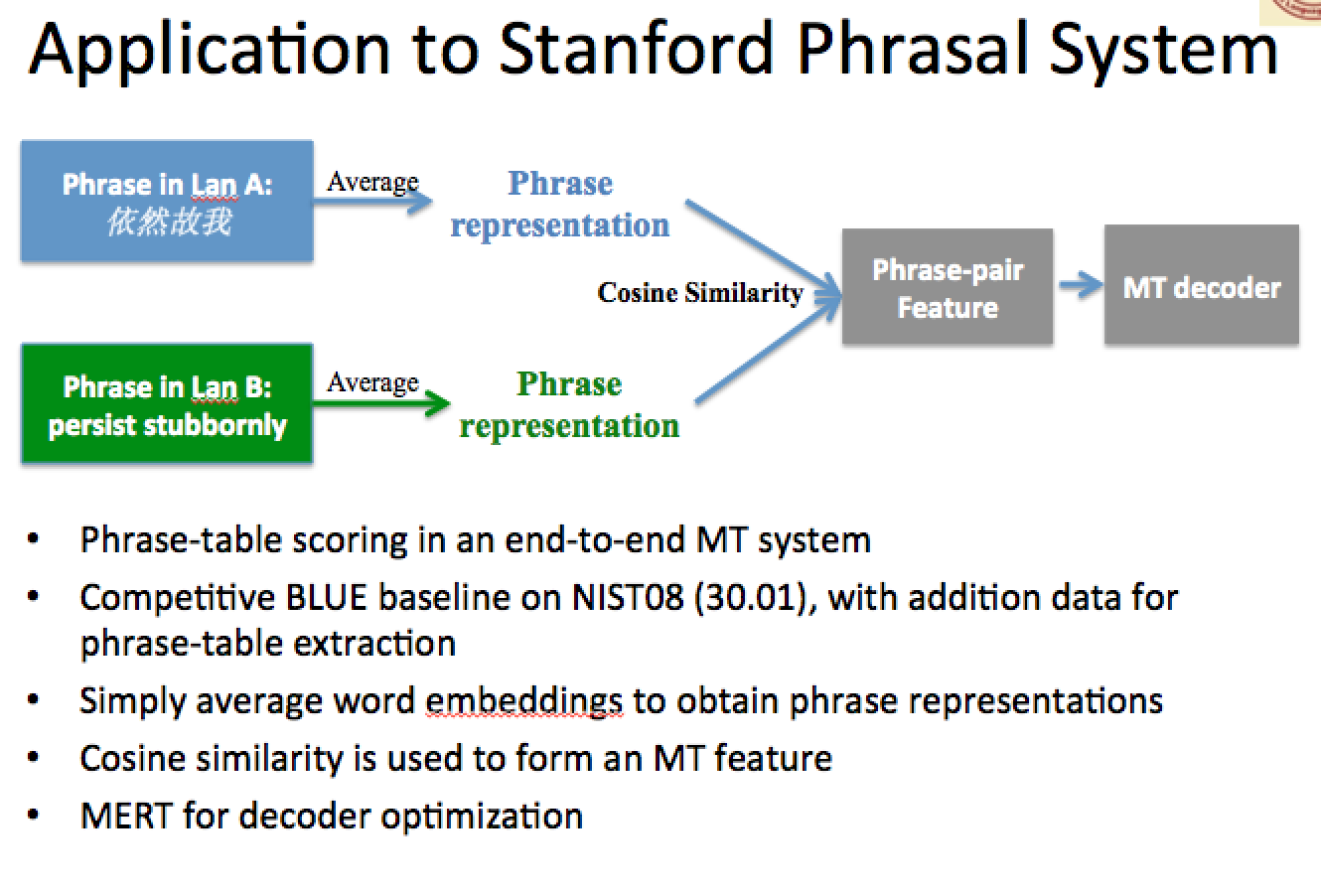

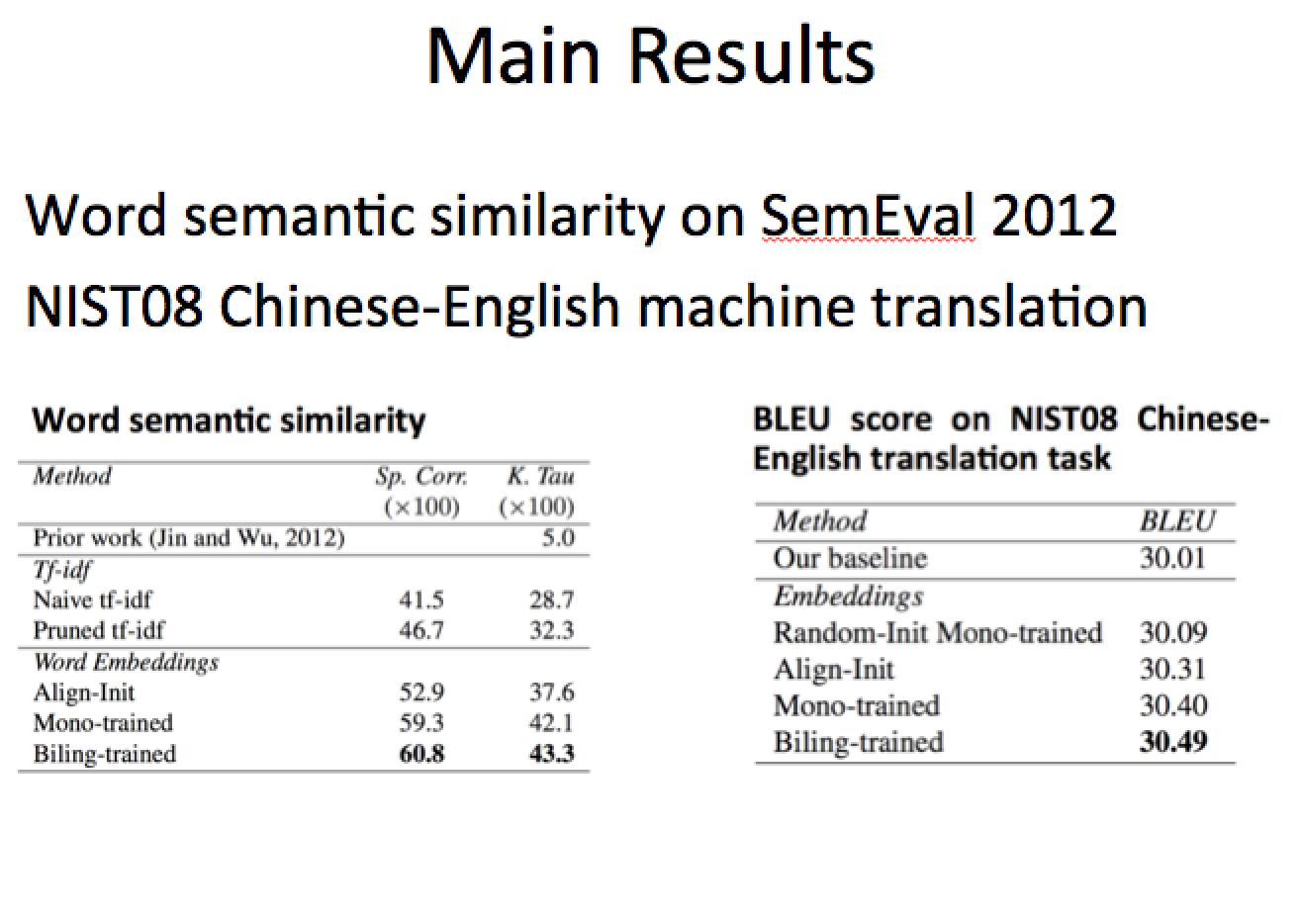

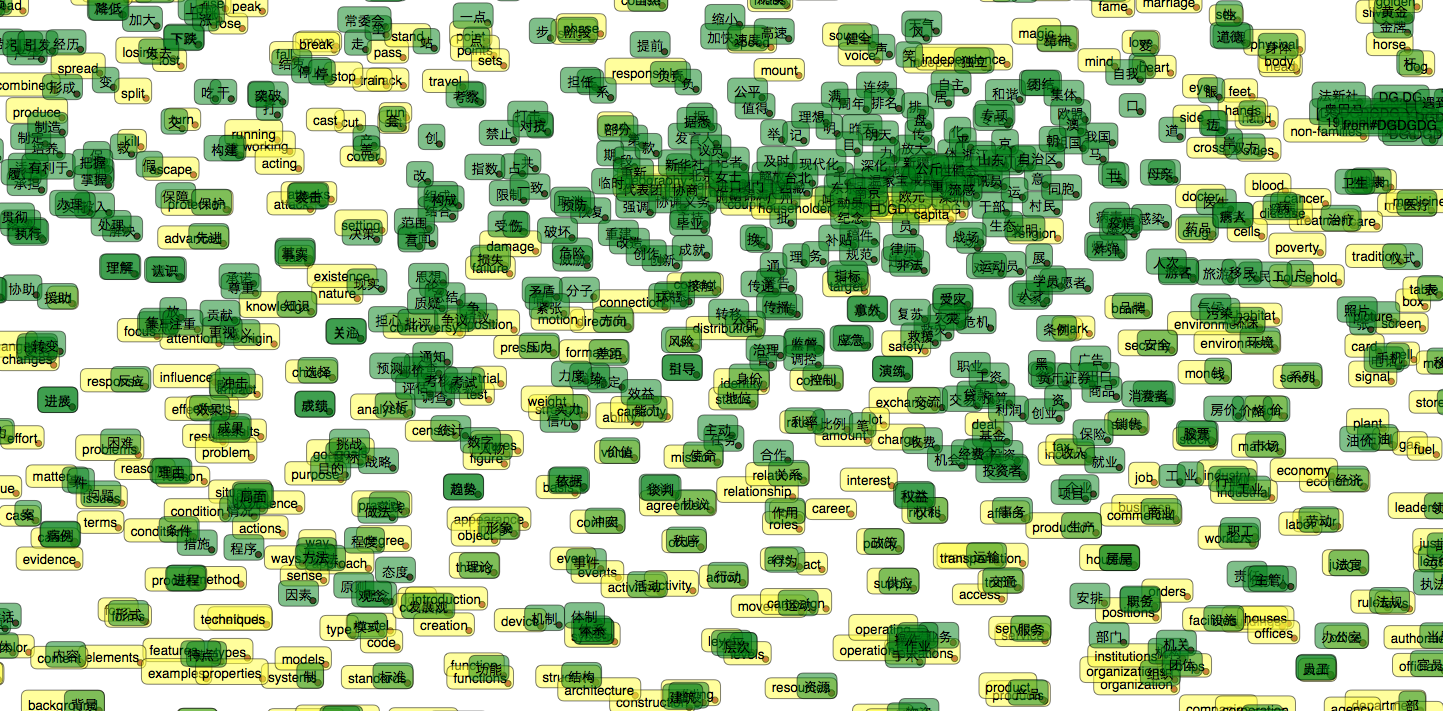

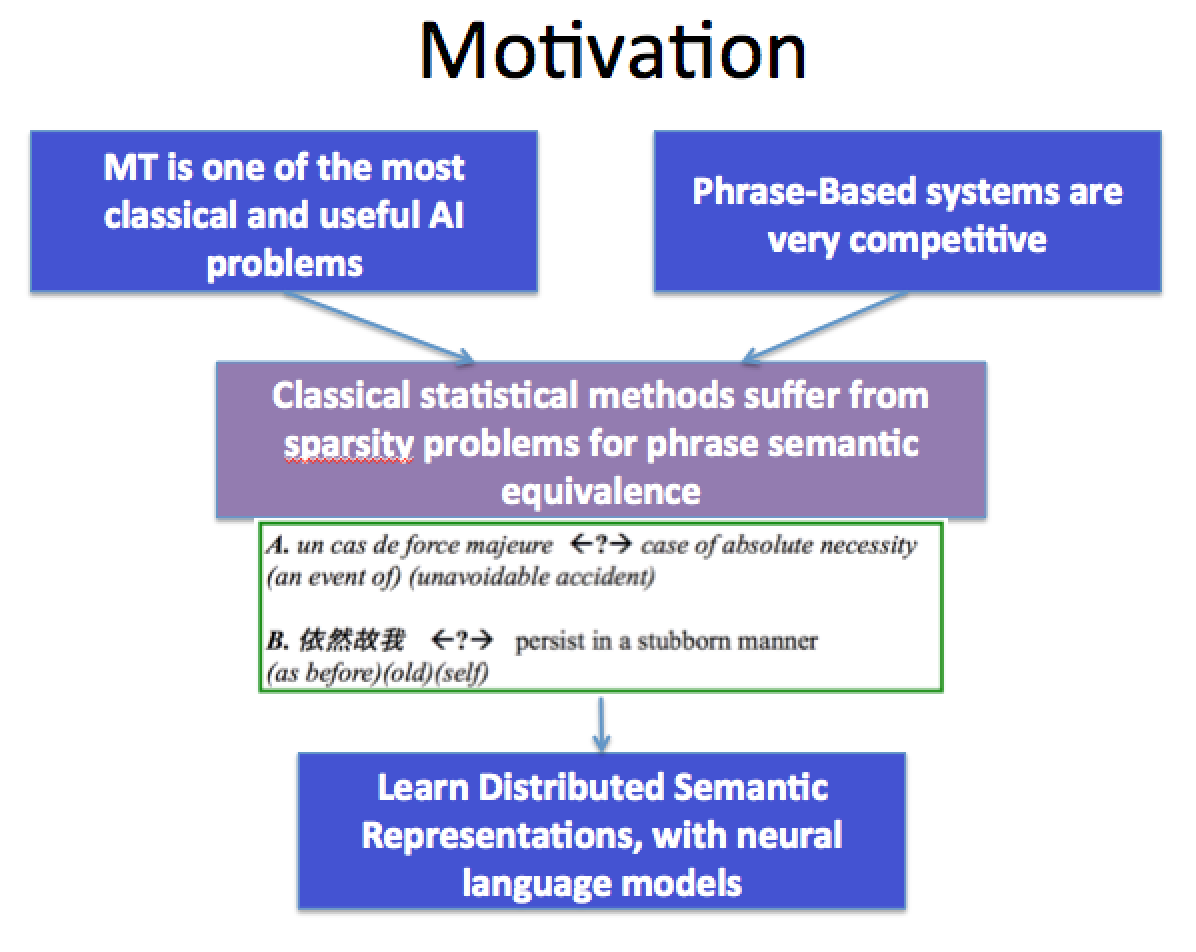

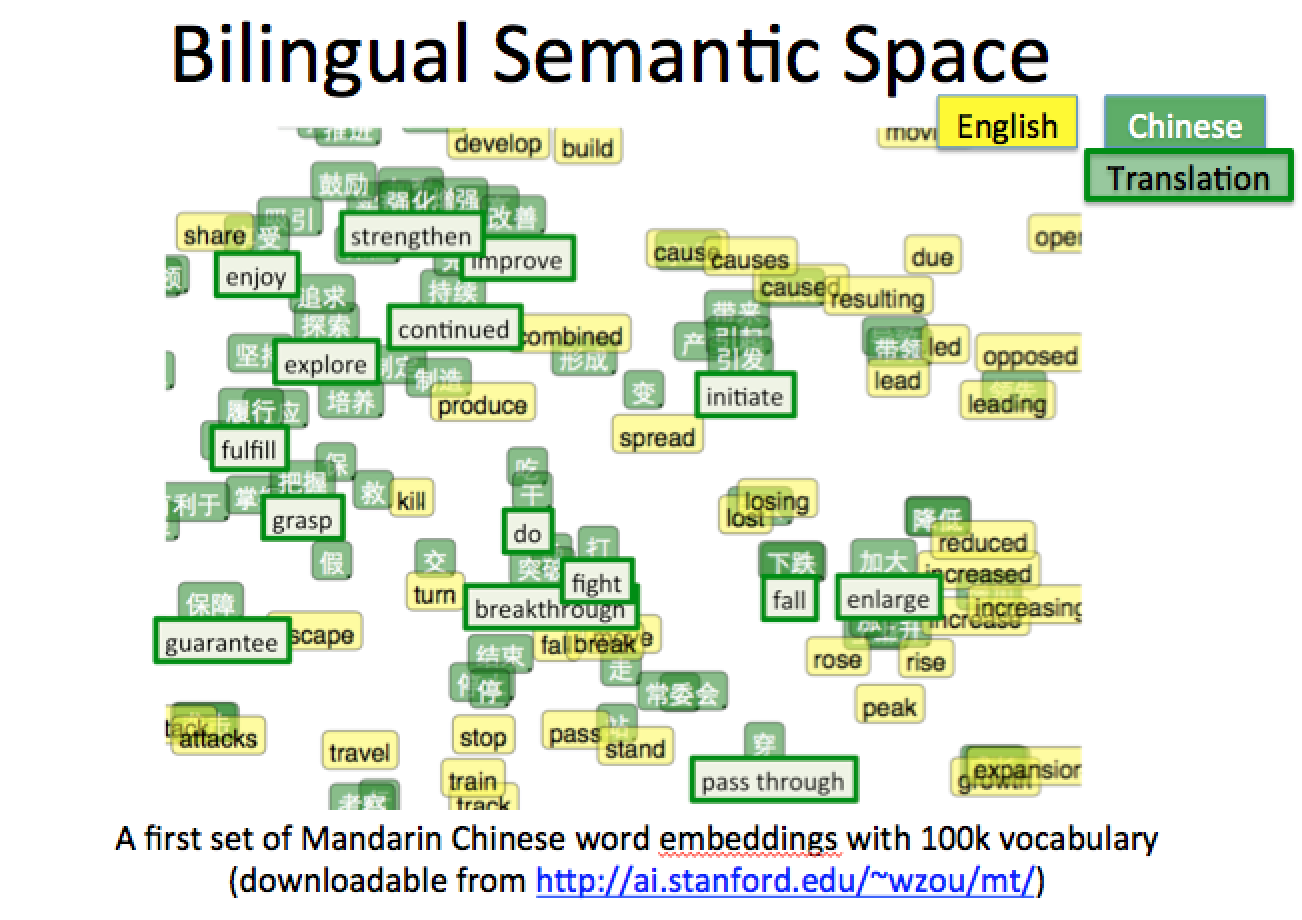

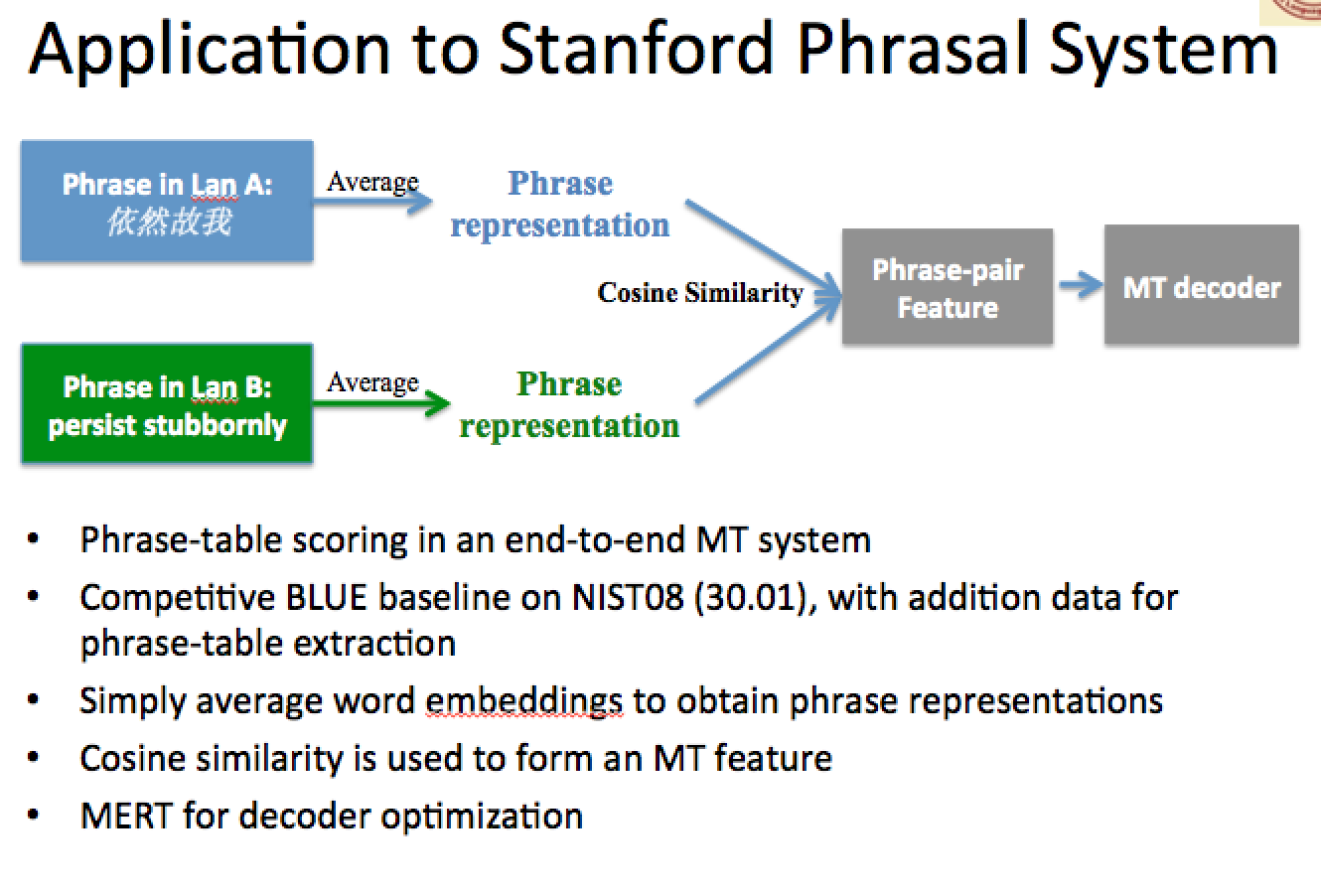

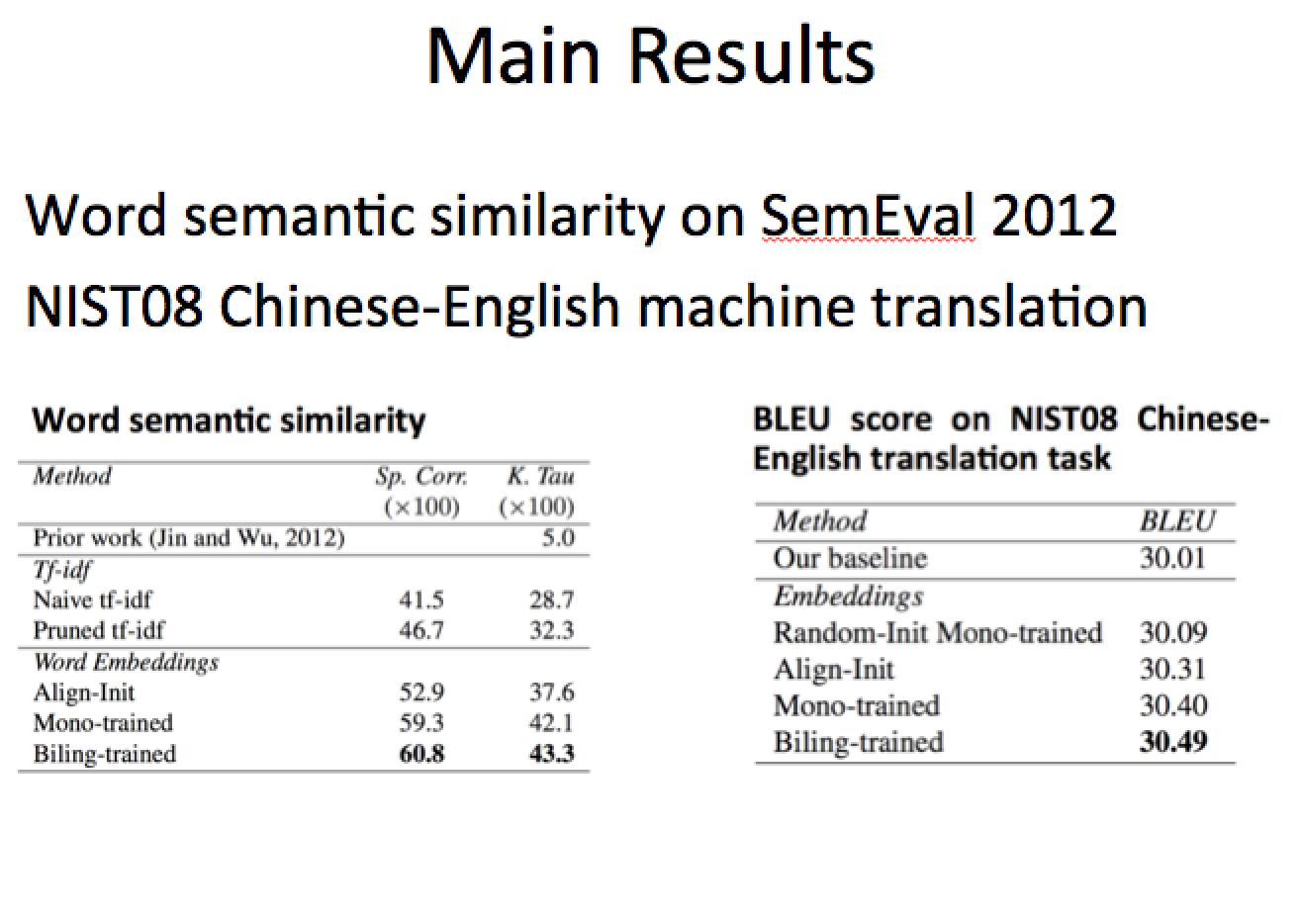

We introduce bilingual word embeddings: semantic embeddings associated across two languages in the context of neural language models. We propose a method to learn bilingual embeddings from a large unlabeled corpus, while utilizing MT word alignments to constrain translational equivalence. The new embeddings significantly outperform baselines in word semantic similarity. A single semantic similarity feature induced with bilingual embeddings adds nearly half a BLEU point to the results on the NIST08 Chinese-English machine translation task.
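To make the idea of a semantic similarity feature concrete, here is a minimal sketch of how a phrase pair might be scored once both languages share one embedding space. It is an illustration under stated assumptions, not the paper's implementation: averaging word vectors to represent a phrase, the function names, and the two-dimensional toy vectors are all hypothetical.

```python
import math

def average_vector(words, embeddings):
    """Average the vectors of the words that appear in the embedding table."""
    vectors = [embeddings[w] for w in words if w in embeddings]
    if not vectors:
        return None  # no known words in this phrase
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def phrase_similarity(src_words, tgt_words, src_emb, tgt_emb):
    """Score a source/target phrase pair by the cosine of their averaged vectors.

    Assumption: src_emb and tgt_emb live in the same vector space, which is
    what bilingual embeddings are meant to provide.
    """
    src_vec = average_vector(src_words, src_emb)
    tgt_vec = average_vector(tgt_words, tgt_emb)
    if src_vec is None or tgt_vec is None:
        return 0.0
    return cosine_similarity(src_vec, tgt_vec)

# Hypothetical toy embeddings, invented for illustration only.
zh_emb = {"猫": [0.9, 0.1], "狗": [0.1, 0.9]}
en_emb = {"cat": [1.0, 0.0], "dog": [0.0, 1.0], "the": [0.5, 0.5]}

good_pair = phrase_similarity(["猫"], ["the", "cat"], zh_emb, en_emb)
bad_pair = phrase_similarity(["猫"], ["the", "dog"], zh_emb, en_emb)
```

With these toy vectors, `good_pair` comes out higher than `bad_pair`. In a phrase-based system, a score of this kind could be attached to each phrase-table entry as one additional feature.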

Downloads

EMNLP Paper

EMNLP Poster

Slides

Bilingual Embeddings