The Thirty-ninth International Conference on Machine Learning (ICML) 2022 is being hosted July 17th to 23rd. We’re excited to share all the work from the Stanford AI Lab (SAIL) that’s being presented, and you’ll find links to papers, videos, and blogs below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

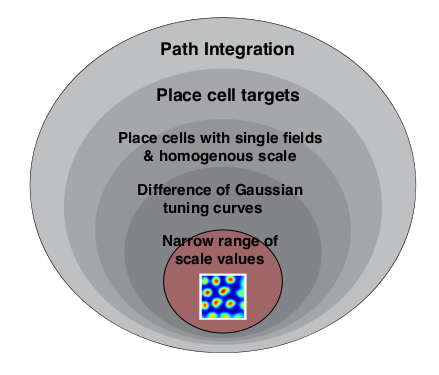

No Free Lunch from Deep Learning in Neuroscience: A Case Study through Models of the Entorhinal-Hippocampal Circuit

Contact: rschaef@cs.stanford.edu

Keywords: deep learning, neuroscience

Self-Destructing Models: Increasing the Costs of Harmful Dual Uses in Foundation Models

Contact: phend@stanford.edu

Keywords: foundation models, ai safety, meta-learning

Streaming Inference for Infinite Feature Models

Contact: rschaef@cs.stanford.edu

Keywords: variational inference, combinatorial stochastic processes, bayesian nonparametrics

A General Recipe for Likelihood-free Bayesian Optimization

Contact: jiaming.tsong@gmail.com

Links: Paper | Website

Keywords: bayesian optimization, likelihood-free inference

A State-Distribution Matching Approach to Non-Episodic Reinforcement Learning

Contact: architsh@stanford.edu

Links: Paper | Website

Keywords: reinforcement learning, continual learning, adversarial learning

Connect, Not Collapse: Explaining Contrastive Learning for Unsupervised Domain Adaptation

Contact: kshen6@cs.stanford.edu

Award nominations: Long Talk

Links: Paper

Keywords: pre-training, representation learning, domain adaptation, contrastive learning, spectral graph theory

Contrastive Adapters for Foundation Model Group Robustness

Contact: mzhang@cs.stanford.edu

Links: Paper

Keywords: robustness, foundation models, lightweight tuning, adapters

Correct-N-Contrast: A Contrastive Approach for Improving Robustness to Spurious Correlations

Contact: mzhang@cs.stanford.edu

Award nominations: Long Talk (Oral)

Links: Paper

Keywords: spurious correlations, robustness, contrastive learning

How to Leverage Unlabeled Data in Offline Reinforcement Learning

Contact: tianheyu@cs.stanford.edu

Links: Paper

Keywords: offline rl, deep rl

Improving Out-of-Distribution Robustness via Selective Augmentation

Contact: huaxiu@cs.stanford.edu

Links: Paper | Video

Keywords: out-of-distribution robustness, domain generalization, spurious correlation, distribution shifts, selective augmentation

Inducing Causal Structure for Interpretable Neural Networks

Contact: atticusg@gmail.com

Links: Paper | Website

Keywords: causality, interpretability

Integrating Reward Maximization and Population Estimation: Sequential Decision-Making for Internal Revenue Service Audit Selection

Contact: phend@cs.stanford.edu

Links: Paper

Keywords: bandits, real-world ml, sampling

Joint Entropy Search For Maximally-Informed Bayesian Optimization

Contact: lnardi@stanford.edu

Links: Paper | Website

Keywords: bayesian optimization, hyperparameter optimization, entropy search

Meaningfully Debugging Model Mistakes using Conceptual Counterfactual Explanations

Contact: merty@stanford.edu

Links: Paper

Keywords: interpretability, counterfactual explanations, concept-based explanations, reliable machine learning

Perfectly Balanced: Improving Transfer and Robustness of Supervised Contrastive Learning

Contact: mfchen@stanford.edu, danfu@cs.stanford.edu

Links: Paper | Blog Post | Video | Website

Keywords: contrastive learning, transfer learning, robustness

Understanding Dataset Difficulty with V-Usable Information

Contact: kawin@stanford.edu

Award nominations: Long Talk

Links: Paper

Keywords: dataset, interpretability, data-centric ai, information theory

We look forward to seeing you at ICML 2022!