The International Conference on Machine Learning (ICML) 2023 is being hosted July 23rd - 29th. We’re excited to share all the work from SAIL that’s being presented, and you’ll find links to papers, videos and blogs below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

Main Conference

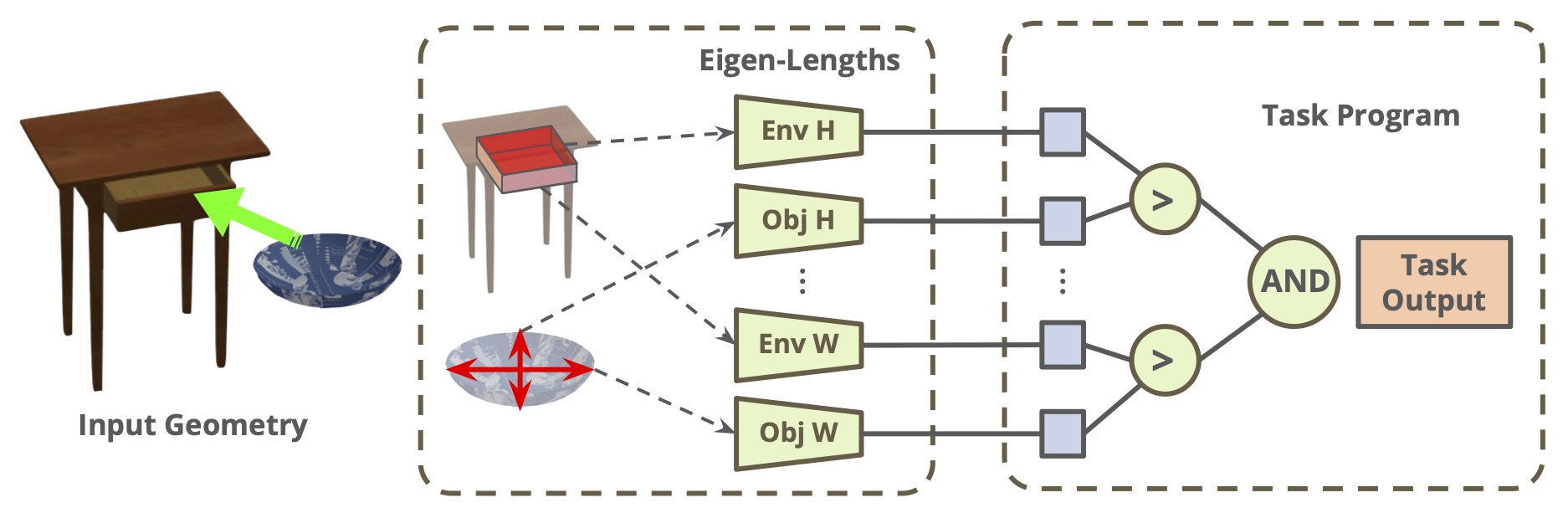

Towards Learning Geometry Eigen-Lengths Crucial for Fitting Tasks

Contact: yijiaw@stanford.edu

Keywords: geometric learning, eigen-length

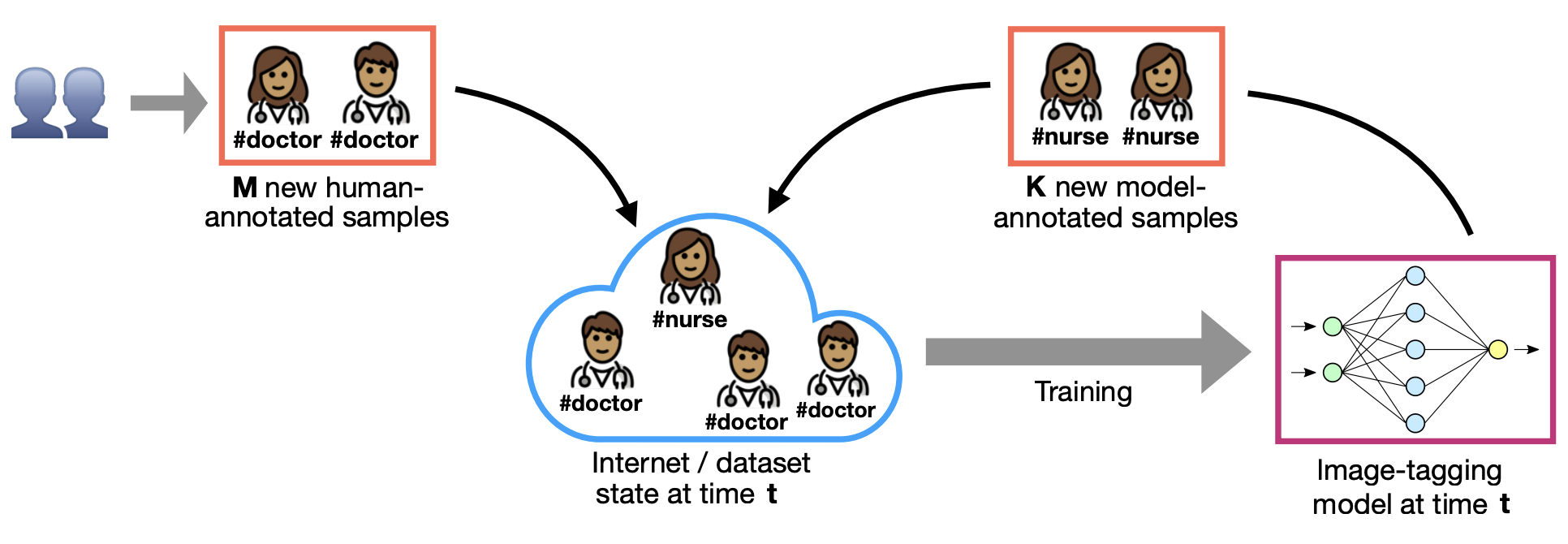

Data Feedback Loops: Model-driven Amplification of Dataset Biases

Contact: rtaori@stanford.edu

Award nominations: Oral

Links: Paper | Website

Keywords: feedback loops, bias amplification, deep learning, self-supervised learning, cv, nlp

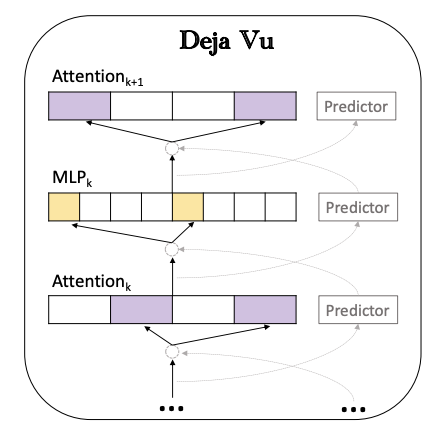

Deja Vu: Contextual Sparsity for Efficient LLMs at Inference Time

Contact: beidic@andrew.cmu.edu

Links: Paper

Keywords: large language models, efficient inference

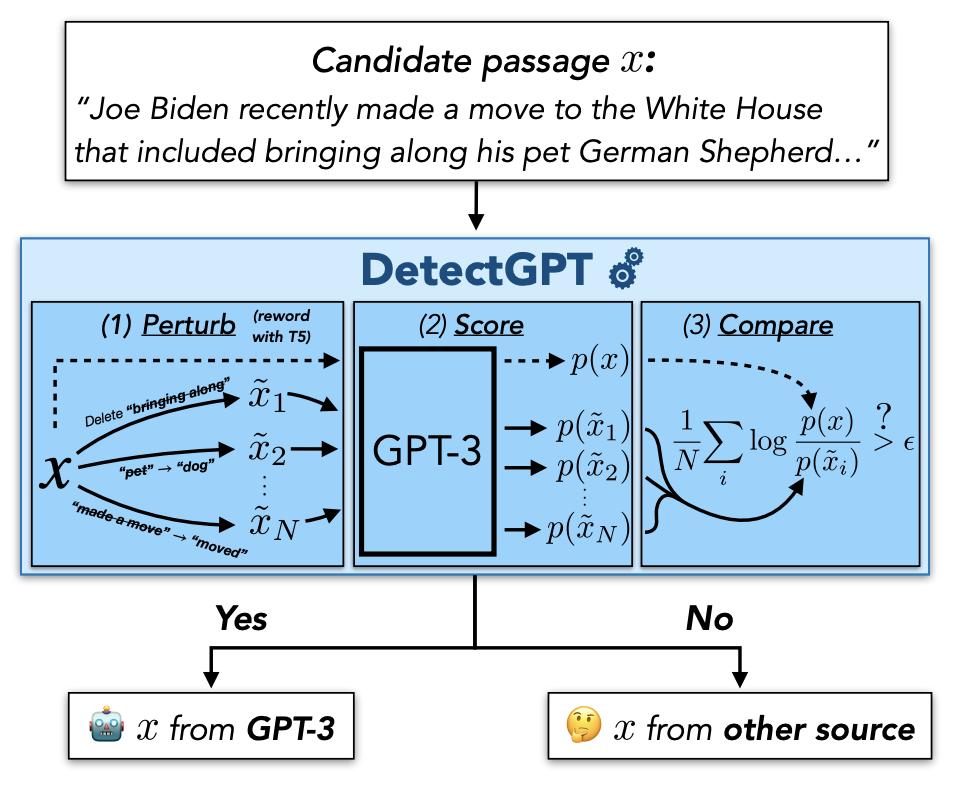

DetectGPT: Zero-Shot Machine-Generated Text Detection using Probability Curvature

Contact: eric.mitchell@cs.stanford.edu

Award nominations: Oral

Links: Paper | Website

Keywords: detection, zero-shot, language generation, curvature, deepfake

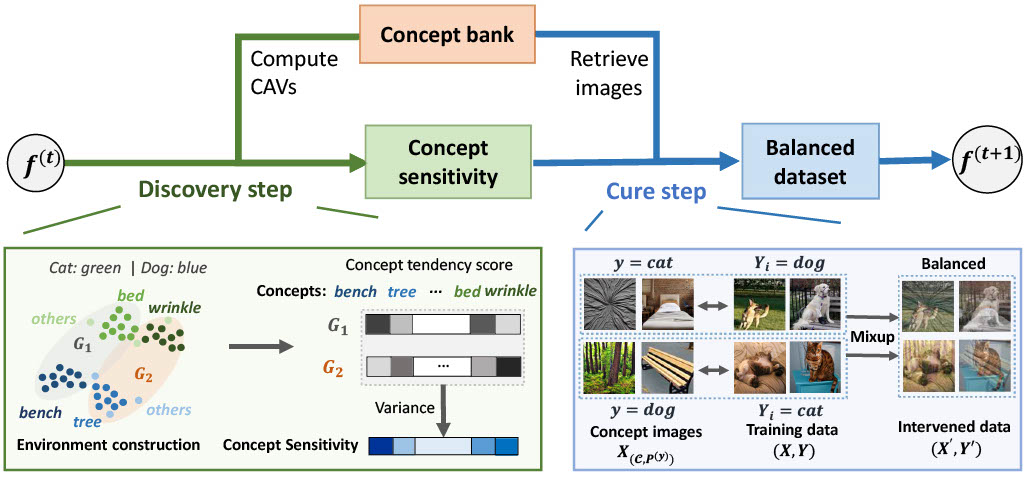

Discover and Cure: Concept-aware Mitigation of Spurious Correlation

Contact: shirwu@cs.stanford.edu

Links: Paper

Keywords: spurious correlation, generalization, interpretability, concept

Long Horizon Temperature Scaling

Contact: andyshih@stanford.edu

Links: Paper | Website

Keywords: temperature scaling, long horizon, nonmyopic, autoregressive models, diffusion models, inference, tractability

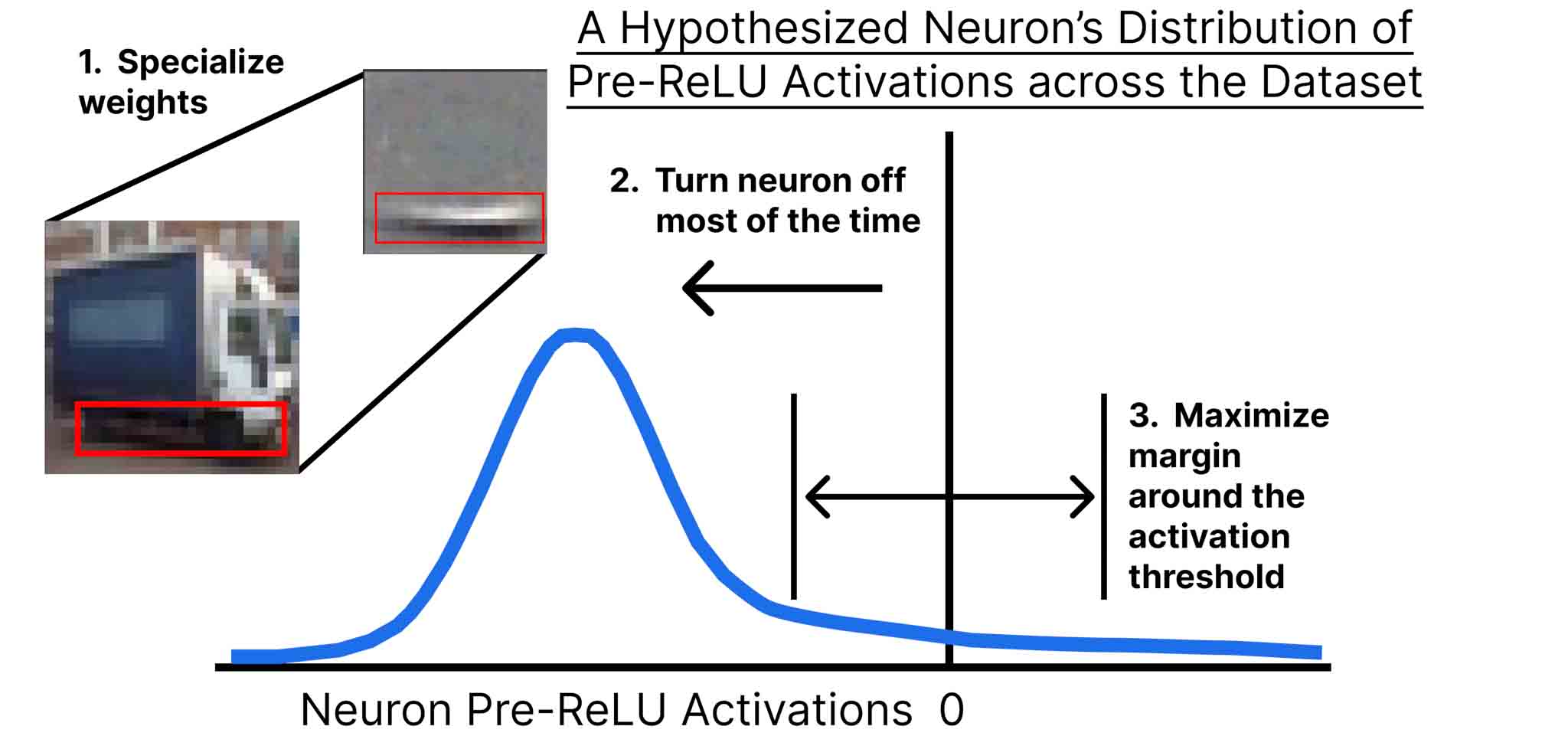

Emergence of Sparse Representations from Noise

Contact: trentonbricken@g.harvard.edu

Links: Paper

Keywords: sparsity, neural networks, neuroscience

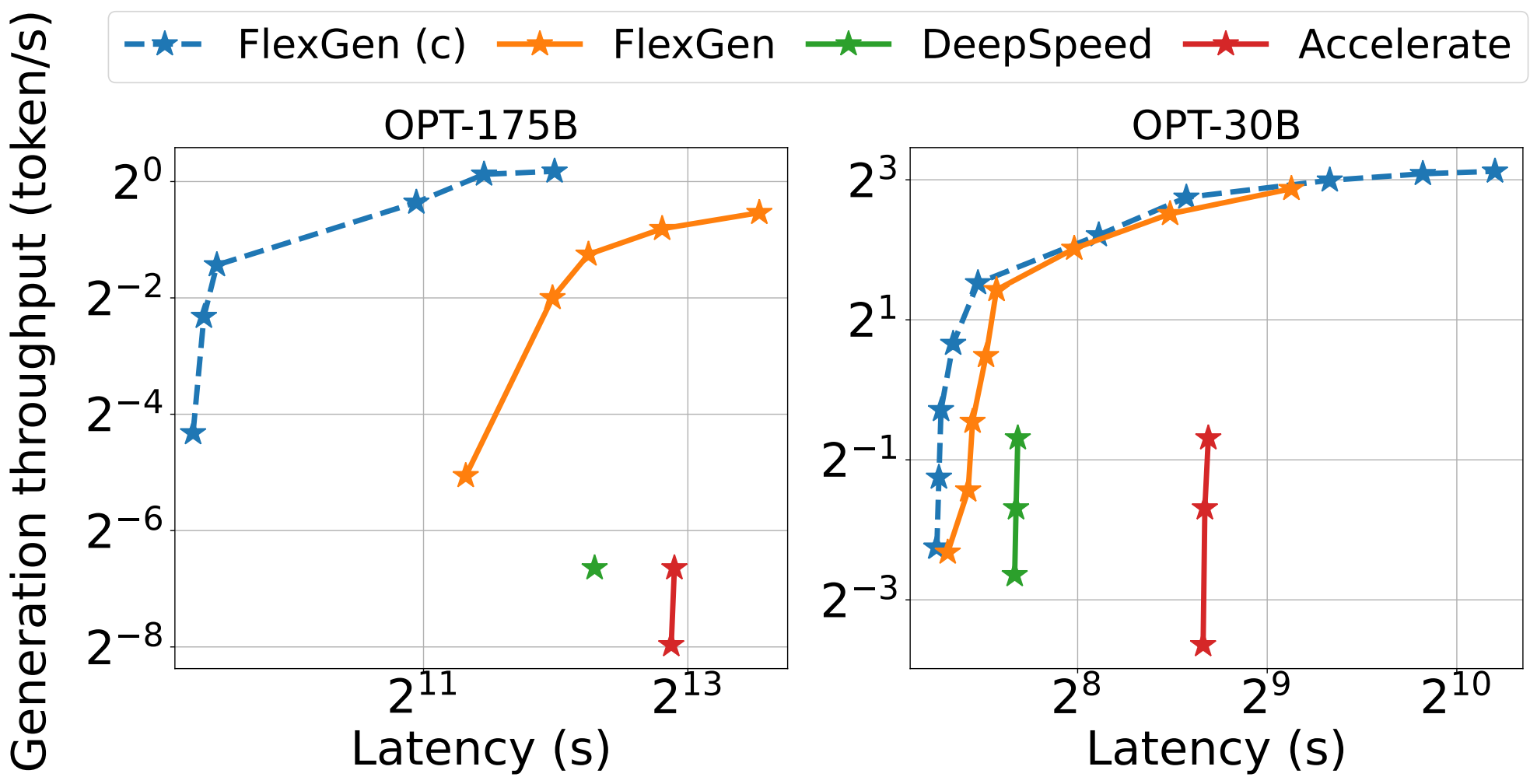

FlexGen: High-Throughput Generative Inference of Large Language Models with a Single GPU

Contact: ying1123@stanford.edu

Links: Paper | Website

Keywords: large language models, memory optimizations, offloading, compression, generative pre-trained transformers

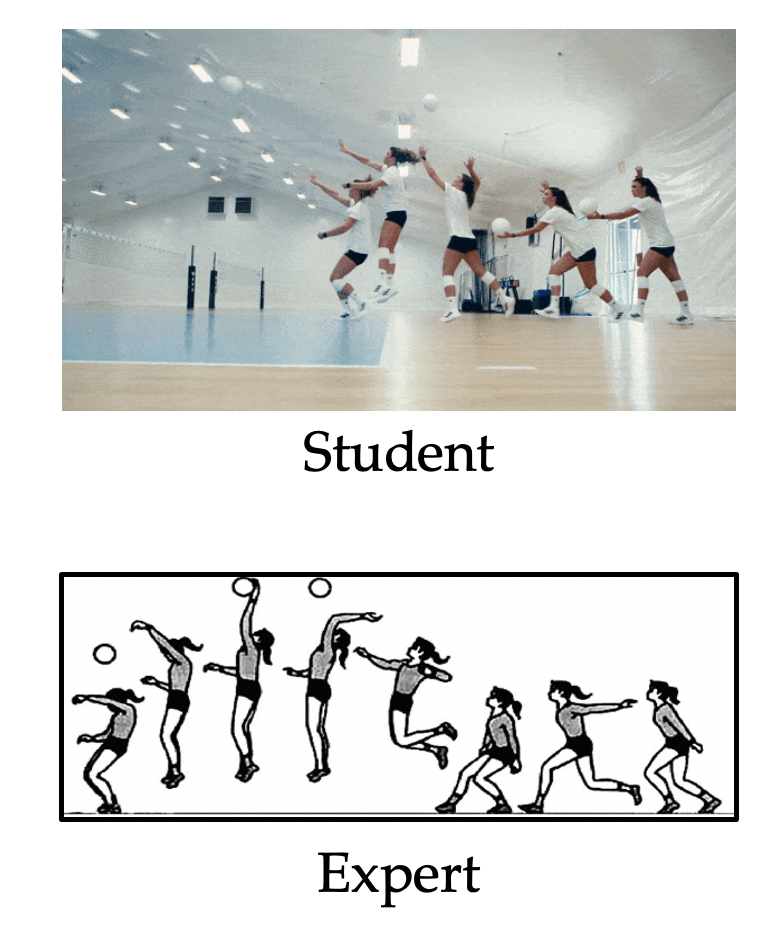

Generating Language Corrections for Teaching Physical Control Tasks

Contact: meghas@stanford.edu

Links: Paper

Keywords: education, language, human-ai interaction

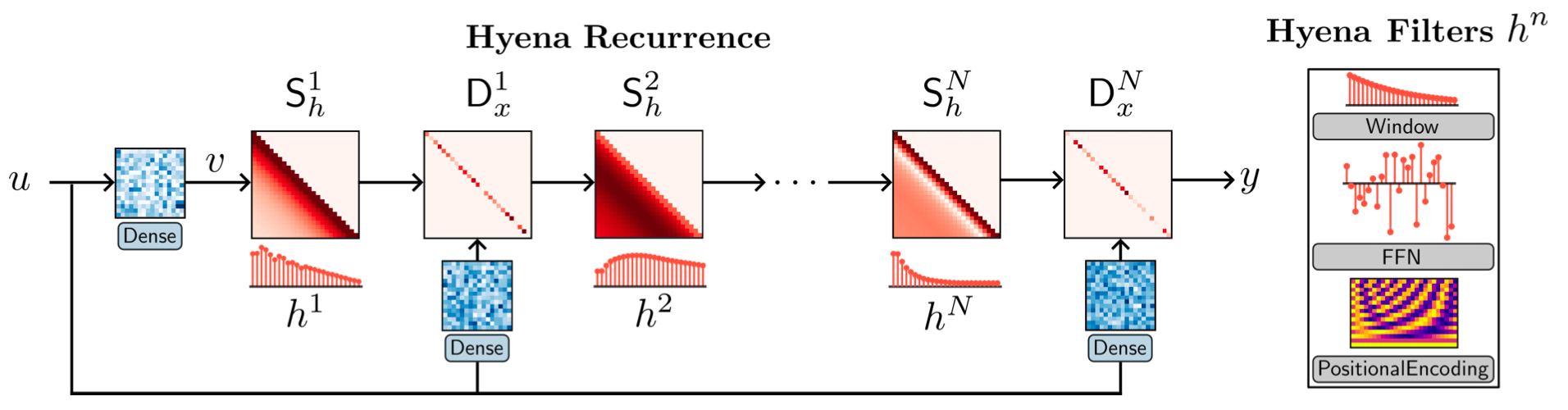

Hyena Hierarchy: Towards Larger Convolutional Language Models

Contact: poli@stanford.edu

Award nominations: Oral

Links: Paper | Blog Post

Keywords: long context, long convolution, large language models

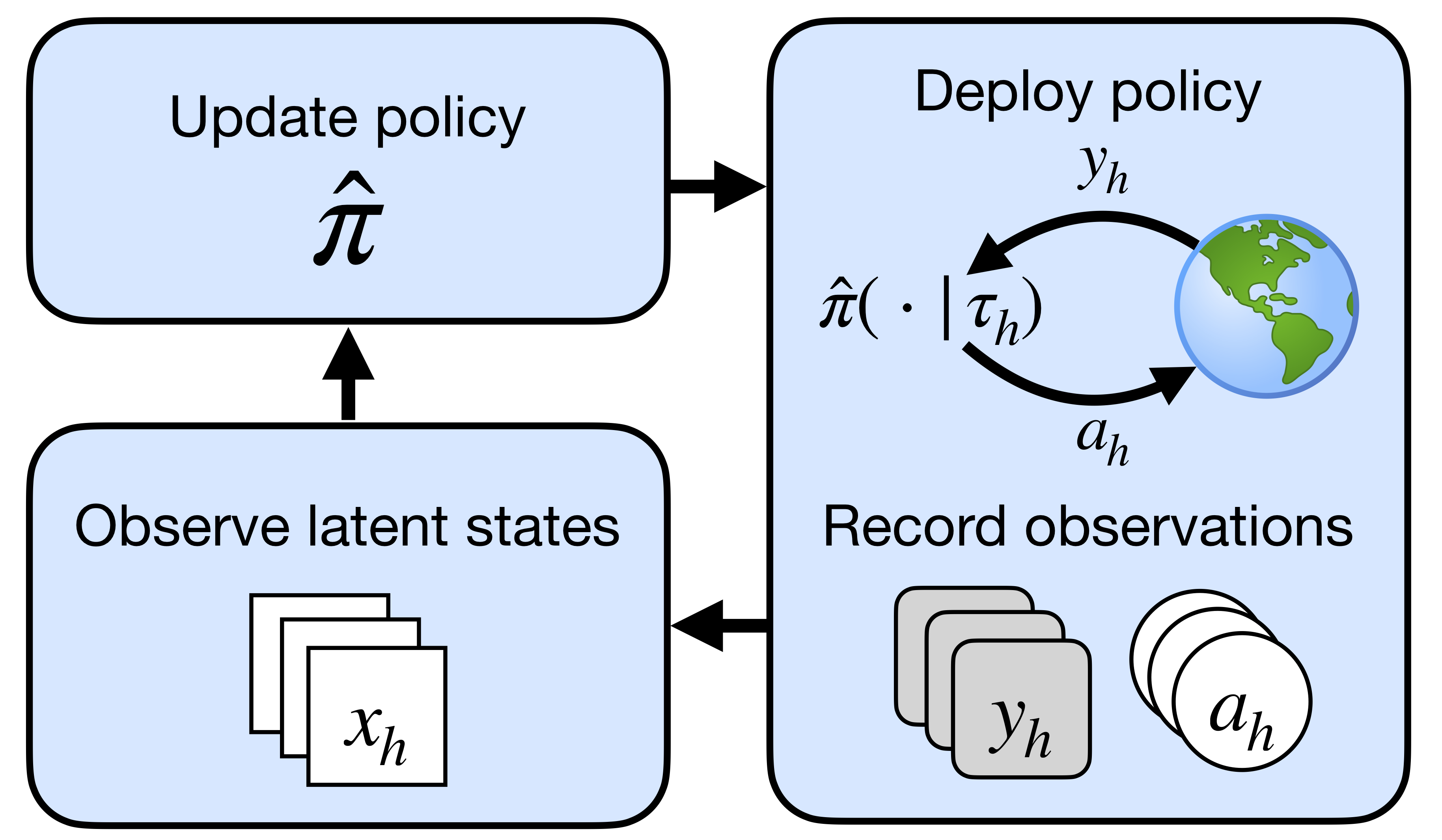

Learning in POMDPs is Sample-Efficient with Hindsight Observability

Contact: jnl@stanford.edu

Links: Paper

Keywords: reinforcement learning, partial observability, hindsight observability, pomdp, homdp, regret, learning theory, theory, sample complexity

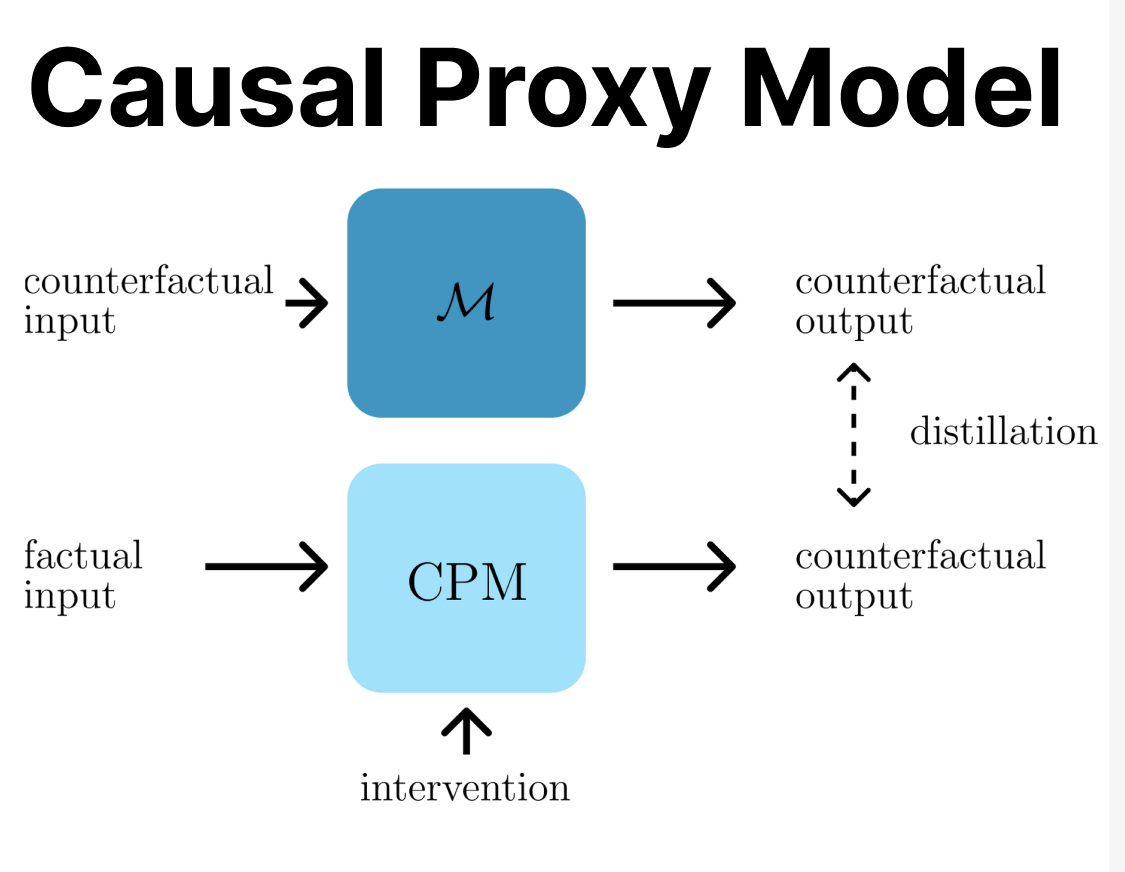

Causal Proxy Models For Concept-Based Model Explanations

Contact: kldooste@stanford.edu

Links: Paper | Website

Keywords: explainability, causality, concept-based explanations, causal explanations

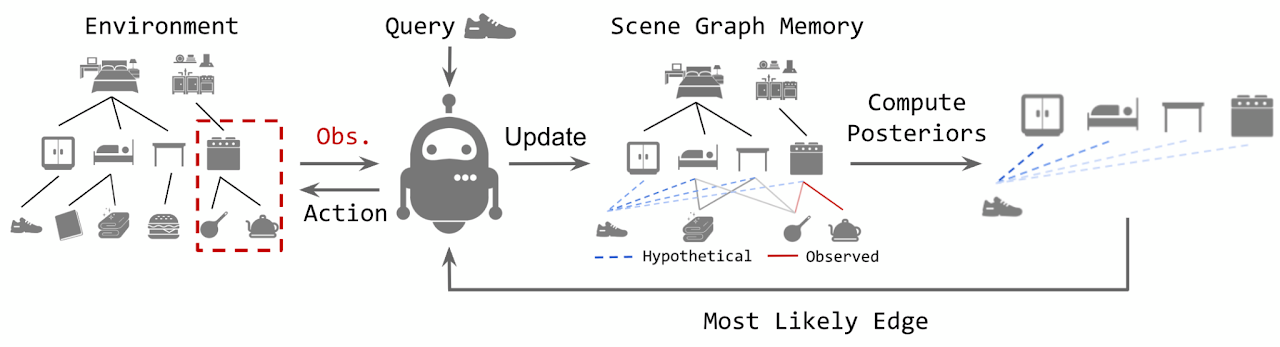

Modeling Dynamic Environments with Scene Graph Memory

Contact: andreyk@stanford.edu

Links: Paper

Keywords: graph neural network, embodied ai, link prediction

Motion Question Answering via Modular Motion Programs

Contact: markendo@stanford.edu

Links: Paper | Website

Keywords: question answering, human motion understanding, neuro-symbolic learning

One-sided matrix completion from two observations per row

Contact: shcao@stanford.edu

Links: Paper

Keywords: machine learning, icml, matrix completion, subspace estimation, high-dimensional statistics, random matrix theory

Optimal Sets and Solution Paths of ReLU Networks

Contact: mishkin@stanford.edu

Links: Paper

Keywords: convex optimization, relu networks, regularization path

Out-of-Domain Robustness via Targeted Augmentations

Contact: irena@cs.stanford.edu, ssagawa@cs.stanford.edu

Links: Paper

Keywords: robustness, data augmentation

Geometric Latent Diffusion Models for 3D Molecule Generation

Contact: minkai@cs.stanford.edu

Links: Paper | Website

Keywords: generative models, geometric representation learning, drug discovery

Reflected Diffusion Models

Contact: aaronlou@stanford.edu

Links: Paper | Blog Post

Keywords: diffusion models

Sequence Modeling with Multiresolution Convolutional Memory

Contact: ishijiaxin@gmail.com

Links: Paper | Website

Keywords: long-range, sequence modeling, convolution, multiresolution, wavelets, parameter-efficient

Simple Embodied Language Learning as a Byproduct of Meta-Reinforcement Learning

Contact: evanliu@cs.stanford.edu

Links: Paper

Keywords: meta-reinforcement learning, language learning, reinforcement learning

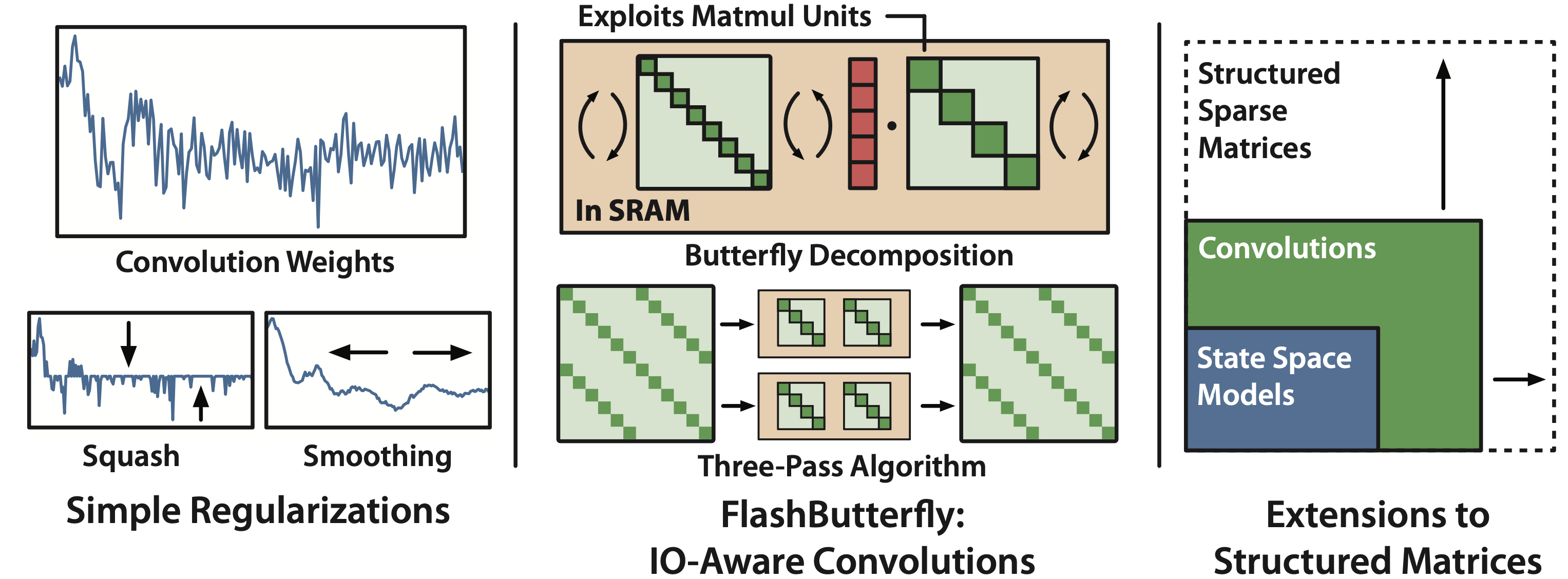

Simple Hardware-Efficient Long Convolutions for Sequence Modeling

Contact: danfu@cs.stanford.edu

Links: Paper | Blog Post

Keywords: convolutions, sequence modeling

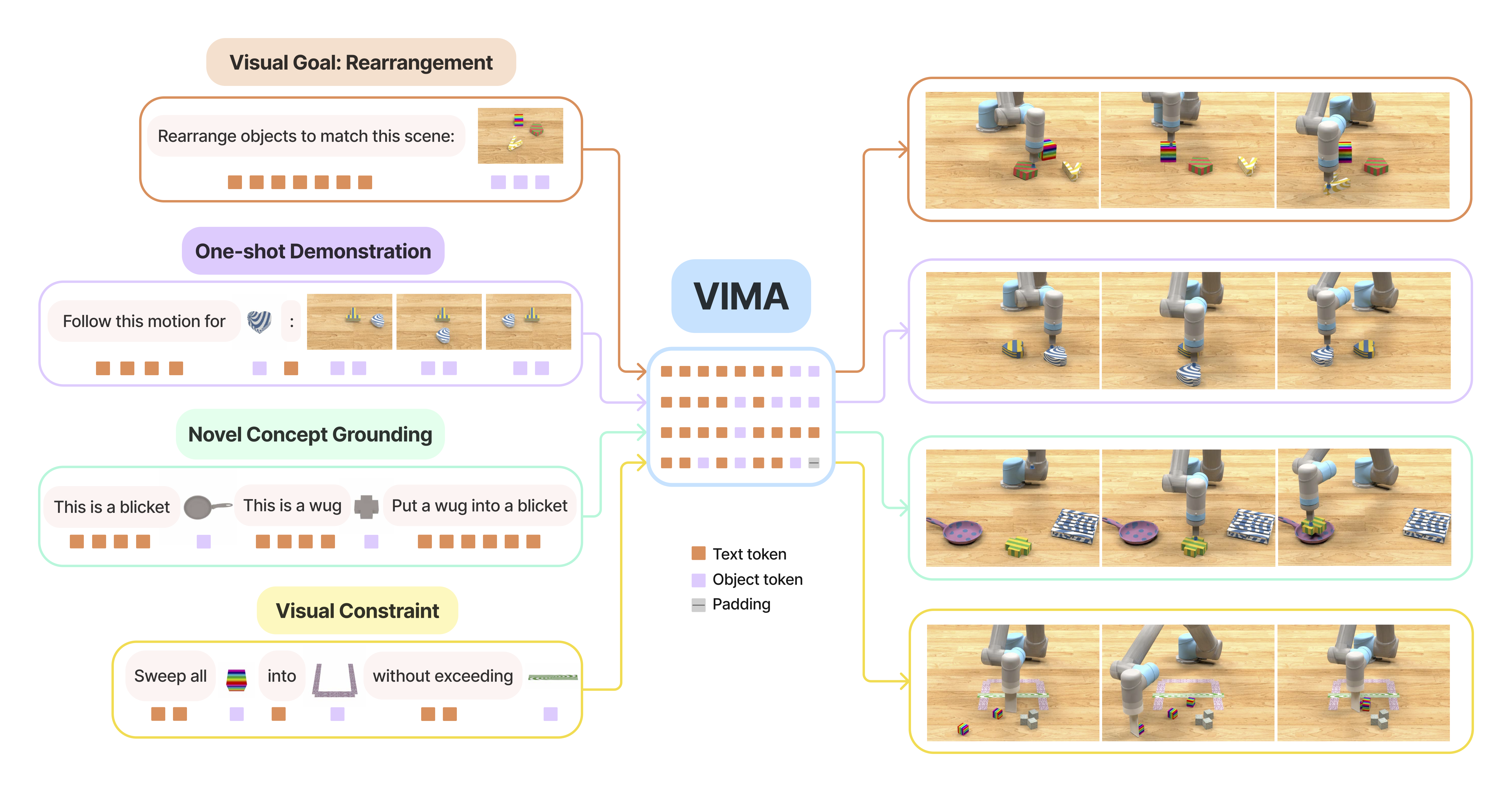

VIMA: General Robot Manipulation with Multimodal Prompts

Contact: yunfanj@cs.stanford.edu

Links: Paper | Website

Keywords: robot learning, foundation model, transformer, multi-task learning

Workshops

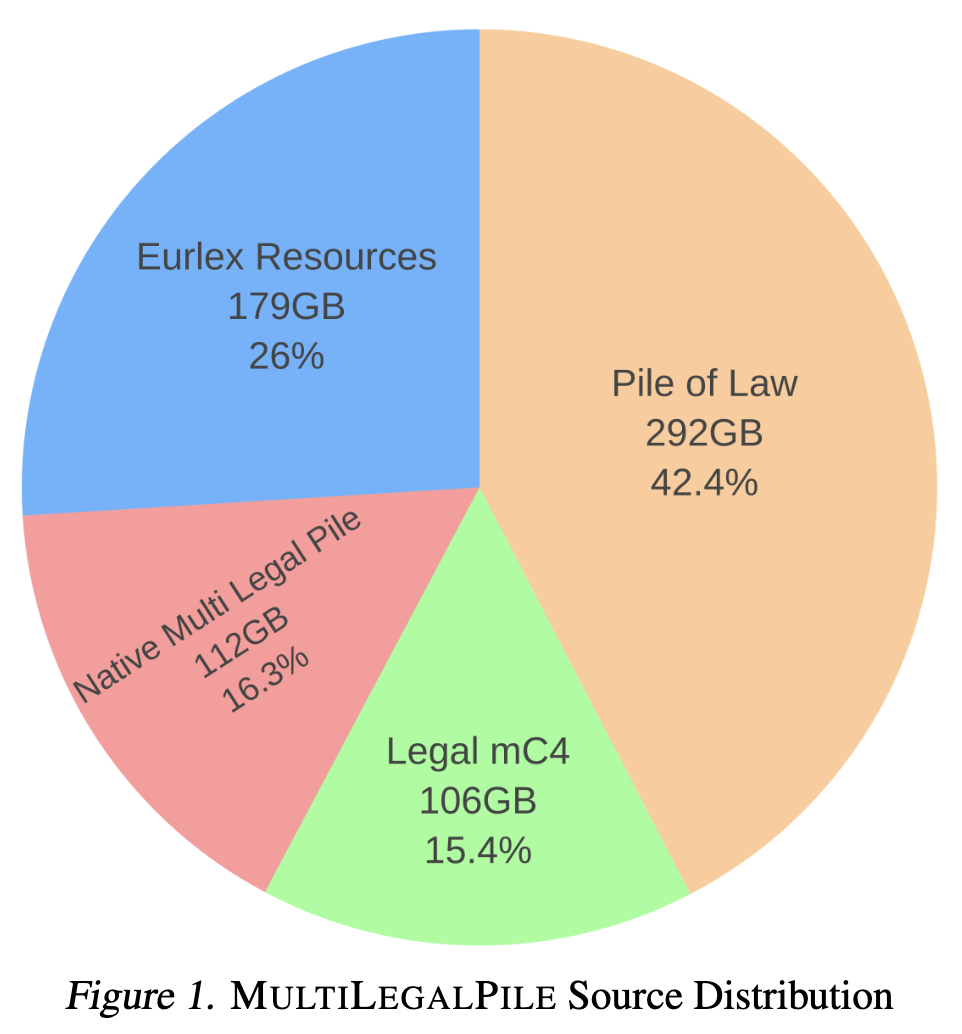

MultiLegalPile: A 689GB Multilingual Legal Corpus

Contact: jniklaus@stanford.edu

Workshop: DMLR

Links: Paper

Keywords: legal, law, corpus, language models, pretraining, large

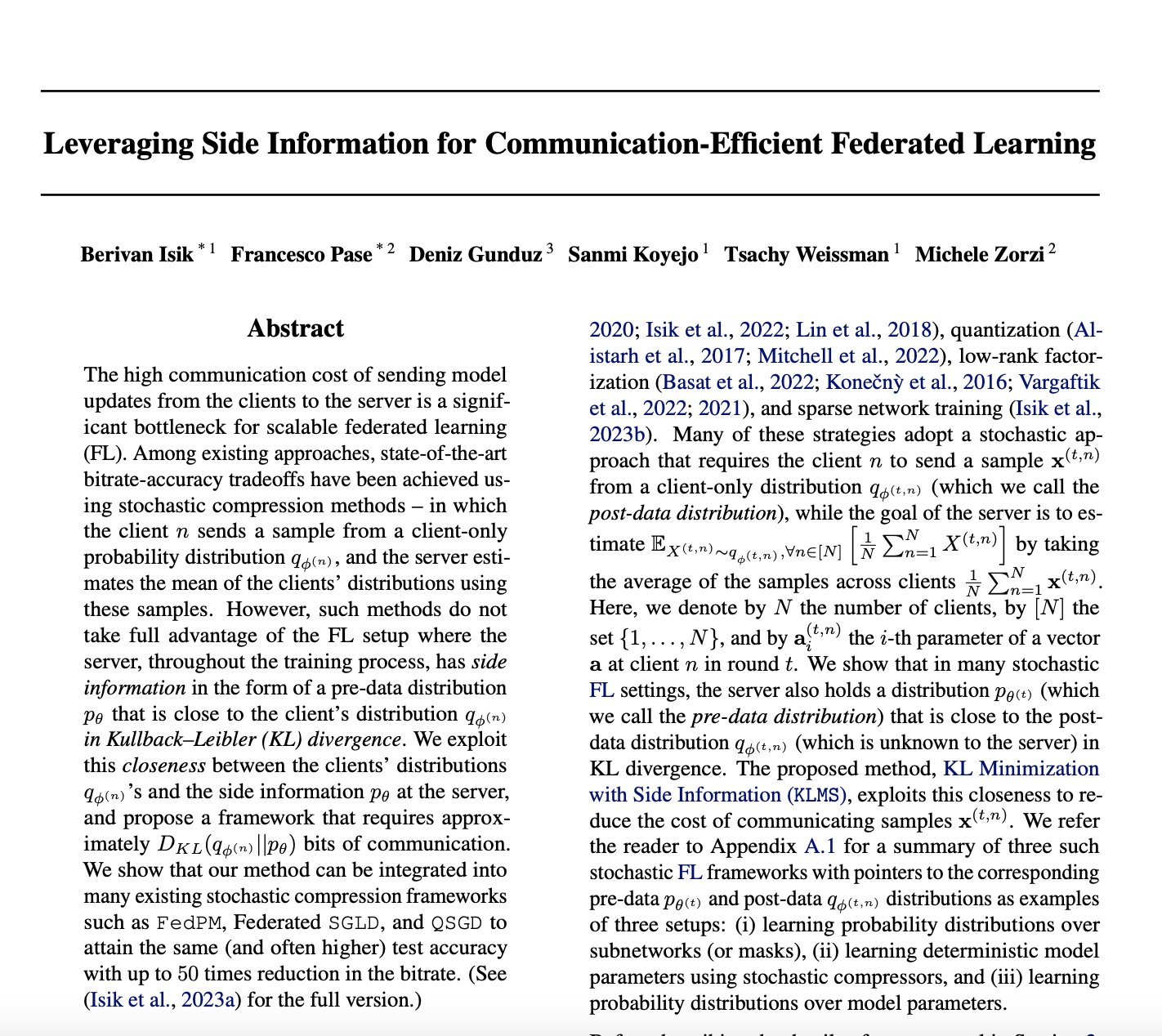

Leveraging Side Information for Communication-Efficient Federated Learning

Contact: berivan0@stanford.edu

Workshop: Workshop on Federated Learning and Analytics

Links: Paper

Keywords: federated learning, compression, importance sampling

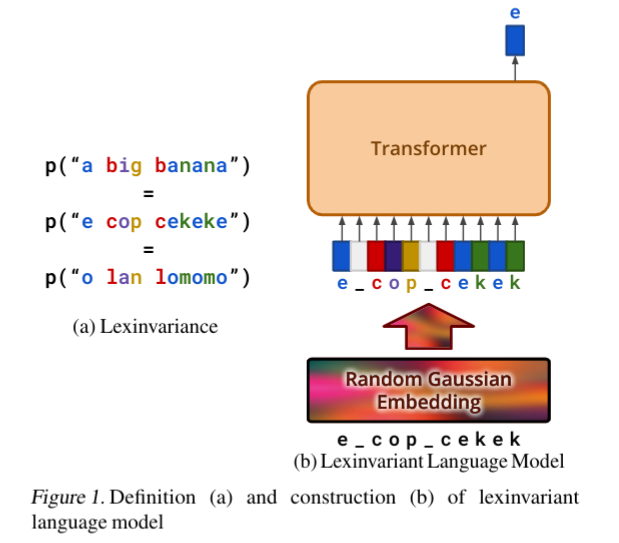

Lexinvariant Language Models

Contact: qhwang@stanford.edu

Workshop: ICML 2023 Workshop on Structured Probabilistic Inference & Generative Modeling

Links: Paper

Keywords: large language model, in-context learning, pretraining

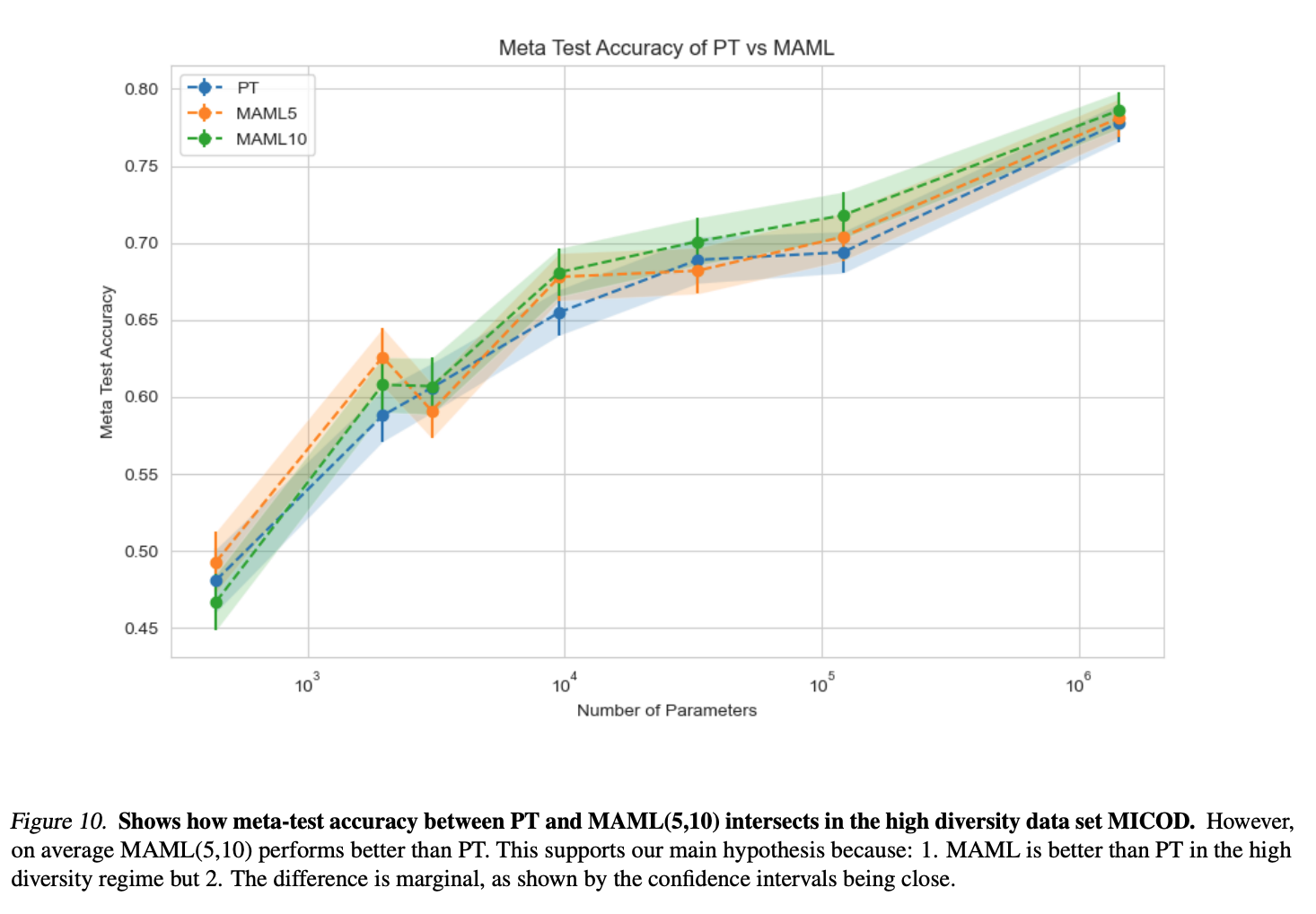

Is Pre-training Truly Better Than Meta-Learning?

Contact: brando9@stanford.edu

Workshop: ICML data centric workshop

Links: Paper

Keywords: meta-learning, general intelligence, machine learning, llm, pre-training, few-shot learning

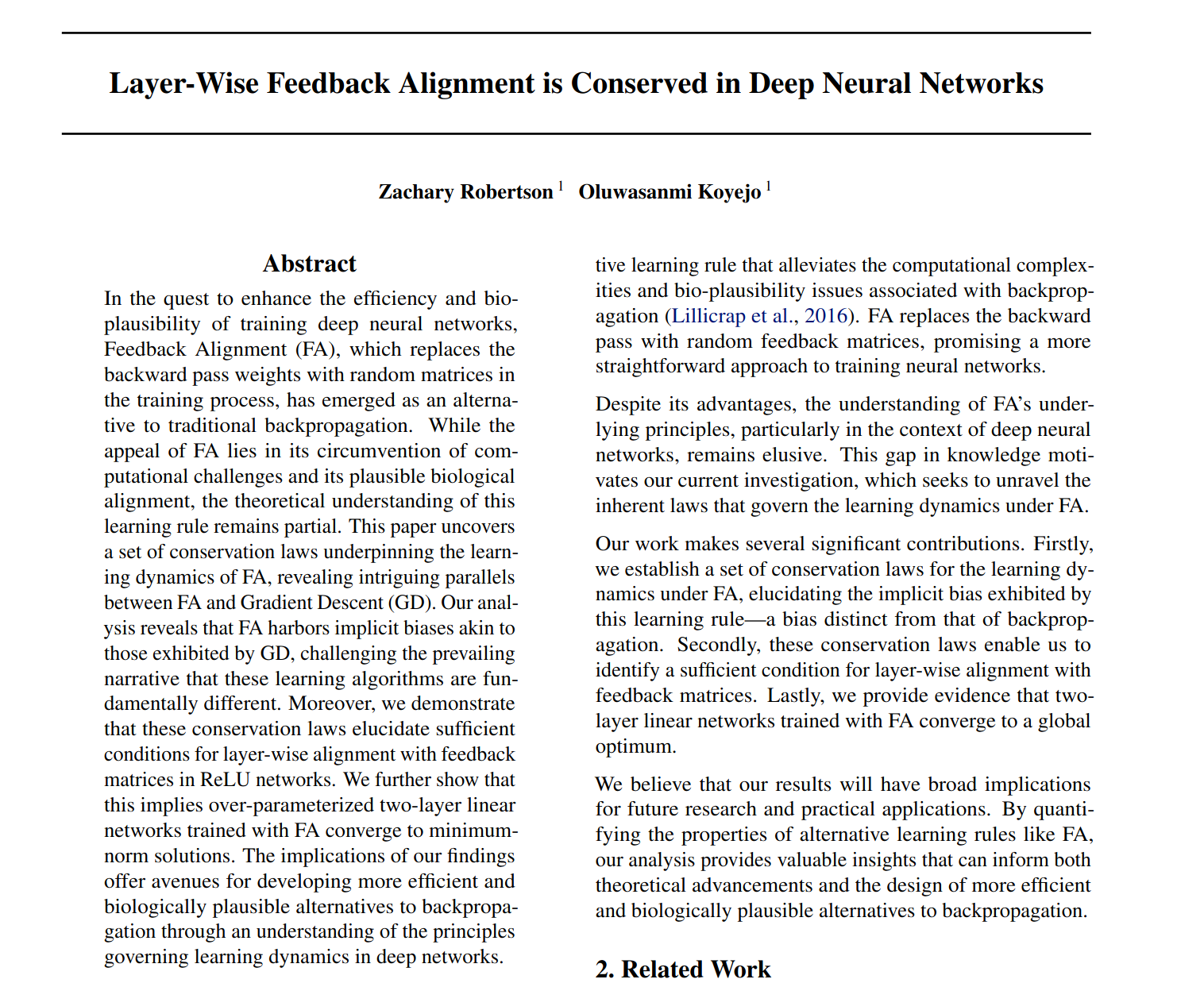

Layer-Wise Feedback Alignment is Conserved in Deep Neural Networks

Contact: zroberts@stanford.edu

Workshop: Localized Learning Workshop

Links: Paper

Keywords: deep learning, bio-plausible, implicit bias

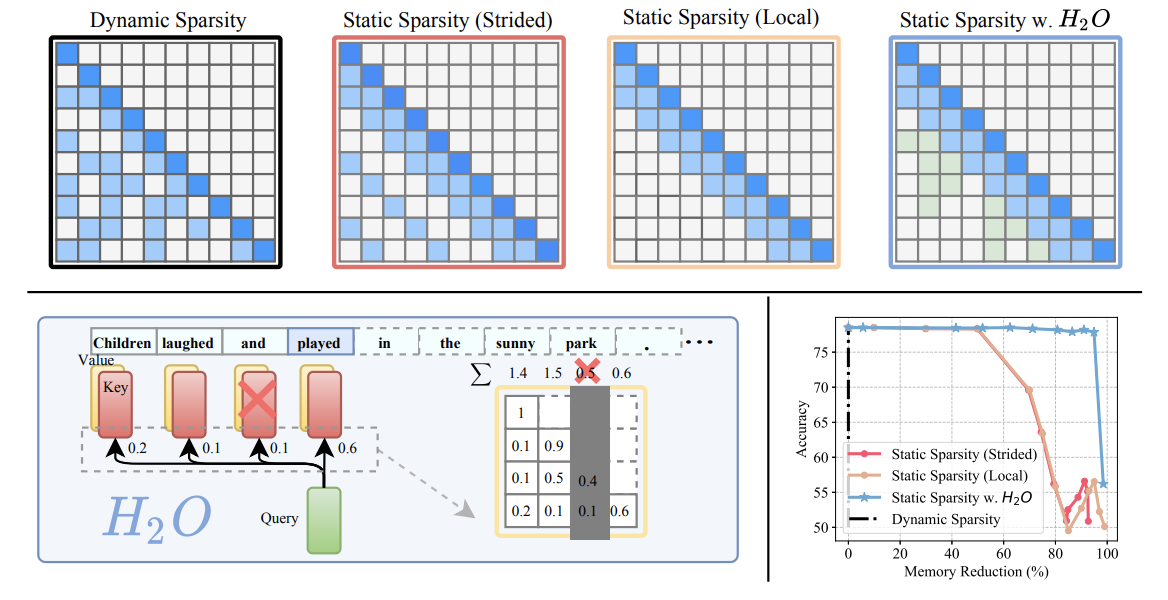

H2O: Heavy-Hitter Oracle for Efficient Generative Inference of Large Language Models

Contact: zhenyu.zhang@utexas.edu

Workshop: Efficient Systems for Foundation Models (ES-FoMo) Workshop

Links: Paper | Website

Keywords: large language models, efficient generative inference

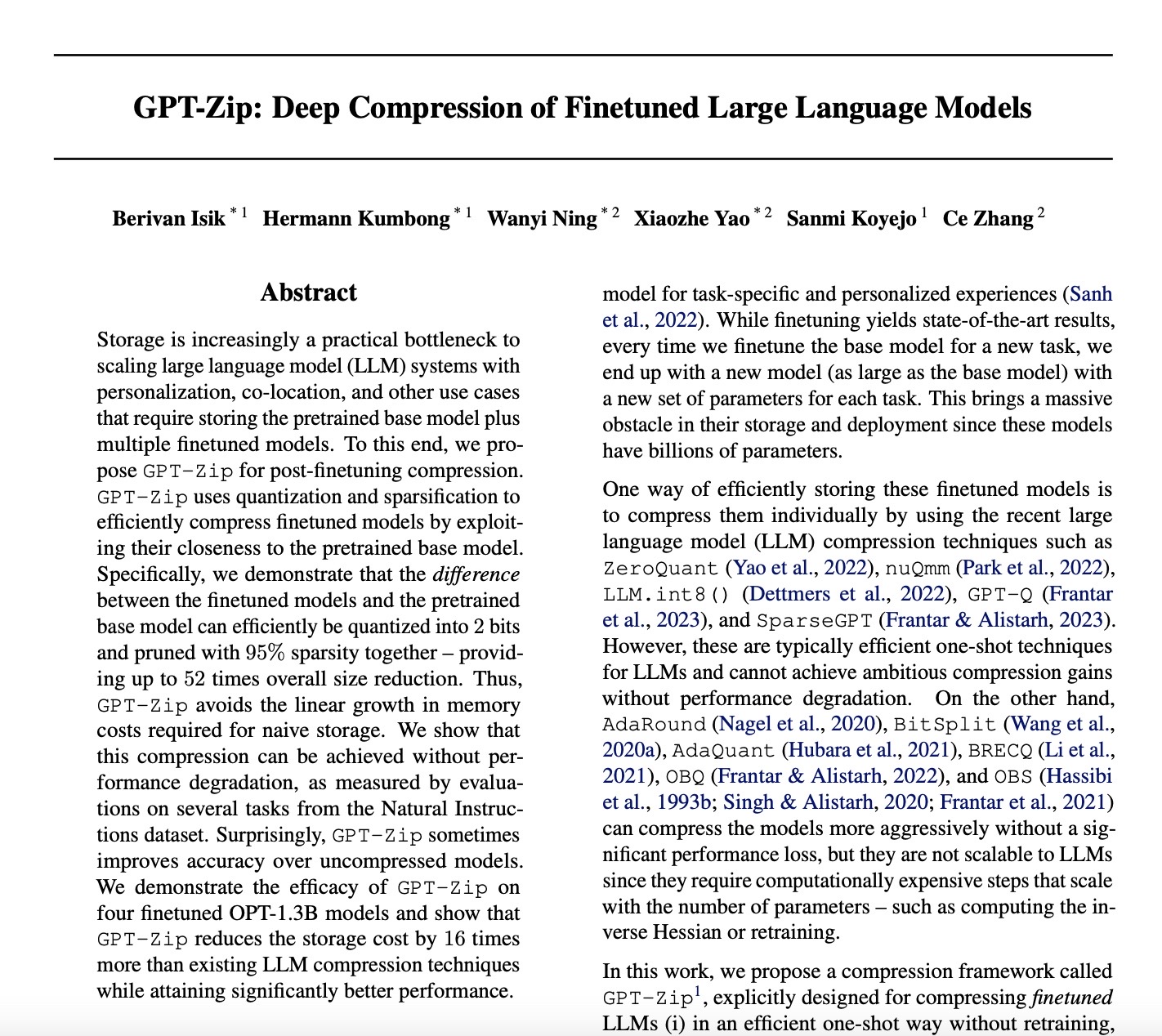

GPT-Zip: Deep Compression of Finetuned Large Language Models

Contact: berivan0@stanford.edu

Workshop: Workshop on Efficient Systems for Foundation Models

Links: Paper

Keywords: large language models, model compression, finetuning, scalable machine learning

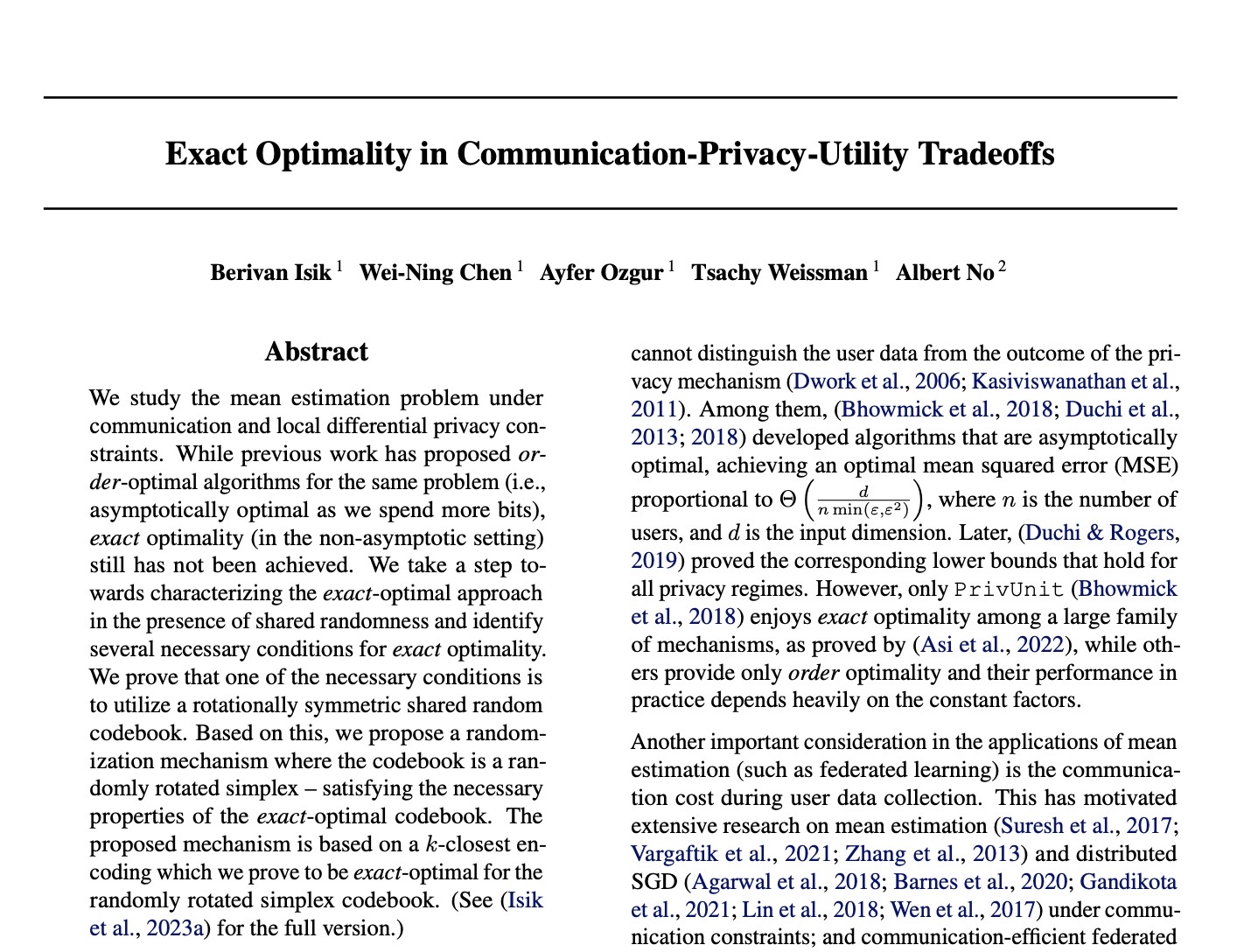

Exact Optimality in Communication-Privacy-Utility Tradeoffs

Contact: berivan0@stanford.edu

Workshop: Workshop on Federated Learning and Analytics in Practice

Links: Paper

Keywords: distributed mean estimation, differential privacy, compression

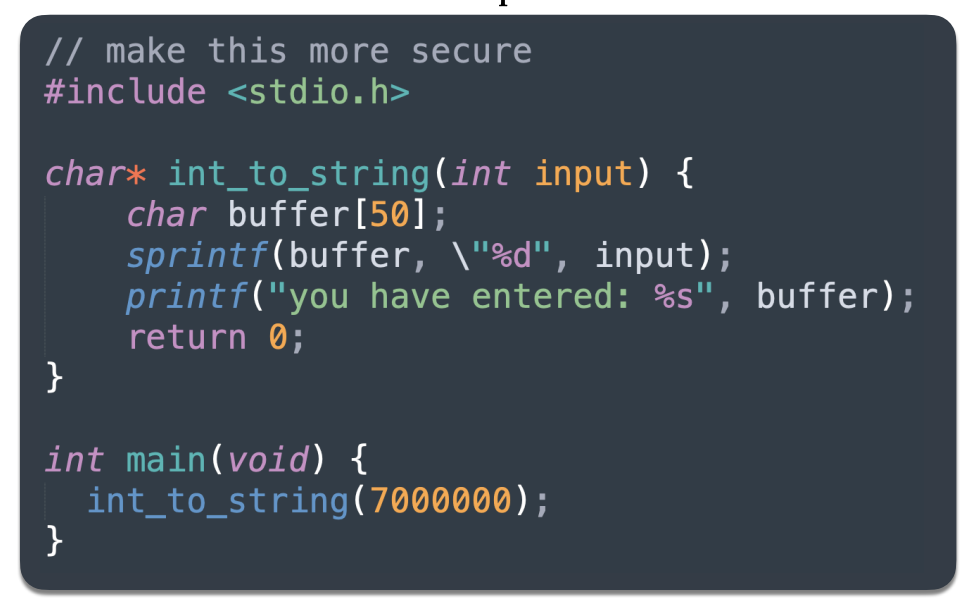

Do Users Write More Insecure Code with AI Assistants?

Contact: meghas@stanford.edu

Workshop: Challenges in Deployable Generative AI Workshop

Links: Paper

Keywords: generative ai, code generation, human-ai interaction

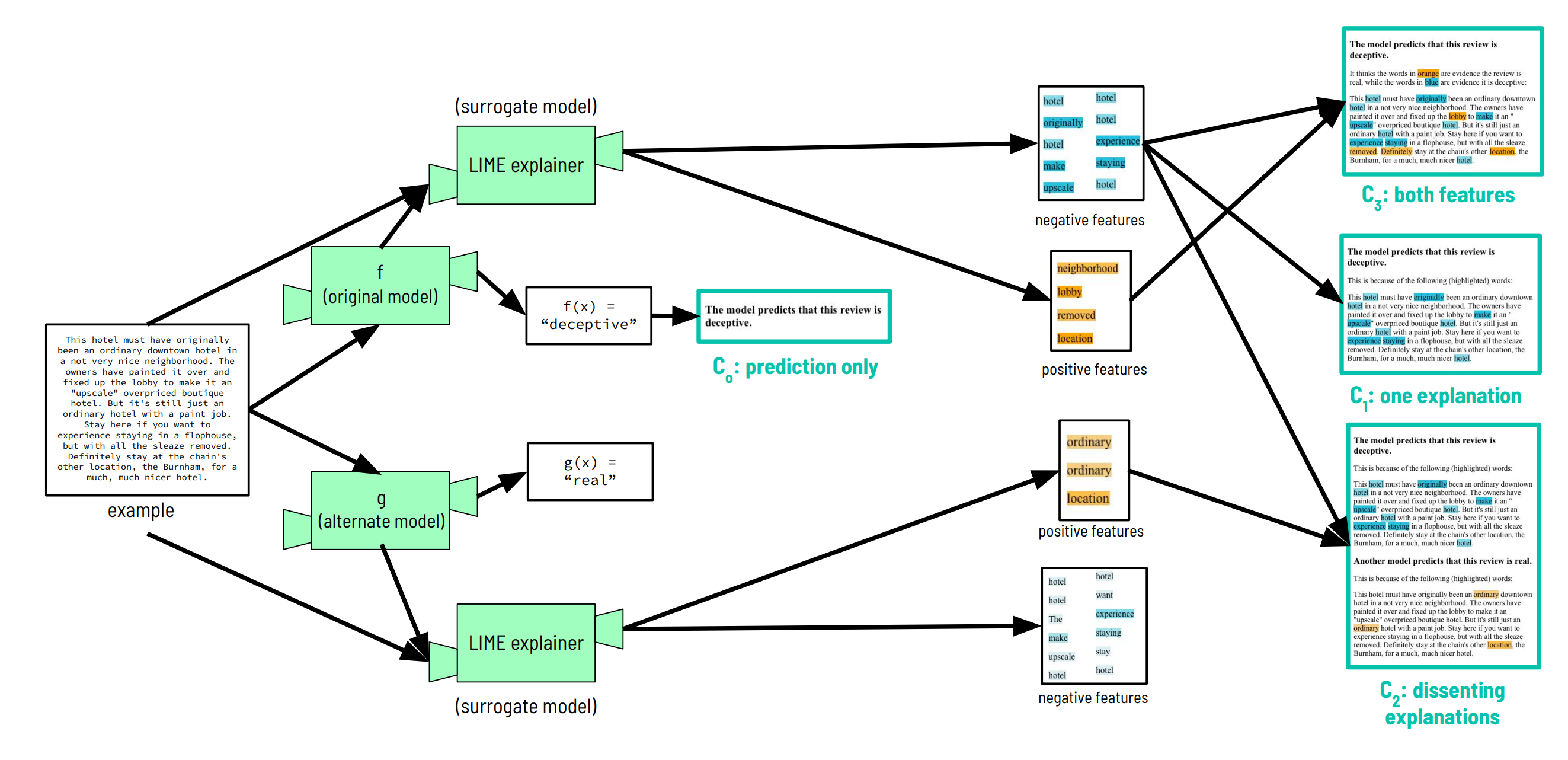

Dissenting Explanations: Leveraging Disagreement to Reduce Model Overreliance

Contact: jhshen@stanford.edu

Workshop: HCI & AI Workshop

Links: Paper

Keywords: explainability, model multiplicity

PRODIGY: Enabling In-context Learning Over Graphs

Contact: qhwang@stanford.edu

Workshop: ICML 2023 Workshop on Structured Probabilistic Inference & Generative Modeling

Links: Paper | Website

Keywords: graph ml, in-context learning, pretraining

Thomas: Learning to Explore Human Preference via Probabilistic Reward Model

Contact: sttruong@cs.stanford.edu

Workshop: ICML 2023 Workshop: The Many Facets of Preference-Based Learning

Keywords: preference learning, active learning, bayesian neural networks, thompson sampling

Beyond Scale: the Diversity Coefficient as a Data Quality Metric Demonstrates LLMs are Pre-trained on Formally Diverse Data

Contact: brando9@stanford.edu

Workshop: ICML data centric workshop

Links: Paper

Keywords: data centric, diversity, llm, foundation model

We look forward to seeing you at ICML!