SIGGRAPH 2014

Hao Su¹

Qixing Huang¹

Niloy J. Mitra²

Yangyan Li¹

Leonidas Guibas¹

¹Stanford University, USA

²University College London, UK

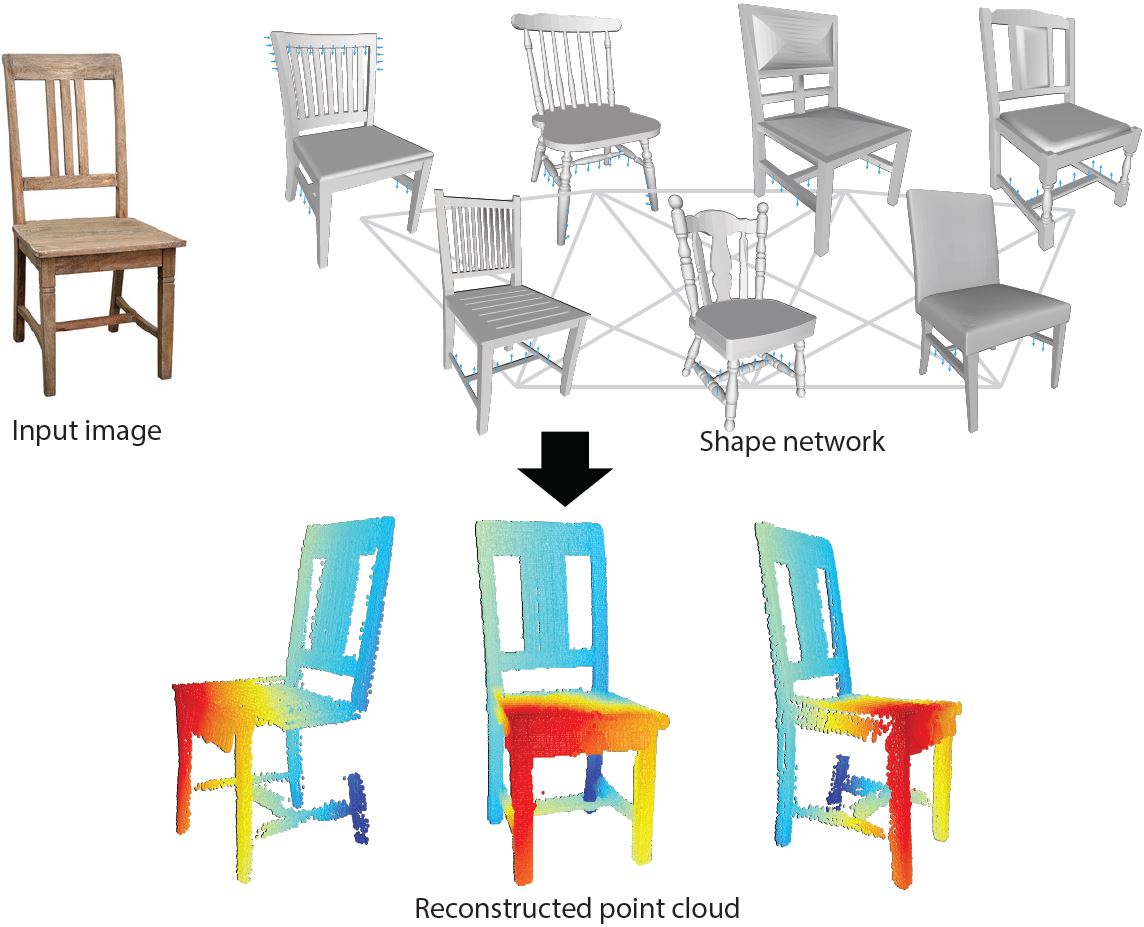

Figure 1: We attribute a single 2D image of an object (upper left) with depth by transporting information from a 3D shape deformation subspace learned by analyzing a network of related but different shapes (upper right). For visualization, we color code the estimated depth with values increasing from red to blue (bottom).

Video

Abstract

Images, while easy to acquire, view, publish, and share, lack critical depth information. This poses a serious bottleneck for many image manipulation, editing, and retrieval tasks. In this paper we consider the problem of adding back depth to an image of an object, effectively “lifting” it back to 3D, by exploiting a collection of aligned 3D models of related object shapes. Our key insight is that, even when the imaged object is not contained in the shape collection, the network of shapes implicitly characterizes a shape-specific deformation subspace that regularizes the problem and enables robust diffusion of depth information from the shape collection to the input image. We evaluate our fully automatic approach on diverse and challenging input images, validate the results against Kinect depth readings (when available), and demonstrate several smart image applications including depth-enhanced image editing and image relighting.

Paper

Pipeline

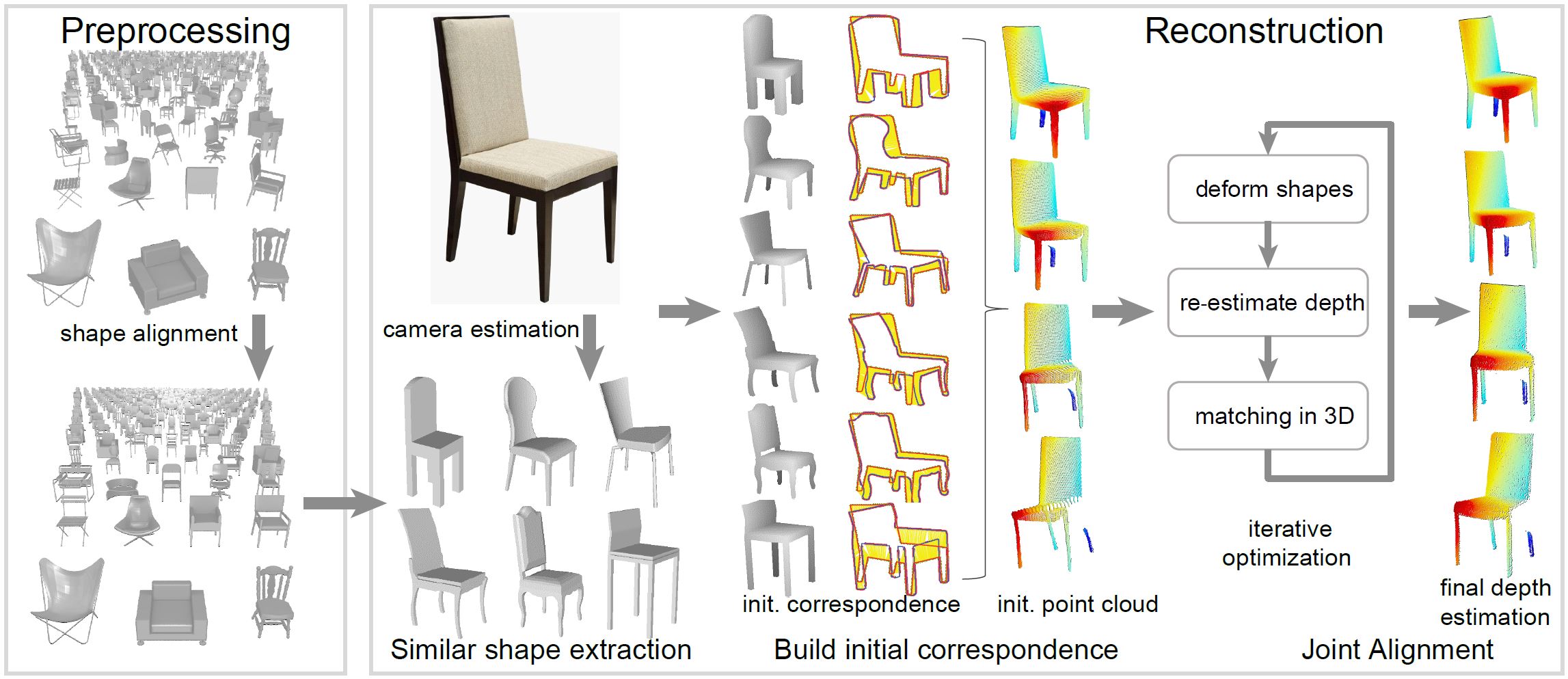

Figure 2: We reconstruct a 3D point cloud from an image object by utilizing a collection of related shapes. In the preprocessing stage, we jointly align the input shape collection and learn structure-preserving deformation models for the shapes. Then, in the reconstruction stage, we lift a single image to 3D in three steps. The first step initializes the camera pose in the coordinate system associated with the aligned shapes and extracts a set of similar shapes. The second step performs image-image matching to build dense correspondences between the image object and the similar shapes, and generates an initial 3D point cloud. The final step jointly aligns the initial point cloud and the selected shapes by simultaneously optimizing the depth of each image pixel, the camera pose, and the shape deformations.
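To make the idea of lifting per-pixel depth to a 3D point cloud concrete, here is a minimal sketch in plain Python. It assumes a simple pinhole camera; the function name `backproject` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions, not the camera model or code used in the paper, and the joint optimization of depth, pose, and deformation is not shown.

```python
def backproject(depth, fx, fy, cx, cy):
    """Lift a depth map (list of rows) to 3D points under an assumed pinhole camera.

    depth[v][u] is the depth at pixel column u, row v.
    Pixels whose depth is None are treated as background and skipped.
    fx, fy, cx, cy are hypothetical intrinsics (focal lengths, principal point).
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None:
                continue
            # Invert the pinhole projection u = fx * x / z + cx, v = fy * y / z + cy.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny example: a 2x2 depth map with two foreground pixels.
cloud = backproject([[2.0, None], [None, 4.0]], fx=2.0, fy=2.0, cx=0.0, cy=0.0)
```

Each foreground pixel contributes exactly one point, so the cloud is only as dense as the object mask in the image.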

Results

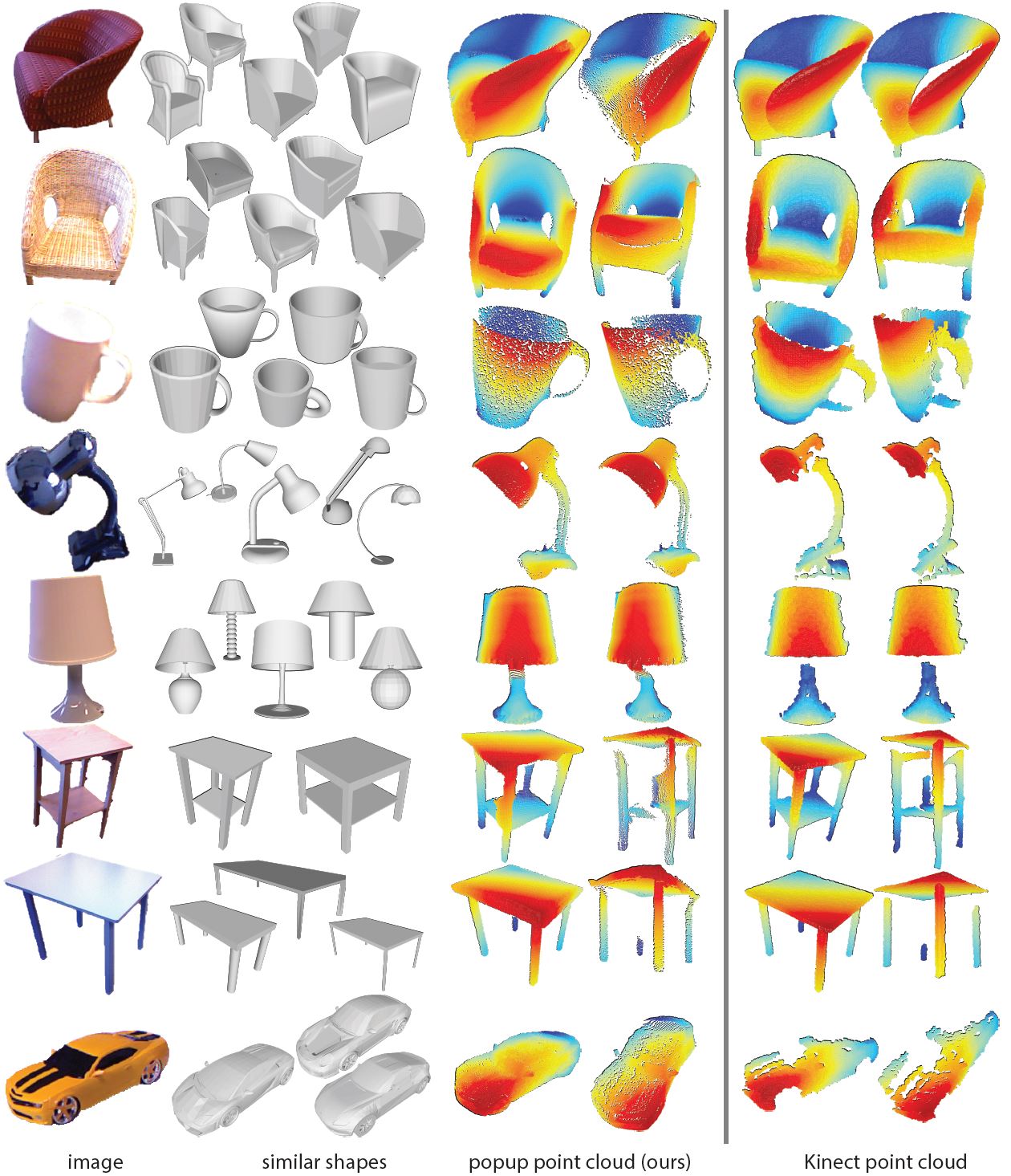

Figure 3: Representative point cloud reconstruction results. We have evaluated our approach on five categories of objects. This figure shows representative results in each category. For every object we show the input 2D image, the extracted similar shapes, the reconstructed point cloud and finally the ground truth Kinect scan.

Figure 4: Relighting. Using the inferred depth information, we can build a normal map and simulate different lighting conditions. The leftmost column shows the input image; the three columns on the right show the simulated illumination. A directional light source moves from left to right (top row) or from top to bottom (bottom row).
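As a rough illustration of how a normal map can be derived from depth and used for relighting, the sketch below estimates normals with finite differences and applies Lambertian shading. The finite-difference scheme, the Lambertian model, and the function names are assumptions made for illustration; the page does not state which normal estimation or shading model was used.

```python
import math


def normals_from_depth(depth):
    """Estimate unit normals of a dense depth map with finite differences.

    Assumed convention: depth grows away from the viewer, so a normal that
    faces the viewer is proportional to (dz/du, dz/dv, -1).
    """
    h, w = len(depth), len(depth[0])
    normals = []
    for v in range(h):
        row = []
        for u in range(w):
            # Central differences, falling back to one-sided at the border.
            u0, u1 = max(u - 1, 0), min(u + 1, w - 1)
            v0, v1 = max(v - 1, 0), min(v + 1, h - 1)
            dzdu = (depth[v][u1] - depth[v][u0]) / max(u1 - u0, 1)
            dzdv = (depth[v1][u] - depth[v0][u]) / max(v1 - v0, 1)
            n = (dzdu, dzdv, -1.0)
            length = math.sqrt(sum(c * c for c in n))
            row.append(tuple(c / length for c in n))
        normals.append(row)
    return normals


def shade(normals, light):
    """Lambertian shading: max(0, n . l) for a directional light.

    `light` points from the surface toward the light source.
    """
    length = math.sqrt(sum(c * c for c in light))
    l = tuple(c / length for c in light)
    return [[max(0.0, sum(a * b for a, b in zip(n, l))) for n in row]
            for row in normals]


# Sweep a directional light from left to right over a small tilted plane.
ramp = [[float(u) for u in range(4)] for _ in range(3)]
ramp_normals = normals_from_depth(ramp)
frames = [shade(ramp_normals, (lx, 0.0, -1.0)) for lx in (-1.0, 0.0, 1.0)]
```

Multiplying each shading value by the pixel's color (or an estimated albedo) would give a relit image; that step and any shadowing are left out here.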

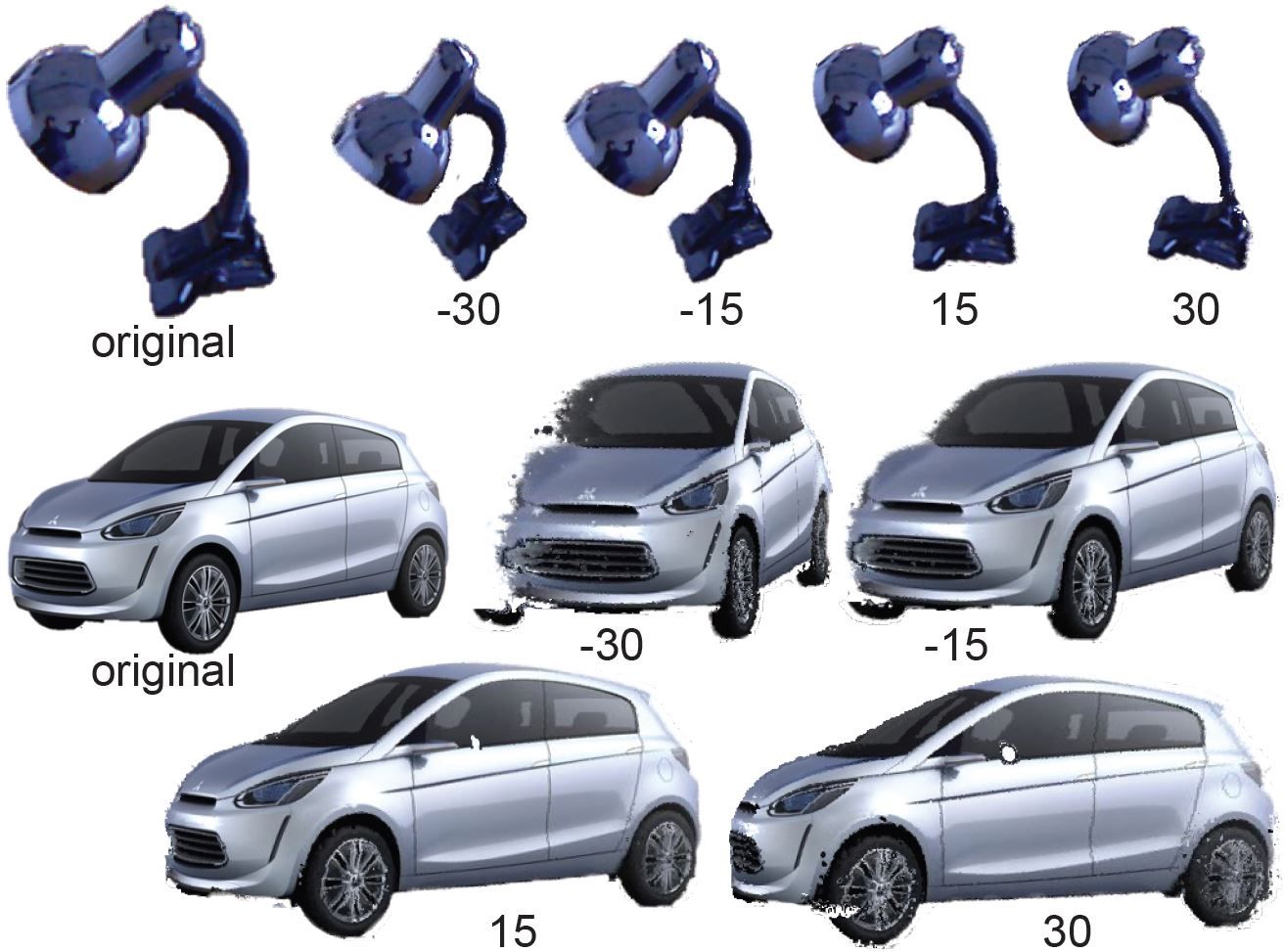

Figure 5: Novel View Synthesis. Images simulated by rotating the camera around the y-axis.
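Rotating the camera around the y-axis is equivalent to rotating the reconstructed points the opposite way about a vertical axis through the object. The sketch below shows that rotation followed by a pinhole projection; the pivot choice, the intrinsics, and the function names are assumptions for illustration, and rendering details such as z-buffering and hole filling are omitted.

```python
import math


def rotate_y(points, angle_deg, pivot=(0.0, 0.0, 0.0)):
    """Rotate 3D points by angle_deg about a y-parallel axis through `pivot`."""
    t = math.radians(angle_deg)
    c, s = math.cos(t), math.sin(t)
    px, _, pz = pivot
    rotated = []
    for x, y, z in points:
        dx, dz = x - px, z - pz
        # Standard rotation about the y-axis; y is unchanged.
        rotated.append((c * dx + s * dz + px, y, -s * dx + c * dz + pz))
    return rotated


def project(points, fx, fy, cx, cy):
    """Project points in front of the camera (z > 0) with assumed intrinsics."""
    return [(fx * x / z + cx, fy * y / z + cy) for x, y, z in points if z > 0]


# Rotate a small cloud about a vertical axis through its centroid,
# then project it to get pixel positions in the novel view.
cloud = [(-0.5, 0.0, 4.0), (0.5, 0.0, 4.0), (0.0, 0.5, 4.5)]
centroid = tuple(sum(p[i] for p in cloud) / len(cloud) for i in range(3))
novel_view = project(rotate_y(cloud, 20.0, pivot=centroid), 500.0, 500.0, 320.0, 240.0)
```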

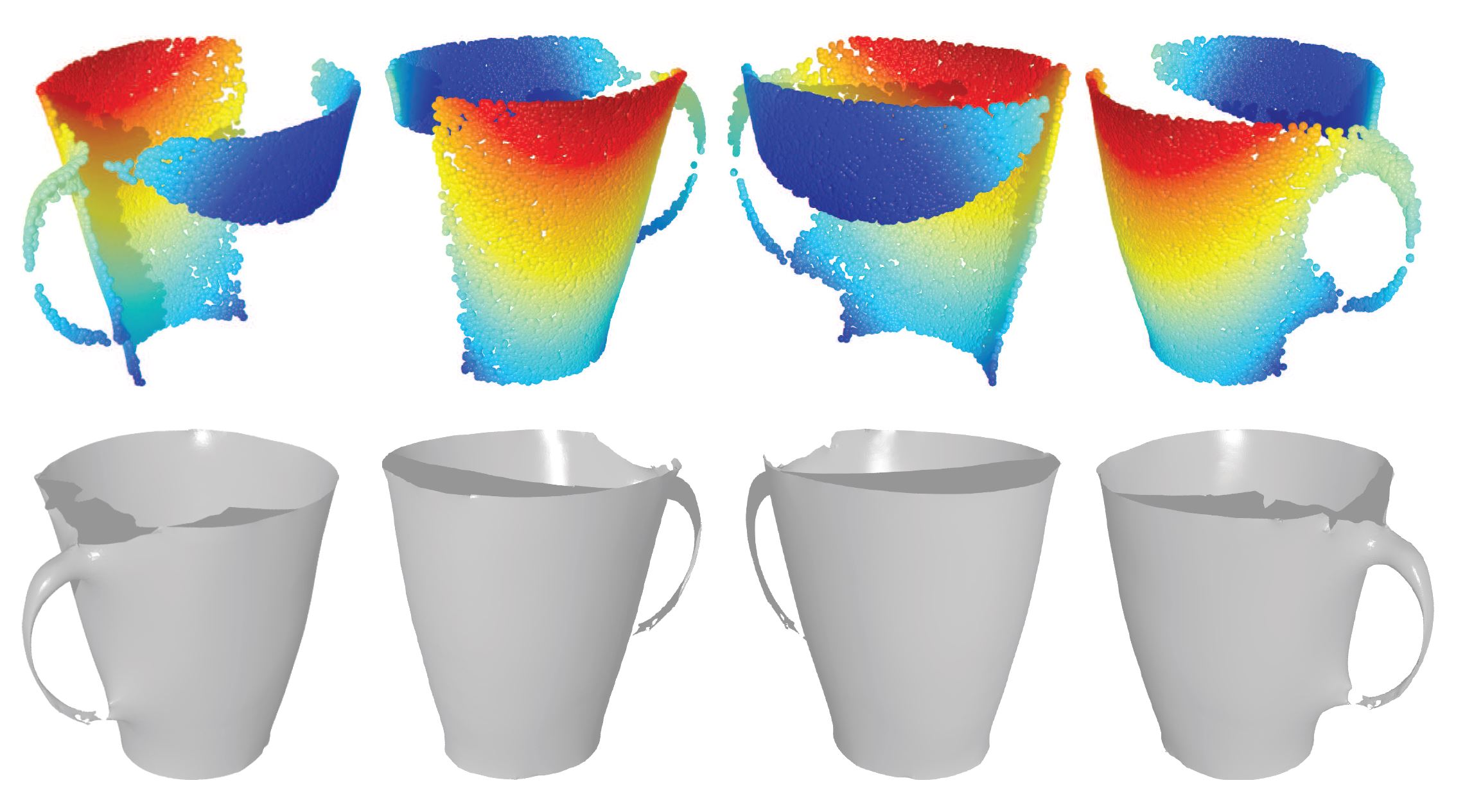

Figure 6: Symmetry-based Completion and Surface Reconstruction. Top: point clouds viewed from different angles. Bottom: surface reconstruction results with symmetry-based completion. The original image is from the third row of Figure 3.
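A simple way to picture symmetry-based completion is to mirror the visible points across a reflection plane and merge the two sets. The sketch below assumes the symmetry plane is already known (given by a normal and an offset); how the plane is obtained, and the surface reconstruction step itself, are not described on this page and are not shown. The function names are illustrative.

```python
def reflect(points, normal, offset=0.0):
    """Mirror points across the plane {p : n . p = offset}.

    `normal` need not be unit length; it is normalized here.
    """
    nx, ny, nz = normal
    length = (nx * nx + ny * ny + nz * nz) ** 0.5
    nx, ny, nz = nx / length, ny / length, nz / length
    mirrored = []
    for x, y, z in points:
        # Signed distance from the point to the plane.
        dist = nx * x + ny * y + nz * z - offset
        mirrored.append((x - 2 * dist * nx, y - 2 * dist * ny, z - 2 * dist * nz))
    return mirrored


def complete_by_symmetry(points, normal, offset=0.0):
    """Return the original points followed by their mirror images."""
    return list(points) + reflect(points, normal, offset)


# Example: complete a partial cloud assuming left-right symmetry about x = 0.
partial = [(1.0, 2.0, 3.0), (0.5, 0.0, 1.0)]
completed = complete_by_symmetry(partial, normal=(1.0, 0.0, 0.0))
```

Points lying on the plane are duplicated by this naive merge; a practical version would likely deduplicate nearby points before surface reconstruction.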

Code and Data

For the code of the model retrieval module, please refer to Joint Embedding (my SIGGRAPH Asia 2015 paper); for the viewpoint estimation module, please refer to the RenderForCNN project (my ICCV 2015 paper).

For the data, please refer to the ShapeNet project.