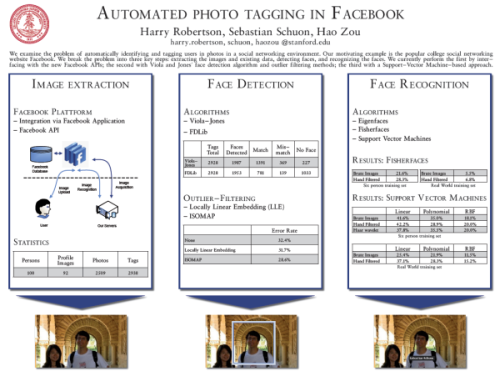

Among other features, the Web 2.0 community Facebook offers the possibility to upload images to photo albums. People in these pictures can be tagged, meaning that their position in the image is marked. Currently, users have to do this manually, which is a cumbersome task.

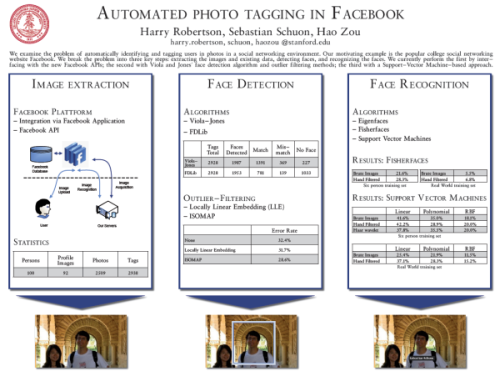

We investigated whether this task could be performed by a computer. The presented automatic facial tagging system is split into three subsystems: obtaining image data from Facebook, detecting faces in the images, and recognizing the faces to match them to individuals. First, image data is extracted from Facebook by interfacing with the Facebook API. Second, the Viola-Jones algorithm is used to locate and detect faces in the obtained images. In addition, an attempt is made to filter false positives from the face set using LLE and Isomap. Finally, facial recognition (using Fisherfaces and SVM) is performed on the resulting face set. This allows us to match faces to people, and therefore to tag users in images on Facebook. The proposed system achieves a recognition accuracy of close to 40%, hence rendering such systems feasible for real-world usage.
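The Viola-Jones detector evaluates Haar-like rectangle features in constant time by first computing an integral image (summed-area table). The snippet below is a minimal plain-Python sketch of that building block only, under the assumption of a grayscale image given as a list of rows. It is not the code used in this project, the function names are hypothetical, and the rest of the detector (AdaBoost feature selection and the attention cascade) is omitted.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> Image:
    """Build a summed-area table padded with a zero row and column,
    so that ii[y][x] equals the sum of img[r][c] for all r < y and c < x."""
    h = len(img)
    w = len(img[0]) if h else 0
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0  # running sum of img[y][0..x]
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle whose top-left pixel is (x, y),
    computed with four table lookups regardless of rectangle size."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Hypothetical horizontal two-rectangle Haar-like feature:
    sum of the left half minus sum of the right half (w must be even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


if __name__ == "__main__":
    img = [[1, 2, 3],
           [4, 5, 6],
           [7, 8, 9]]
    ii = integral_image(img)
    print(rect_sum(ii, 0, 0, 3, 3))          # whole image: 45
    print(rect_sum(ii, 1, 1, 2, 2))          # 5 + 6 + 8 + 9 = 28
    print(two_rect_feature(ii, 0, 0, 2, 3))  # (1+4+7) - (2+5+8) = -3
```

Because every rectangle sum costs four lookups, a detector can evaluate thousands of such features per sub-window cheaply, which is what makes scanning whole photo albums practical.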

Download

project report (with Harry Robertson, Hao Zou)

Cite as:

@ARTICLE{schuon_fb07,

title={Automated Photo Tagging in Facebook},

author={Schuon, Sebastian and Robertson, Harry and Zou, Hao},

journal={Stanford CS229 Fall 2007 Project Report},

year={2007}}

The previous work on motion deblurring, which compares several methods on real captured images with well-known properties, has recently been accepted as a journal paper. Even though the field has made significant progress since the paper was written, the paper contains useful reference data that others might be interested in working with. The data are available on the

The Magneto-Optic Kerr Effect can be used to visualize the magnetic field on surfaces. During my time at the