The Conference on Robot Learning (CoRL) 2020 is being hosted virtually from November 16th to November 18th. We’re excited to share all the work from SAIL that’s being presented, and you’ll find links to papers, videos, and blog posts below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

Learning 3D Dynamic Scene Representations for Robot Manipulation

Contact: jiajunwu@cs.stanford.edu

Links: Paper | Video | Website

Keywords: scene representations, 3d perception, robot manipulation

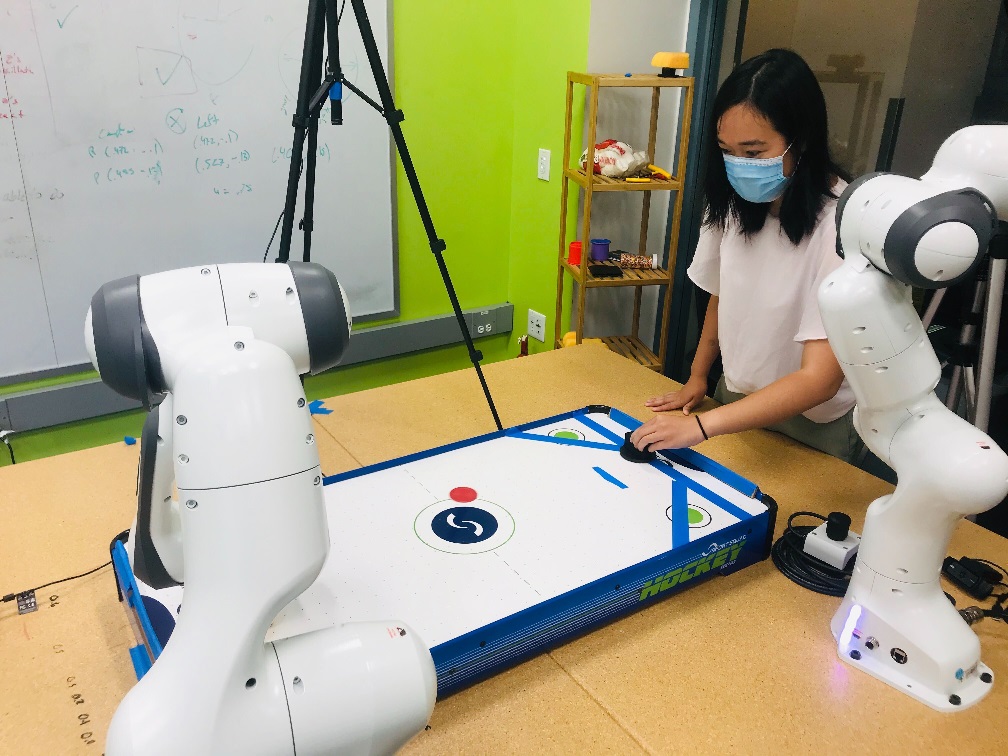

Learning Latent Representations to Influence Multi-Agent Interaction

Contact: anniexie@stanford.edu

Links: Paper | Blog Post | Website

Keywords: multi-agent systems, human-robot interaction, reinforcement learning

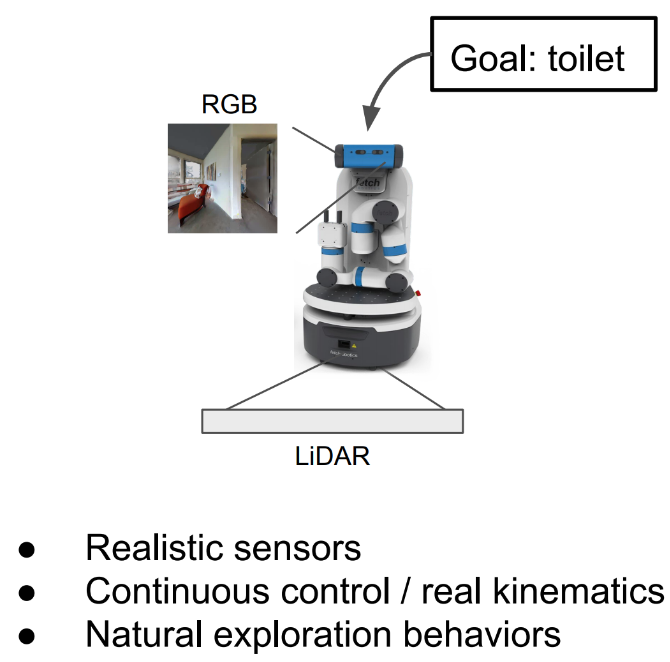

Learning Object-conditioned Exploration using Distributed Soft Actor Critic

Contact: ayzaan@google.com

Links: Paper

Keywords: object navigation, visual navigation

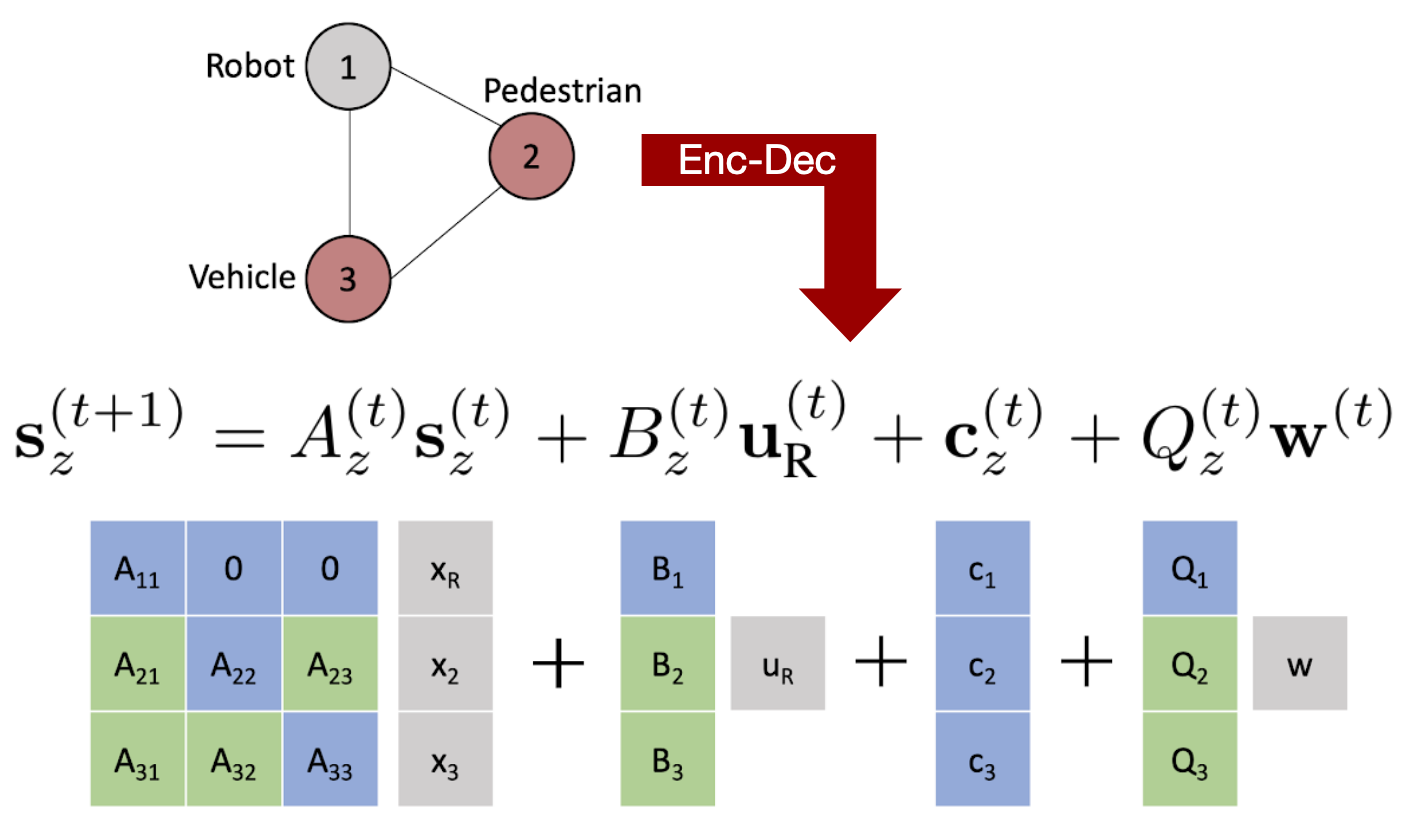

MATS: An Interpretable Trajectory Forecasting Representation for Planning and Control

Contact: borisi@stanford.edu

Links: Paper | Video

Keywords: trajectory forecasting, learning dynamical systems, motion planning, autonomous vehicles

Model-based Reinforcement Learning for Decentralized Multiagent Rendezvous

Contact: rewang@stanford.edu

Links: Paper | Video | Website

Keywords: multiagent systems, model-based reinforcement learning

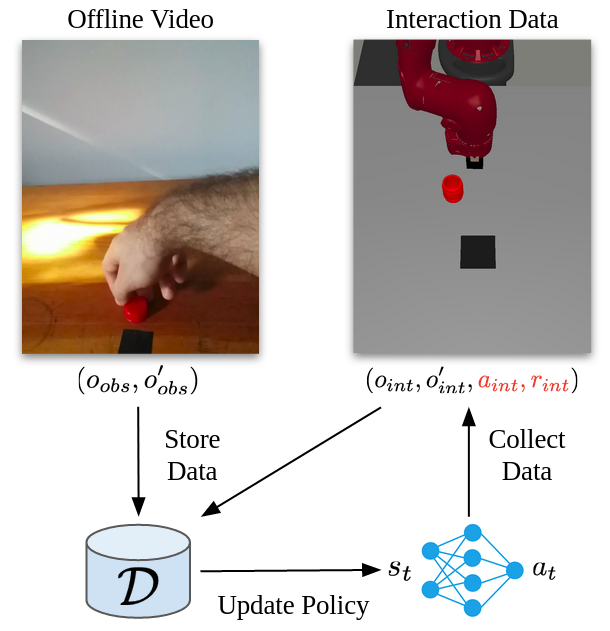

Reinforcement Learning with Videos: Combining Offline Observations with Interaction

Contact: karls@seas.upenn.edu

Links: Paper | Website

Keywords: reinforcement learning, learning from observation

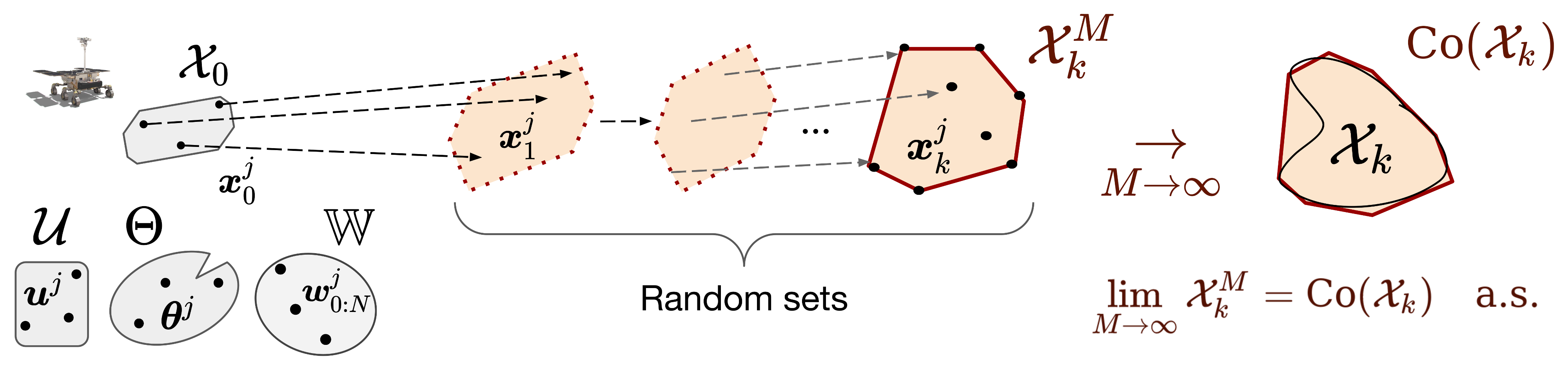

Sampling-based Reachability Analysis: A Random Set Theory Approach with Adversarial Sampling

Contact: thomas.lew@stanford.edu

Links: Paper

Keywords: reachability analysis, robust planning and control, neural networks

Keynote

Walking the Boundary of Learning and Interaction (Dorsa Sadigh)

Overview: There have been significant advances in the field of robot learning in the past decade. However, many challenges remain when considering how robot learning can advance interactive agents such as robots that collaborate with humans. These agents include autonomous vehicles that interact with human-driven vehicles or pedestrians, service robots collaborating with their users at home over short or long periods of time, and assistive robots helping patients with disabilities. These settings introduce an opportunity for developing new robot learning algorithms that can help advance interactive autonomy.

In this talk, I will discuss a formalism for human-robot interaction built upon ideas from representation learning. Specifically, I will first discuss the notion of latent strategies: low-dimensional representations sufficient for capturing non-stationary interactions. I will then talk about the challenges of learning such representations when interacting with humans, and how we can develop data-efficient techniques that enable actively learning computational models of human behavior from demonstrations, preferences, or physical corrections. Finally, I will introduce an intuitive control paradigm that enables seamless collaboration based on learned representations, and discuss how it can be used to influence humans.

Live Event: November 17th, 7:00AM - 7:45AM PST

We look forward to seeing you at CoRL!