Disclaimer: this is an opinion piece that represents the views of its authors, and not all of SAIL. We would like to thank SAIL professors Fei-Fei Li, Christopher Manning, and Dorsa Sadigh for providing invaluable comments and feedback on this post.

What are the ethical responsibilities of AI researchers? Or to put it in more pragmatic terms, what are best practices AI researchers can follow to avoid unintended consequences of their research?

Despite the meteoric rise of AI research over the past decade, our research community still does not regularly and openly discuss questions of ethics and responsibility. Every researcher learns a set of best practices for doing impactful research (research published at conferences and in journals), but not all of us are asked or expected to develop best practices for ethical research that prevents potential misuse. This is already or increasingly the norm for other influential disciplines such as law, medicine, and engineering, and we believe it should be normalized in the education and research of AI as well.

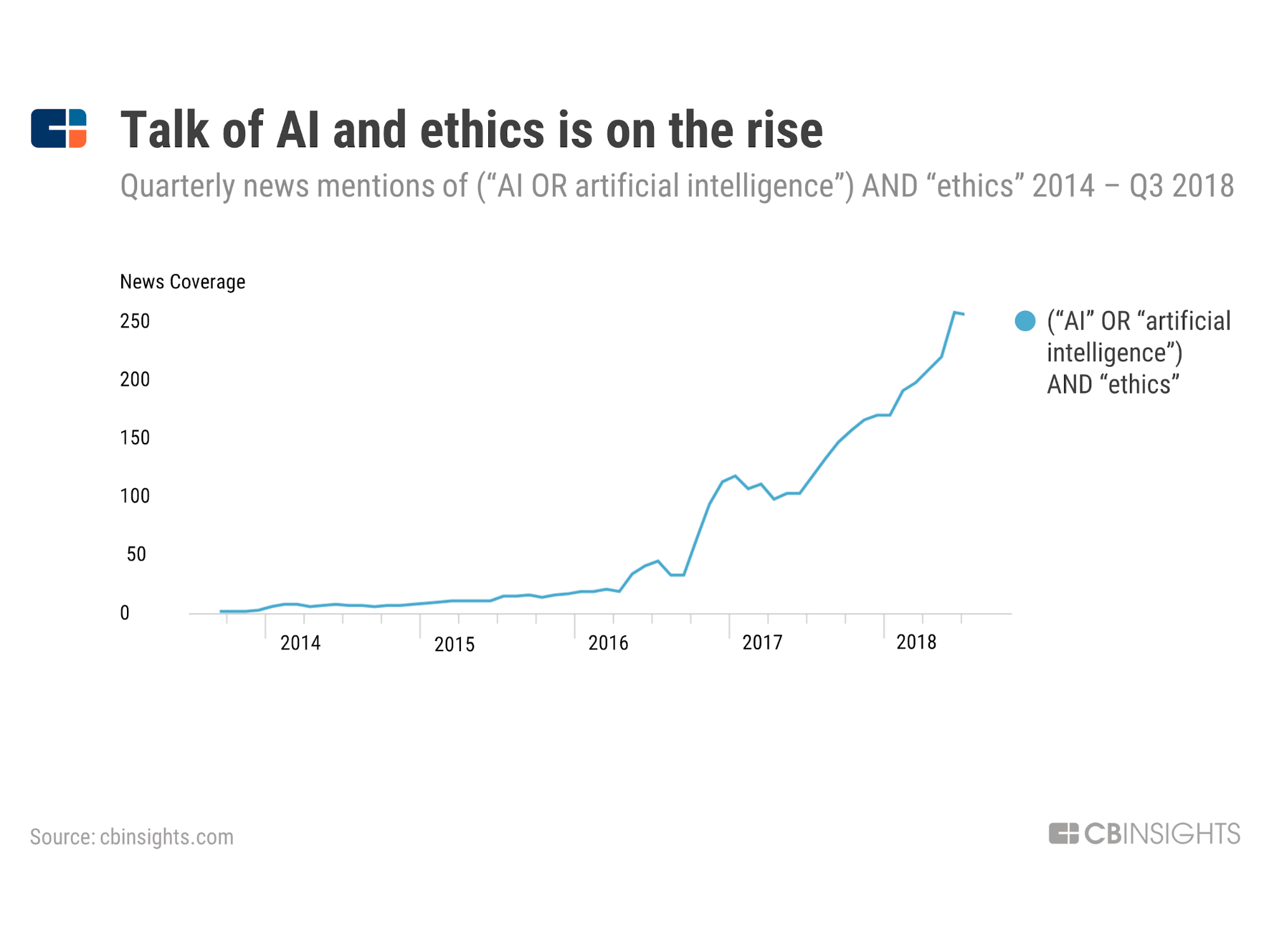

Evidence of growing concern about ethics and AI. Source: cbiinsights.com

Yes, the topic has received growing attention, particularly within the subfield of research focused on Fairness, Accountability, and Transparency for AI (see FAT ML and FAT*). However, as PhD students working in AI, we have never been explicitly instructed or even incentivized to speak openly and enthusiastically about this topic. This, despite a documented growing concern about AI and ethics in the general public (see above).

This needs to change. Academic AI researchers are now routinely having an impact in industry, journalists are now increasingly turning to researchers for quotes, and people in general can agree that AI as a field has never had as much influence on society as it does today. At the very least, all AI researchers and engineers should be aware of the sorts of ethical hypotheticals and contingencies they may encounter in their work and how to respond to them.

In this piece, we intend to promote several best practices that we think AI researchers and engineers should be aware of. We focus primarily on what researchers can do to avoid unintended negative consequences of their work, and do not go into as much depth on the topics of what those negative consequences can be or how to deal with intentionally bad actors. The ideas we promote are not new (in fact, most of them are ideas suggested by prominent researchers who we shall credit), nor are they comprehensive, but they are practices we would like to highlight as a beginning to a larger discussion on this topic. Our hope is to promote awareness of these concepts and to inspire other researchers to join this discussion.

Education

If you have read this far into the article, you hopefully agree that it is reasonable to expect researchers to think about the broader implications of their research. However, perhaps you do not know where to begin to embark on this seemingly daunting task. Fortunately, the first step you can take, as all of us should who care about this issue, is rather straightforward - to become more informed about the ethical concerns of AI, at least in your subfield.

Practice: Familiarize yourself with the basics of AI ethics

The legal and policy communities have thought about the concerns of AI as extensively as the technical community has thought about its development. Even a cursory search on the web will yield some thought-provoking and well-researched works:

- Machine learning researchers and practitioners may find it insightful to ponder on the opacity of machine learning algorithms (even more relevant in the present age of learning with deep neural networks).

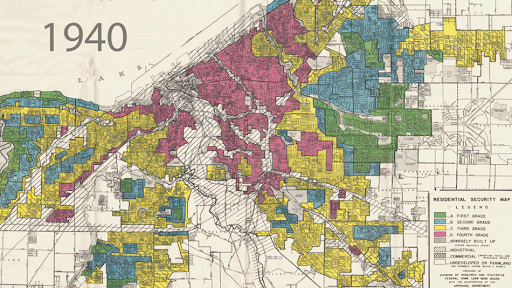

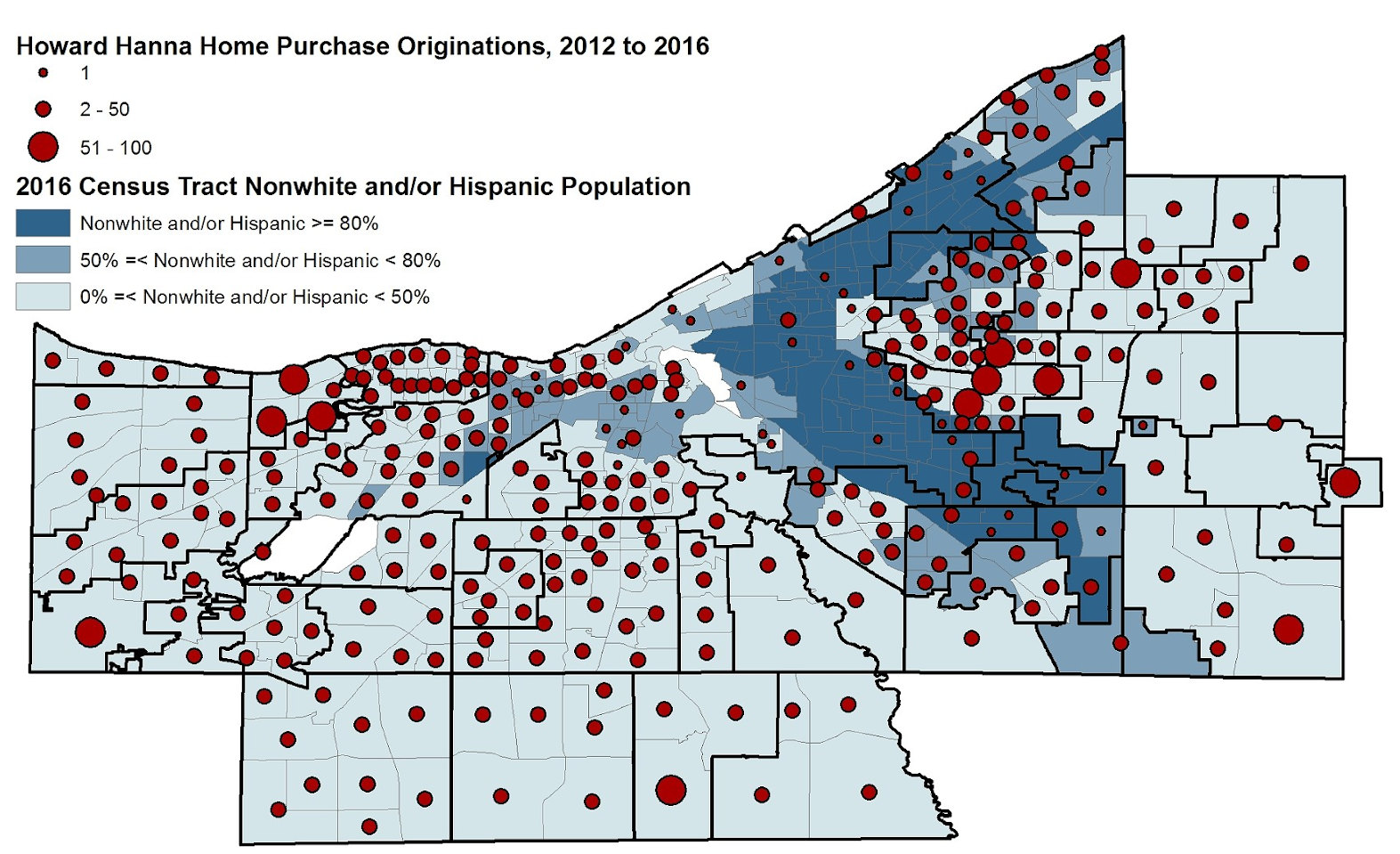

- For those working in big data analysis, many of the ethical issues, particularly those stemming from disparate impact, have been outlined in a recent paper in Big Data & Society as well as in the report “Unfairness By Algorithm: Distilling the Harms of Automated Decision-Making” by the Future of Privacy Forum.

- Researchers in robotics, i.e. embodied AI systems, can start with a discussion on the issues of robot ethics in a mechanized world.

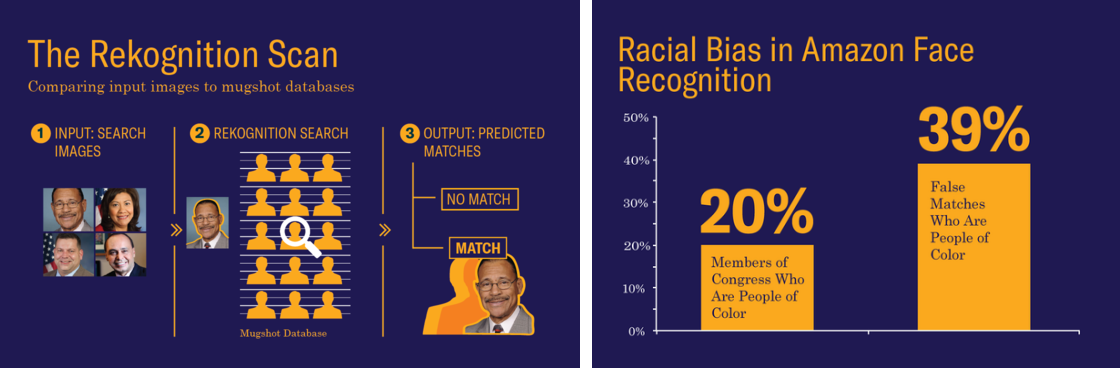

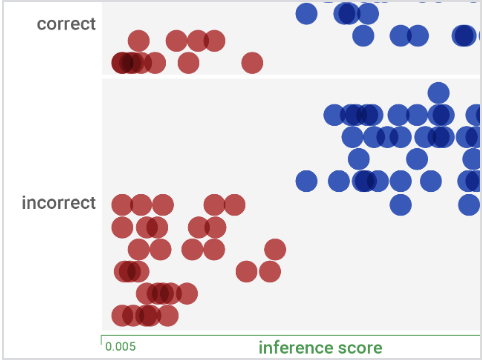

The biased results found by the ACLU: people of color accounted for a disproportionate 39% of the members of Congress who were incorrectly classified as criminals by facial recognition technology from Amazon. (source)

- Facial recognition systems, a prominent application of computer vision, have a host of related ethical concerns. The issue of emotional privacy while decoding facial pain expressions is also relevant. This broader survey highlights a range of additional ethical challenges for computer vision.

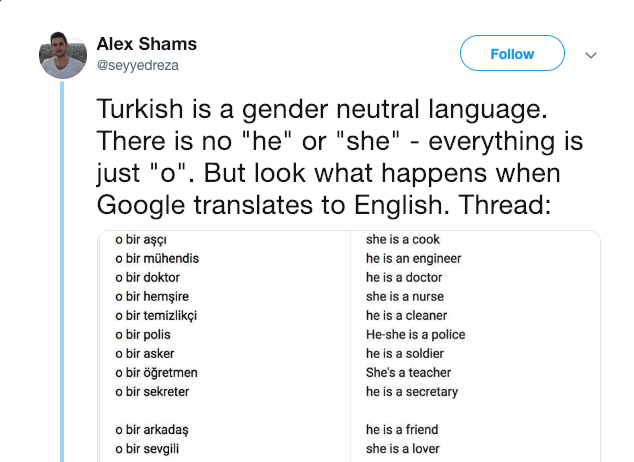

- For those who think about Natural Language Processing, it would be instructive to become familiar with some of the significant social impacts of NLP, such as demographic misrepresentation, reinforcing linguistic biases, and topic overexposure and underexposure. Additionally, the paper “Ethical by Design: Ethics Best Practices for Natural Language Processing” is directly relevant to the topic of the present article.

- More broadly, many AI algorithms and developments are undeniably ‘dual-use’ technologies (technologies which are designed for civilian purposes but which may have military applications, or more broadly designed for certain beneficial uses but can be abused for negative impacts). This concept is far from new and discussion over how to deal with it has long been established in fields such as software security, and given its relevance to AI it is important that we are aware of it as well.

These are just a few useful starting points to demonstrate that AI researchers can (and we think, should) actively educate themselves on these topics. For a more comprehensive overview, Eirini Malliaraki has compiled a list of books and articles to get you up to speed on many of the relevant subjects (such as algorithmic transparency, data bias, and the social impact of AI). The list is long and may appear intimidating, but we recommend picking a few topics that are directly or even indirectly related to your own research and adding them to your reading list. A number of recent papers also review a large amount of this material, and are longer reads worth looking at:

- AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations

- 50 Years of Test (Un)fairness: Lessons for Machine Learning

- The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation

- Perspectives on Issues in AI Governance

- Thinking About Risks From AI: Accidents, Misuse and Structure

In addition to individual research works, there is a growing amount of institutional interest. Rachel Thomas, herself a prominent AI and AI ethics researcher, has compiled a list of researchers and research institutes working on fairness and ethics in AI. If you are at a university, or live close to one, it might be worthwhile to take or audit a course on ethics in AI. Casey Fiesler has crowdsourced over 200 courses on technology and ethics in universities all around the world, along with their class syllabi for reference. Besides classes, there are also easy-to-digest online compilations of information, such as Ethics in Technology Practice from the Markkula Center for Applied Ethics at Santa Clara University.

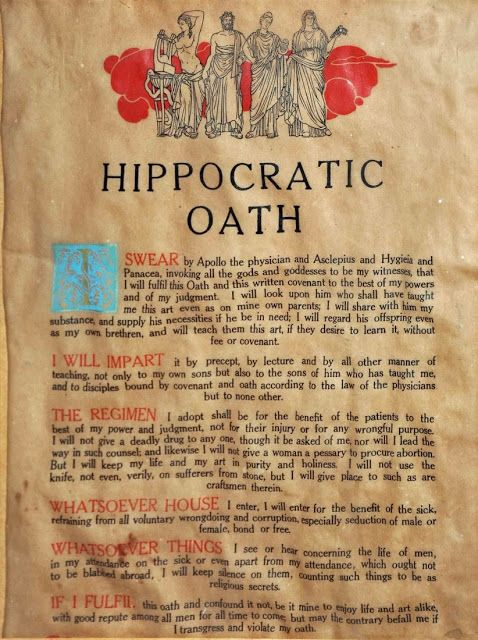

Practice: Codes of ethics and pledges

The vast amount of information online can sometimes feel unwieldy to the uninitiated. Luckily, AI is far from the first context in which academics interested in knowledge have had to deal with ethical questions. Nor is the need to think about ethical questions new to AI itself. Therefore, a number of distilled codes of ethics exist that concisely summarize the key points one should keep in mind:

- CS professionals

Though not specific to AI, the codes of ethics of both IEEE and ACM are quick to read and entirely relevant. Many of the principles in these codes, such as being honest or not taking bribes, represent common sense. But, whenever you may be in doubt as to the ethical nature of possible research actions, it is a good idea to review them.

Attendees at the Beneficial AI Conference 2017, which led to the Asilomar AI Principles.

- AI Researchers

Of course, academic research in AI has a set of issues and concerns unique to it that these general codes of ethics for technology and computing professionals may not address. Fortunately, substantial effort has been put into addressing this area as well. In particular, “ETHICALLY ALIGNED DESIGN - a Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems” by the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems and the ASILOMAR AI Principles by the Future of Life Institute both provide concrete recommendations for AI researchers. Last but not least, researchers should be familiar with the codes of conduct at their universities and at the professional events they attend. Not all AI conferences have explicit codes of conduct, so a good baseline to be aware of is the NeurIPS 2018 code of conduct. Likewise, the NeurIPS-affiliated ML Ally pledge is worth reading and thinking about.

- AI Influencers

Many researchers may also have the potential to have an impact beyond academia, such as in policy or industry. In addition to the prior recommendations, the Montréal Declaration for Responsible AI and the AI4People list of Principles and Recommendations offer an excellent overview of things to consider when developing AI in general. There are also the more specific Lethal Autonomous Weapons Pledge, the Campaign to Stop Killer Robots, and the Safe Face Pledge, which are likewise very relevant to anyone involved in the research and development of AI technology. Specific companies and research labs (such as Google, OpenAI, DeepMind, Salesforce, and IBM) have also begun to specify their principles, and when considering joining these institutions it is worth reviewing these documents.

Communication & Distribution

As far as we have seen, the potential misuses and ethical considerations of new AI algorithms and products are rarely identified and pointed out in documentation or academic papers. A prominent practical example is that Amazon’s documentation for its Rekognition product did not have warnings on changing the default parameters of the product for law enforcement use cases until after the ACLU pointed out that the product could be misused to classify US senators as criminals.

Perhaps even more importantly, researchers do not just communicate ideas with their papers – they also distribute code, data, and models to the wider AI society. As the capabilities of AI systems continue to become stronger, considerations of dual-use will have to prompt us to develop a set of new best practices with regards to distribution, some of which we discuss here.

Practice: Ethical Consideration Sections

A novel and impactful practice researchers can undertake now is to include a section on Ethical Considerations in their papers, something that machine learning researchers in the Fairness, Accountability and Transparency sub-community have already started to do. A prominent example is Margaret Mitchell, a Senior Research Scientist at Google AI and Tech Lead of Google’s ML fairness effort, who has included an ethical considerations section in several of her recent papers. For instance, her 2017 paper on predicting imminent suicide risk in a clinical care scenario using patient writings flagged the potential for abuse of the research by singling out people, which the authors addressed by anonymizing the data. Clearly, this is particularly relevant for research with potential for dual-use. Her blog post provides even more details.

An example of an ethical considerations section (from Margaret Mitchell’s blog post)

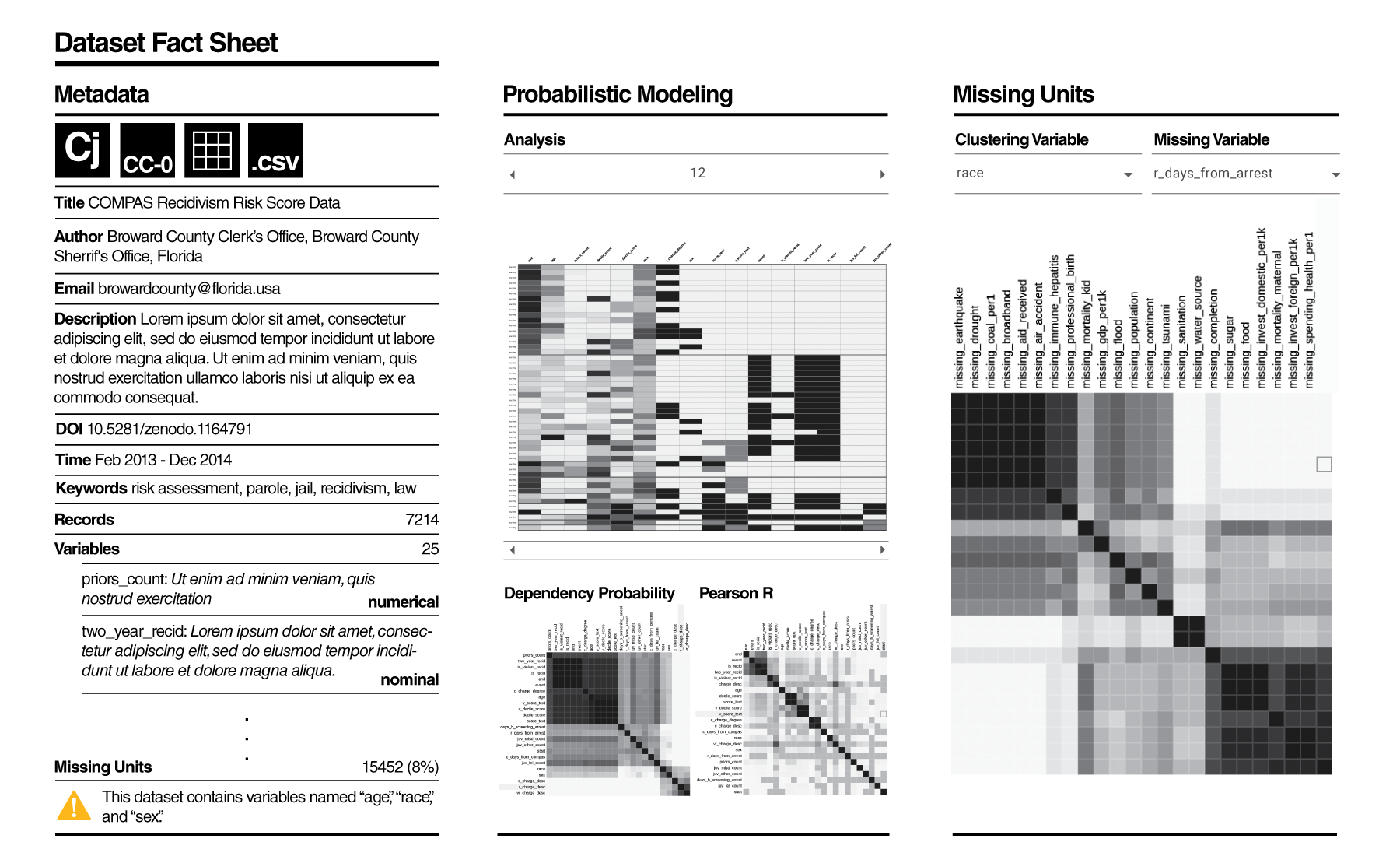

Practice: Cards, Certificates, and Declarations

A ‘Dataset nutrition label’ from the project website

Recently, several groups, including teams at Google and IBM Research, have proposed standardized means of communicating aspects of new datasets and AI services in the papers Model Cards for Model Reporting, Datasheets for Datasets, Data Statements for NLP, The Dataset Nutrition Label, Policy Certificates: Towards Accountable Reinforcement Learning, and Increasing Trust in AI Services through Supplier’s Declarations of Conformity. These methods allow researchers to communicate important information about their work such as a model’s use cases, a dataset’s potential biases, or an algorithm’s security considerations. As the impact of AI research on society continues to grow, we should consider adopting these new standards of communication.
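To make the idea concrete, here is a minimal sketch of what a machine-readable card for a model might look like. The field names are our own illustrative assumptions, loosely following the kinds of information mentioned above (use cases, potential biases, security considerations); they are not the schema defined in any of the papers listed, and the example model is hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Hypothetical, minimal summary of what a reader should know about a model."""

    model_name: str
    intended_uses: List[str]
    out_of_scope_uses: List[str]
    known_dataset_biases: List[str] = field(default_factory=list)
    security_considerations: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Return the names of sections that were left empty."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize the card so it can be shipped alongside code or weights."""
        return json.dumps(asdict(self), indent=2)


# Made-up example: a card for a toy classifier with two sections still unfilled.
card = ModelCard(
    model_name="toy-sentiment-classifier",
    intended_uses=["research on sentiment analysis of product reviews"],
    out_of_scope_uses=["decisions about individual people"],
)
unfilled = card.missing_sections()
```

A check like `missing_sections` could be run before a release to flag a card that says nothing about biases or security, although what counts as a sufficient answer remains a human judgment.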

Practice: Approval and Terms of Access for Datasets, Code, and Models

ImageNet has been among the most important datasets in Computer Vision, but what many may not be aware of is that being given easy download access requires going through an approval stage and agreeing to precise terms of access. It’s far from the only dataset that mandates a request before being shared, with a newer example being The Pilot Parliaments Benchmark (see above). The benefit of such a process is clear for any dataset with a potential for dual-use, although it is admittedly not without some overhead for the lab or organization distributing the dataset.

The same process could also be applied to code and pretrained models, which of course also have potential for dual-use, though the precedent there is not as established. In general, we believe the AI research community will need to discuss and develop new best practices for distribution of data, code, and models that are essential for reproducibility but may be put to harmful use. Related to this, a team made up of AI researchers, a patent attorney/computer programmer, and others recently proposed the idea of Responsible AI Licenses “that developers can include with AI software to restrict its use,” and allow researchers to “include clauses for restrictions on the use, reproduction, and distribution of the code for potentially harmful domain applications of the technology”.

A set of considerations related to distributing research results Google highlighted in Perspectives on Issues in AI Governance

Practice: Revise Peer Review

A reasonable retort to the above suggestions might be that they are not typically done today, and that the effort needed to follow them may not help, or may even hurt, your paper’s chances of getting accepted. That is why we endorse the position put forth in “It’s Time to Do Something: Mitigating the Negative Impacts of Computing Through a Change to the Peer Review Process”. As summarized well in a New York Times opinion piece, “46 academics and other researchers, are urging the research community to rethink the way it shares new technology. When publishing new research, they say, scientists should explain how it could affect society in negative ways as well as positive.”

Practice: Use, share, and create emerging tools and datasets

Lastly, there are several new and emerging tools and datasets that can help you check whether your models or datasets have unintended biases before you distribute them more widely. Some that we would like to highlight are:

- AI Fairness 360, by IBM: an open-source toolkit of different metrics and algorithms developed from the broader Fairness AI community that checks for unfairness and biases in models and datasets

- Facets, by Google’s AI + People Research group: two robust visualization tools to aid in understanding and analyzing machine learning datasets, which might be helpful in quickly identifying biases in your datasets

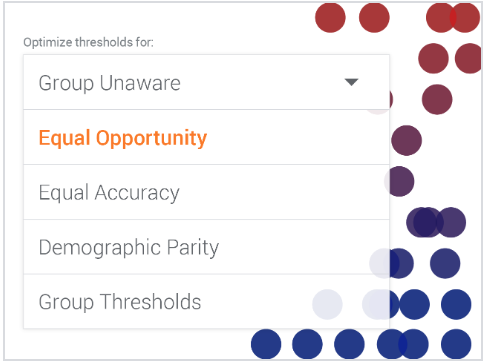

- What If, by Google’s AI + People Research group: a neat tool to play “what if” with theories of fairness, see the trade-offs, and make the difficult decisions that only humans can make.

- gn_glove, by the authors of Learning Gender-Neutral Word Embeddings (EMNLP 2018): a set of gender-neutral word vectors meant to remove stereotypes that have been shown to exist in prior word vectors.

- WinoBias, by the authors of Gender Bias in Coreference Resolution: Evaluation and Debiasing Methods: a benchmark for coreference resolution focused on gender bias.

- Wikipedia Toxicity Dataset, by Wikimedia: a dataset of 1 million crowd-sourced annotations targeting personal attacks, aggression, and toxicity.

- The Pilot Parliaments Benchmark (PPB), by the Algorithmic Justice League: a dataset of human faces meant to achieve better intersectional representation on the basis of gender and skin type. It consists of 1,270 individuals, selected for gender parity, in the national parliaments of three African countries and three European countries.

- The diverse facial recognition dataset, by IBM: a dataset of 36,000 images that is equally distributed across skin tones, genders, and ages.

These are examples we have been able to find, but in general keeping an eye out for such datasets and tools and considering them for your own research is a sensible idea.
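To give a flavor of what a bias check can involve, the following is a hand-rolled sketch of one commonly used group fairness measure, the disparate impact ratio (the rate of favorable predictions for one group divided by the rate for another). It does not use the API of AI Fairness 360 or any other tool listed above; the function names, the group labels, and the toy predictions are all assumptions made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Fraction of favorable predictions (1) per group.

    Each record is a (group_label, prediction) pair with prediction in {0, 1}.
    """
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        favorable[group] += prediction
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact(rates: Dict[str, float], unprivileged: str, privileged: str) -> float:
    """Ratio of selection rates; values well below 1 suggest the unprivileged
    group receives favorable predictions less often.

    Assumes the privileged group's rate is non-zero.
    """
    return rates[unprivileged] / rates[privileged]


# Made-up predictions for two hypothetical groups, "a" and "b".
toy_predictions = [
    ("a", 1), ("a", 1), ("a", 1), ("a", 0),
    ("b", 1), ("b", 0), ("b", 0), ("b", 0),
]
rates = selection_rates(toy_predictions)  # a: 3 of 4 favorable, b: 1 of 4
ratio = disparate_impact(rates, unprivileged="b", privileged="a")
print(f"selection rates: {rates}, disparate impact ratio: {ratio:.2f}")
```

A ratio below roughly 0.8 is often treated as a warning sign (the so-called four-fifths rule of thumb), but a single number like this is only a starting point: it says nothing about why the rates differ, and the dedicated toolkits above offer many more metrics and mitigation methods.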

Advocacy

Fairness and ethical AI is a growing field in and of itself. If you would like to go beyond the basic expected ethical practices of educating yourself and communicating potential misuses of your creations, here are suggestions on how you can help make the field of AI, and the tools our peers create, more ethical, inclusive, and fair.

Practice: Bring up Concerns in Teaching and Talks

Image from one of Stanford AI Lab’s ‘AI Salon’ events on Best Practices in doing Ethical AI Research

One straightforward principle is to explicitly communicate the ethical implications of our research whenever we get the opportunity. We can easily start in our classrooms, by dedicating parts of the syllabus to ethical concerns in the field and bringing up historical or current examples of misuse. For instance, we can bring up the possibility of unintended bias and how to guard against it when teaching Machine Learning. When assigning large projects, we can provide guidelines for how students can identify and express concerns about the implications of their work. Further still, we can advocate for courses that delve deeper into these topics, such as Stanford’s CS122: Artificial Intelligence - Philosophy, Ethics, and Impact, CS181: Computers, Ethics, and Public Policy, and CS 521: Seminar on AI Safety. A similar approach could be taken with talks and interviews: simply allocate a portion of them to explicitly addressing any ethical concerns in the research.

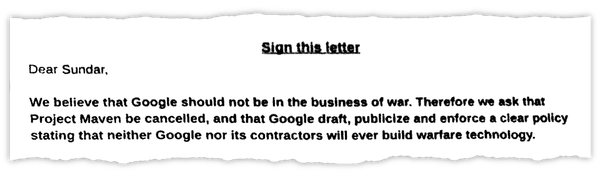

Practice: Take a Stand

As you develop your own code of ethics, you might start noticing when other researchers and institutions make unethical decisions that make you feel uncomfortable. Sometimes, those institutions might be the company or university you work for, or your own government. If your institution goes against your code of ethics, we want to remind you that as an AI researcher, you are not powerless.

A snapshot of the open letter by Google employees about Maven

People can influence the sorts of research their institution pursues through collective action, protests, and even activism. Recently, more than 4,000 employees at Google signed an open letter against Project Maven, the company’s contract to develop AI technology for drone video analysis for the Pentagon, over fears that such technology will be used in drone strikes. Google announced soon after that they would not continue with the project, and that they would not participate in JEDI, the $10 billion cloud contract with the Department of Defense, citing their AI principles. Similarly, employees have also protested against Microsoft’s bid in JEDI, and employees of Amazon have protested against the company’s work with the US Immigration and Customs Enforcement (ICE).

Practice: Obtain and promote more diverse research perspectives

Joy Buolamwini’s TED Talk discussing her research on biases in facial recognition algorithms

In 2017, Joy Buolamwini discovered that state-of-the-art facial recognition algorithms performed very poorly for people of color after testing the algorithms on herself. The fact that some of our best algorithms cannot perform well for those that are underrepresented in the field of AI should not be surprising: as researchers, our approaches to and perspectives for research can often be limited by our experiences, histories, and identities. This is why increasing diversity and making AI a more inclusive place for underrepresented talents can help our field become more ethical and less biased.

To be clear, the general benefit of diversity in research and academia is widely studied and accepted, and is well explained in this column by the Association for Psychological Science. Our specific point is that having a more intellectually and experientially diverse research community will make us much better at identifying the implications of our research on the world at large, which in turn will make it easier for the policymakers to come up with more equitable use cases of AI algorithms. Therefore, we should strive to encourage and nurture diversity and inclusiveness in our institutions and teams, not just for better hiring and enrollment numbers, but for richer and more profound perspectives on our work.

If you are not in a position to hire and diversify your team, we have two suggestions. First, you can expand your own intellectual circle. It is easy to surround yourself with your colleagues and other AI researchers, and only have research discussions with people in your field. Try reaching out to thinkers and researchers in other fields, especially fields that think deeply about ethics and the societal implications of technology, such as philosophy, law, or sociology. Second, consider mentoring underrepresented researchers. Through mentorship, you can encourage more diverse talents to join the field and give more resources to the people historically underrepresented in AI. We highly recommend getting involved with programs such as AI4ALL, Women in AI, and Black in AI.

Practice: Large Scale Initiatives

While all the above steps by us as individuals are collectively powerful, more directed efforts aimed at dealing with ethical issues in AI research are also useful. Therefore, we conclude by highlighting some of the emerging institutions committed to this cause:

- AI Now Institute: An NYU research institute that focuses on four domains: rights and liberties, labor and automation, bias and inclusion, and safety and critical domains.

- Human-Centered AI Institute (HAI): A Stanford institute that works on advancing AI research that benefits humanity. HAI funds human-centered AI research, and has several fellowships available.

- Ada Lovelace Institute: An independent research group set up by the Nuffield Foundation that examines ethical and social issues arising from the use of data, algorithms, and AI.

- The Ethics and Governance of Artificial Intelligence Initiative: A joint project of the MIT Media Lab and the Harvard Berkman-Klein Center for Internet and Society, that does both philanthropic work as well as research in justice, information quality, and autonomy & interaction.

- AAAI/ACM Conference on AI, Ethics, and Society: a multi-disciplinary conference meant to help address ethical concerns regarding AI with the collaboration of experts from various disciplines, such as ethics, philosophy, economics, sociology, psychology, law, history, and politics.

- The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems: An IEEE committee working on the overarching principles of the ethical design and use of autonomous and intelligent systems.

- Partnership on AI: A multilateral organization that brings together companies, academics, researchers, and civil society organizations to formulate best practices for AI technologies.

- The Future of Life Institute: An organization with the mission to catalyze and support research and initiatives for safeguarding life and developing optimistic visions of the future, which organizes many events and discussions about the future of AI.

Group photo from the Future of Life Beneficial AGI 2019 event

There are of course many other institutions and labs that do work focusing on the ethics and policy of AI, and this list is non-comprehensive.

Conclusion

Regardless of how much one cares to discuss ethics, the undeniable fact is that AI has more potential to change the landscape of our civilization than perhaps any other human invention. The nature of this change will depend greatly on our collective understanding of the benefits and limitations of various AI technologies, and this understanding depends greatly on the engagement of researchers with policymakers, legislators and the broader public as well. The time for taking shelter behind the ivory tower of academic immunity and washing our hands of the implications of our work is over. Instead, let us think about, discuss, and account for these implications in our work to keep them as positive as possible.