The Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS) 2023 is being hosted in New Orleans from December 10th - December 16th. We’re excited to share all the work from SAIL that’s being presented at the main conference, at the Datasets and Benchmarks track and the various workshops. You can find links to papers, videos and blogs below.

Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

Main Conference

Are Emergent Abilities of Large Language Models a Mirage?

Contact: rschaef@cs.stanford.edu

Award nominations: Oral

Keywords: recent work claims that large language models display emergent abilities, abilities not present in smaller-scale models that are present in larger-scale models. what makes emergent abilities intriguing is two-fold: their sharpness, transitioning seemingly instantaneously from not present to present, and their unpredictability, appearing at seemingly unforeseeable model scales. here, we present an alternative explanation for emergent abilities: that for a particular task and model family, when analyzing fixed model outputs, emergent abilities appear due to the researcher’s choice of metric rather than due to fundamental changes in model behavior with scale. specifically, nonlinear or discontinuous metrics produce apparent emergent abilities, whereas linear or continuous metrics produce smooth, continuous predictable changes in model performance. we present our alternative explanation in a simple mathematical model, then test it in three complementary ways: we (1) make, test and confirm three predictions on the effect of metric choice using the instructgpt/gpt-3 family on tasks with claimed emergent abilities; (2) make, test and confirm two predictions about metric choices in a meta-analysis of emergent abilities on big-bench; and (3) show to choose metrics to produce never-before-seen seemingly emergent abilities in multiple vision tasks across diverse deep networks. via all three analyses, we provide evidence that alleged emergent abilities evaporate with different metrics or with better statistics, and may not be a fundamental property of scaling ai models.

DecodingTrust: A Comprehensive Assessment of Trustworthiness in GPT Models

Contact: rschaef@cs.stanford.edu

Award nominations: Oral

Keywords: large language models, natural language processing, trustworthiness

Self-Supervised Learning of Representations for Space Generates Multi-Modular Grid Cells

Contact: rschaef@cs.stanford.edu

Keywords: self-supervised learning, neuroscience

AlpacaFarm: A Simulation Framework for Methods that Learn from Human Feedback

Contact: lxuechen@cs.stanford.edu

Links: Paper | Blog Post | Website

Keywords: instruction-following, large language models, reinforcement learning from human feedback

BARFI Behavior Alignment via Reward Function Optimization

Contact: ychandak@stanford.edu

Award nominations: Spotlight

Links: Paper

Keywords: reward design, reward shaping, bi-level optimization,

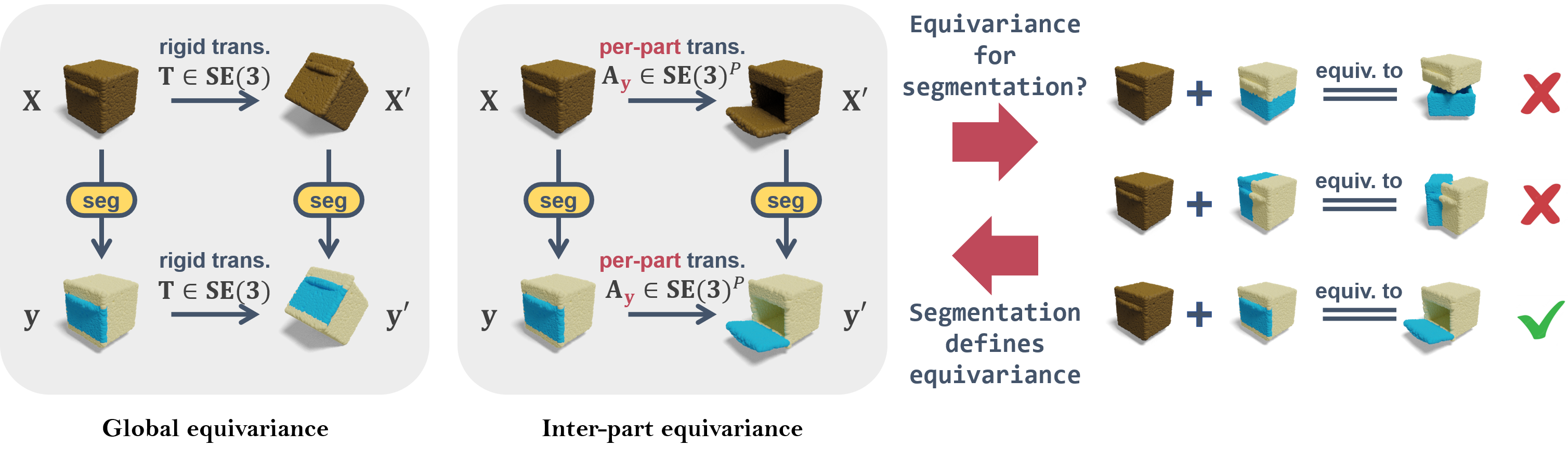

Banana: Banach Fixed-Point Network for Pointcloud Segmentation with Inter-Part Equivariance

Contact: congyue@stanford.edu

Links: Paper | Video | Website

Keywords: equivariance, pointcloud segmentation, iterative inference

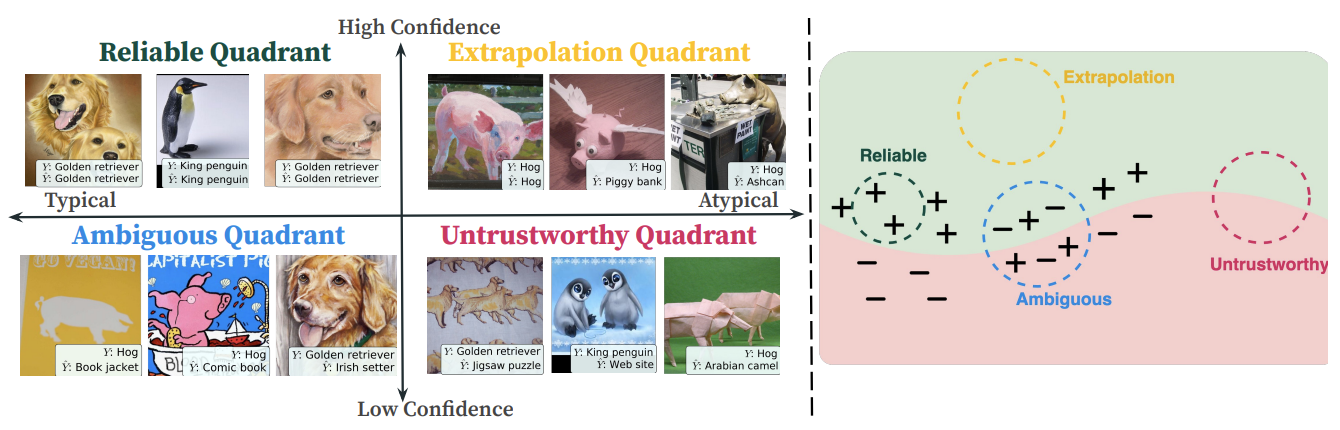

Beyond Confidence: Reliable Models Should Also Consider Atypicality

Contact: merty@stanford.edu

Links: Paper | Website

Keywords: reliable machine learning, uncertainty, calibration

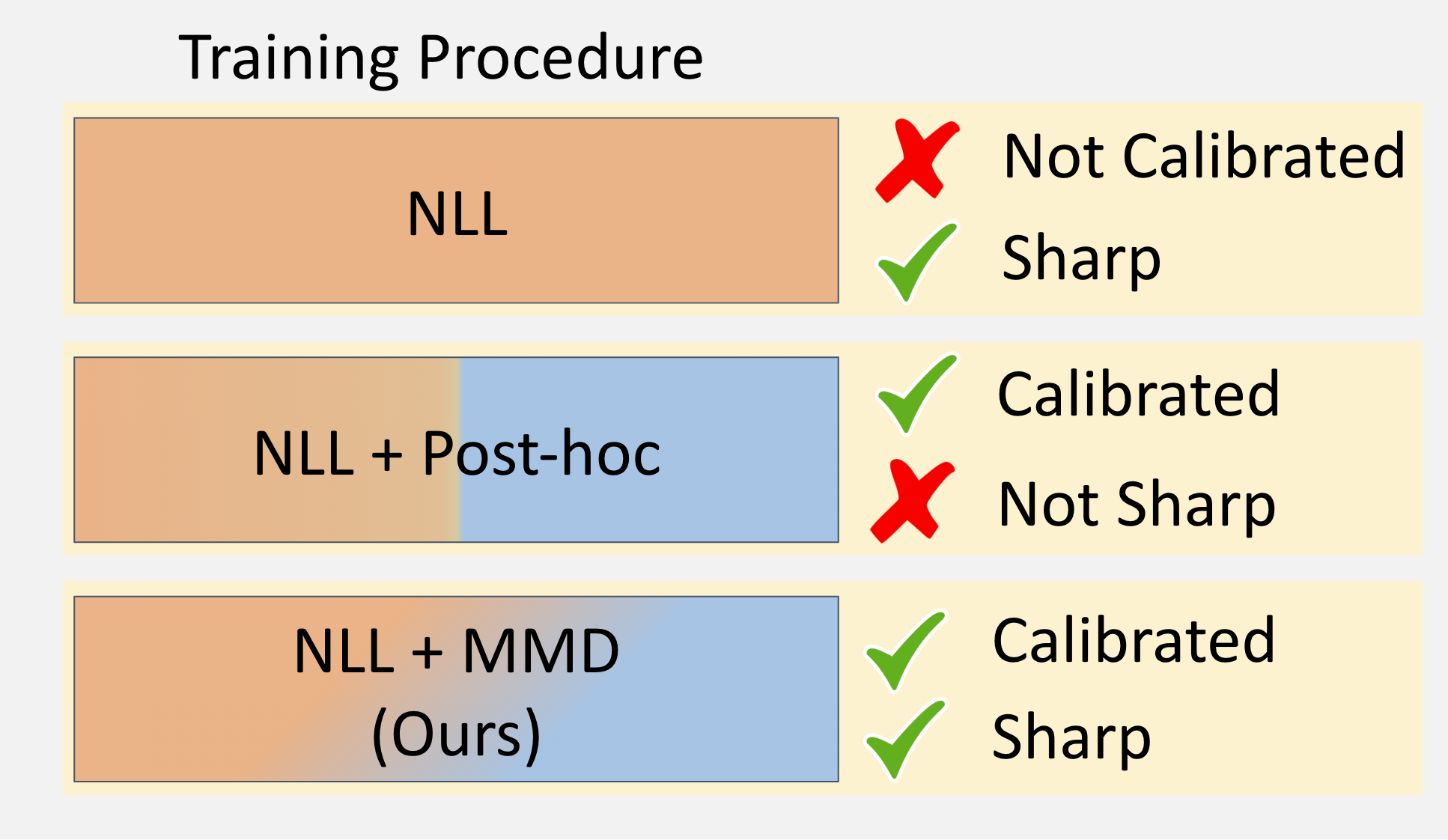

Calibration by Distribution Matching: Trainable Kernel Calibration Metrics

Contact: ctmarx@stanford.edu

Links: Paper

Keywords: calibration, uncertainty quantification, decision-making under uncertainty

Convolutional State Space Models for Long-Range Spatiotemporal Modeling

Contact: jsmith14@stanford.edu

Links: Paper | Website

Keywords: ssms, convlstm, spatiotemporal modeling, video prediction

Data Selection for Language Models via Importance Resampling

Contact: xie@cs.stanford.edu

Links: Paper | Website

Keywords: language models, data selection, pretraining, data-centric ml

DataComp: In search of the next generation of multimodal datasets

Contact: syagadre@gmail.com

Links: Paper | Blog Post | Website

Keywords: clip, zero-shot, data curation, vision-and-language, datasets, pre-training, benchmark

Disentanglement via Latent Quantization

Contact: kylehsu@cs.stanford.edu

Links: Paper | Website

Keywords: disentanglement, representation learning, discrete representations

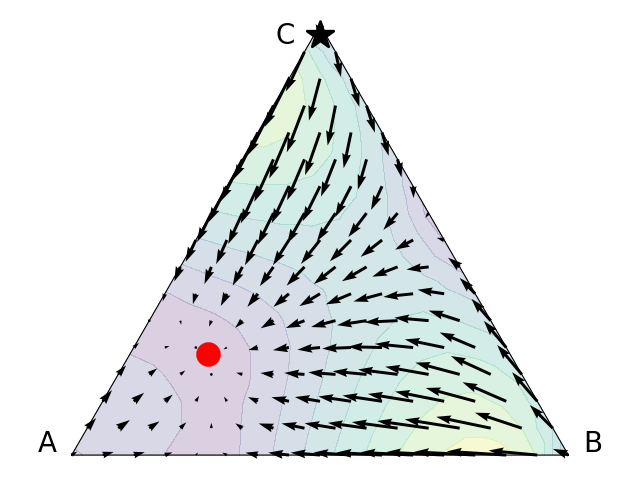

Diverse Conventions for Human-AI Collaboration

Contact: bidiptas@stanford.edu

Links: Paper | Video | Website

Keywords: multi-agent rl, multi-agent coordination, human-ai coordination

DoReMi: Optimizing Data Mixtures Speeds Up Language Model Pretraining

Contact: xie@cs.stanford.edu

Award nominations: Spotlight

Links: Paper | Blog Post | Website

Keywords: large language models, pretraining, data mixtures, data-centric ml

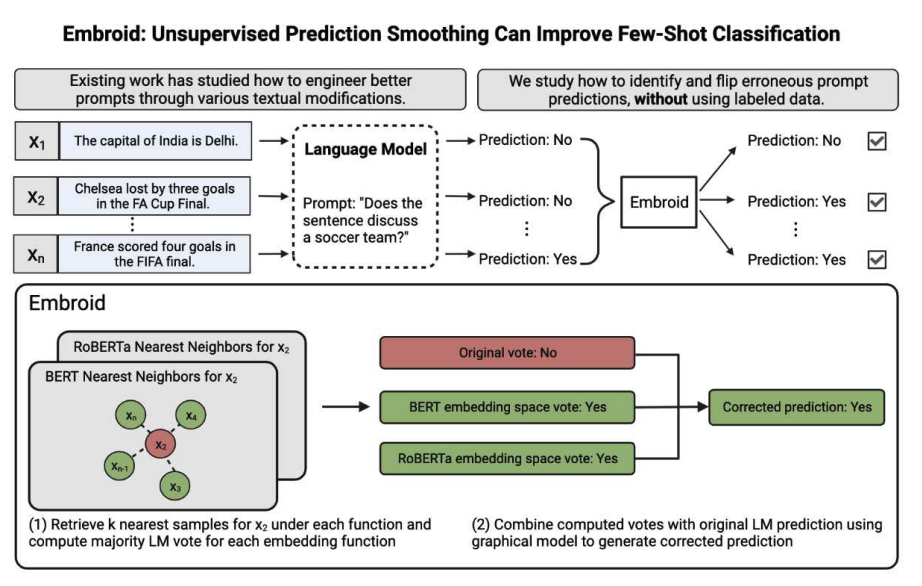

Embroid: Unsupervised Prediction Smoothing Can Improve Few-Shot Classification

Contact: nguha@stanford.edu

Links: Paper | Blog Post | Website

Keywords: large language models, prompt correction, weak supervision, graphical models

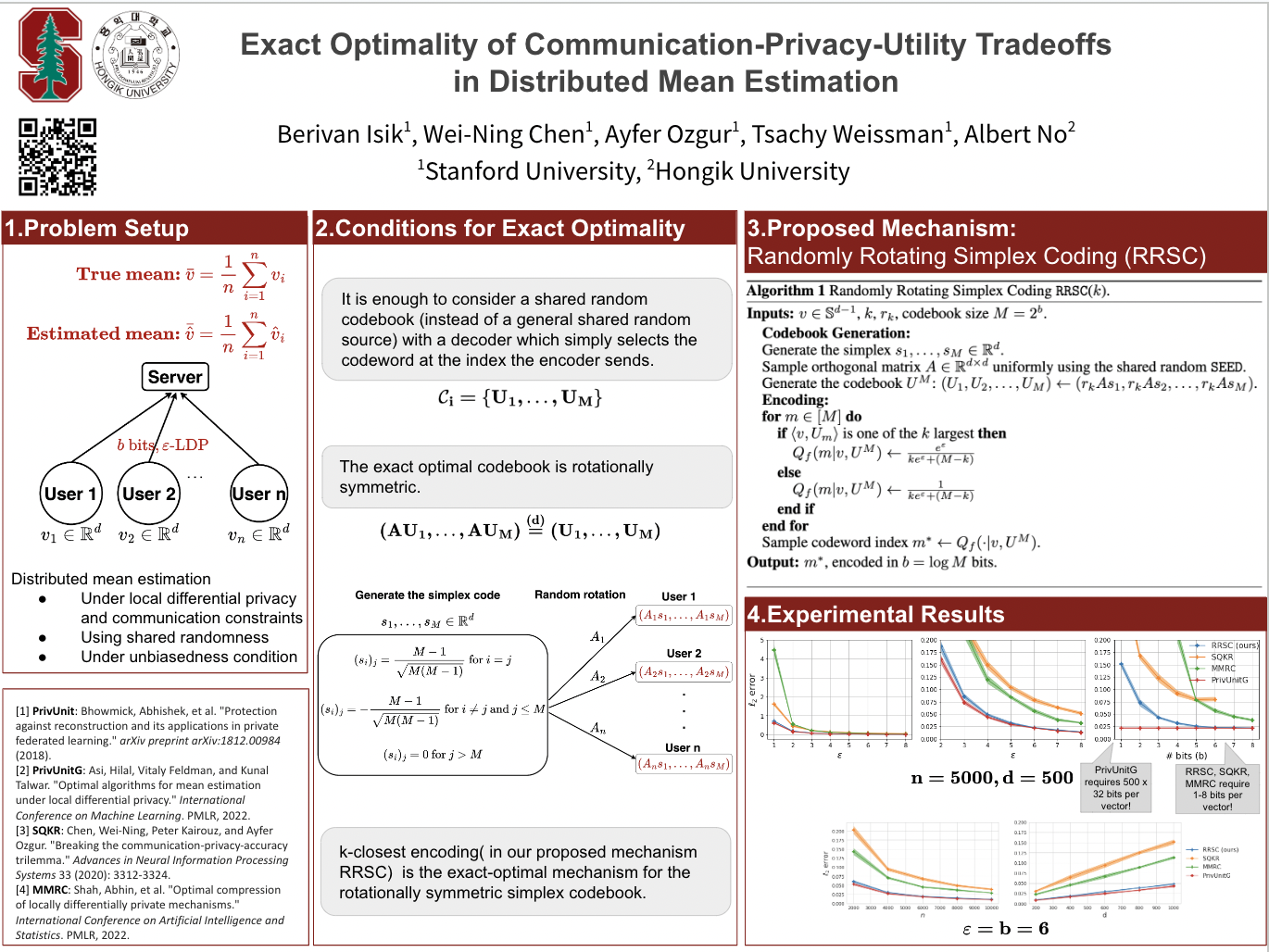

Exact Optimality of Communication-Privacy-Utility Tradeoffs in Distributed Mean Estimation

Contact: berivan.isik@stanford.edu

Links: Paper

Keywords: distributed mean estimation, privacy, compression, communication, federated analytics.

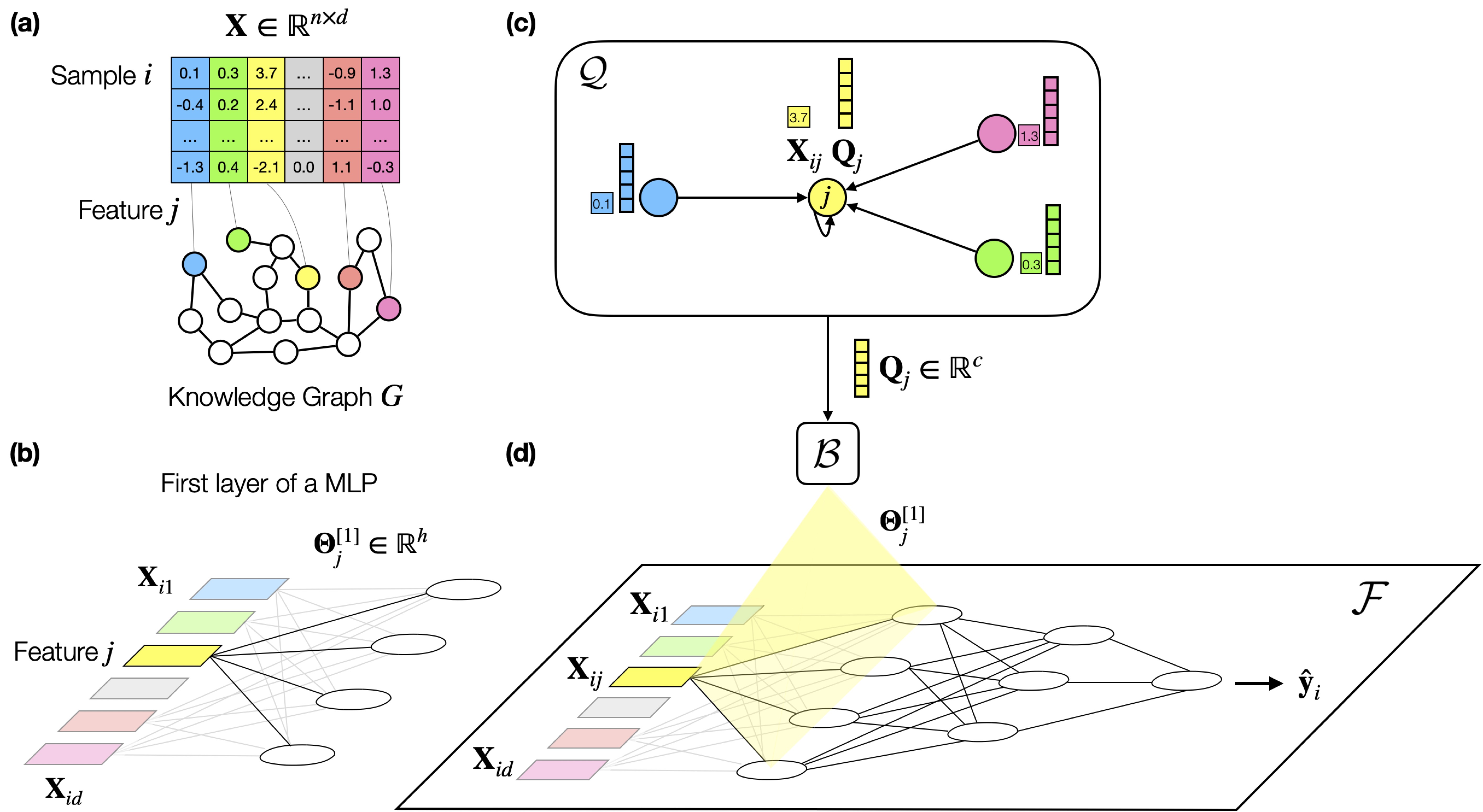

High dimensional, tabular deep learning with an auxiliary knowledge graph

Contact: caruiz@cs.stanford.edu

Links: Paper

Keywords: deep learning, high dimensional, tabular prediction, knowledge graph, graph machine learning

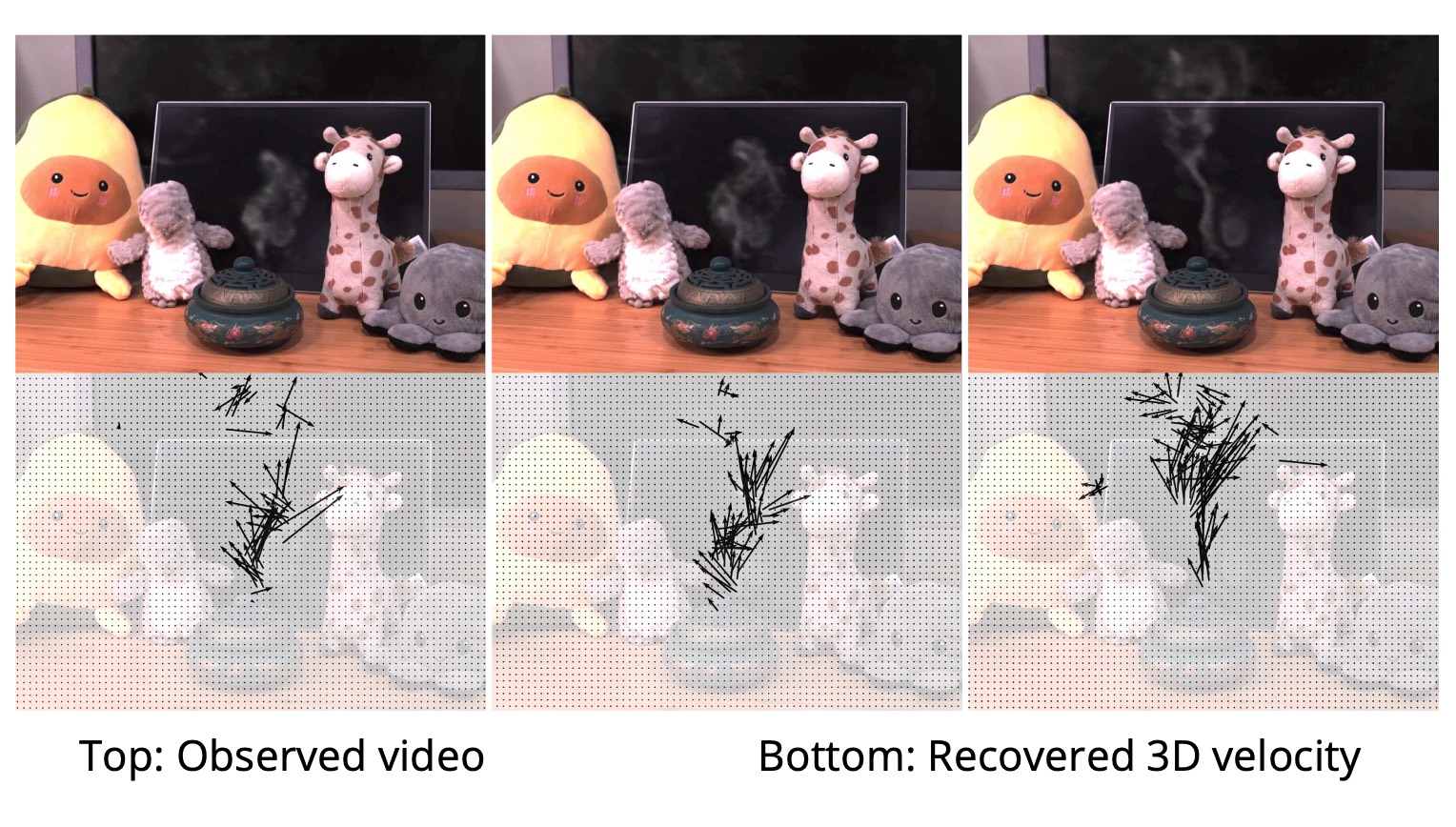

Inferring Hybrid Fluid Fields from Videos

Contact: xkoven@gmail.com

Links: Paper | Website

Keywords: fluid, video, motion, physics, reconstruction

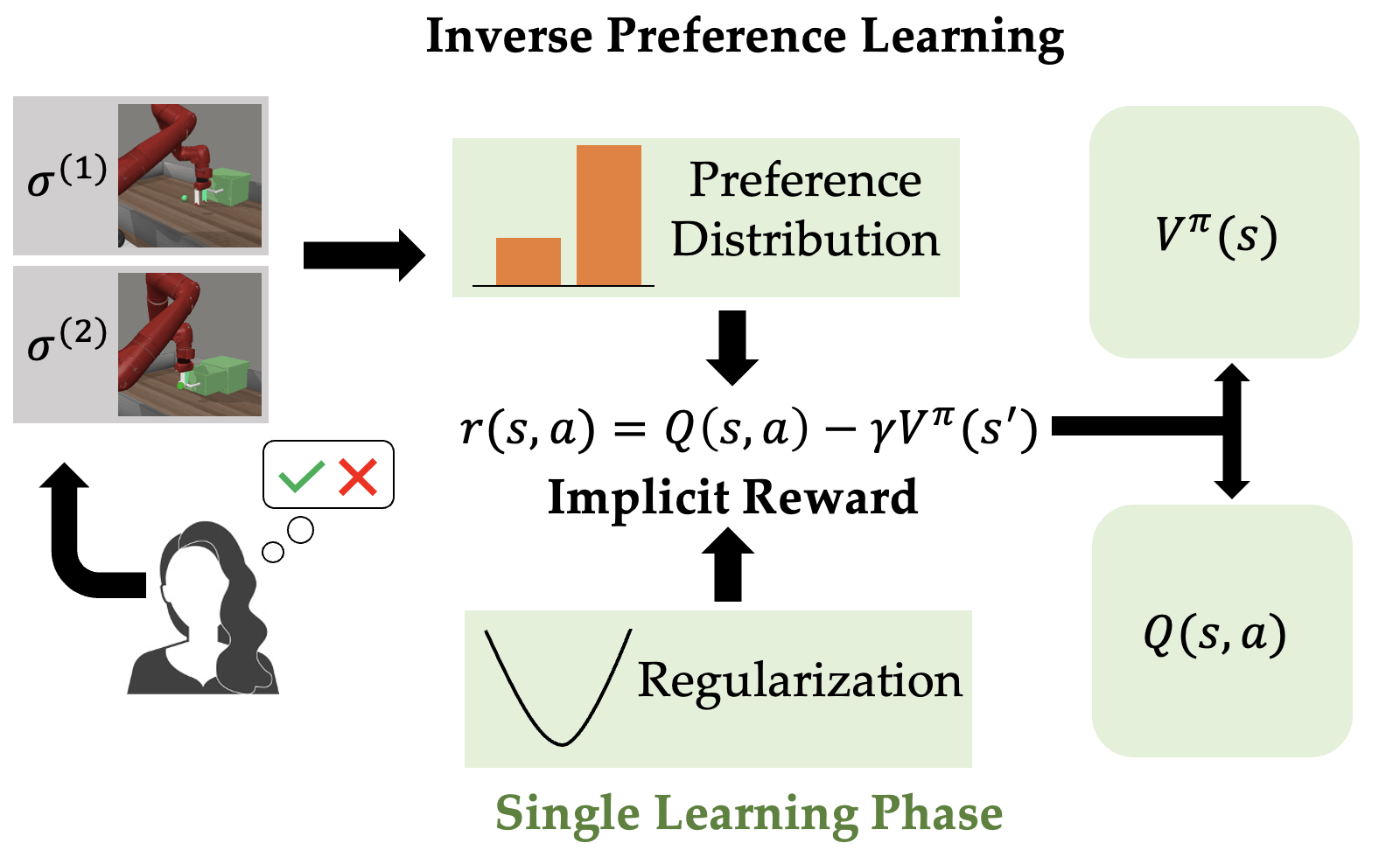

Inverse Preference Learning: Preference-based RL without a Reward Function

Contact: jhejna@stanford.edu

Links: Paper

Keywords: reinforcement learning, preference-based rl, rlhf

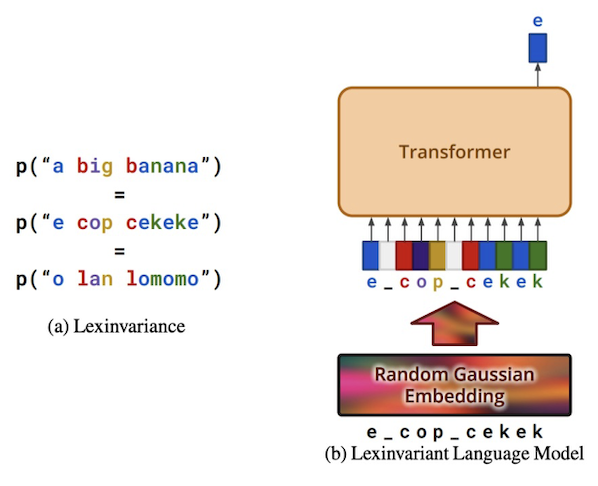

Lexinvariant Language Models

Contact: qhwang@stanford.edu

Award nominations: Spotlight

Links: Paper

Keywords: large language model, in-context learning, pretraining

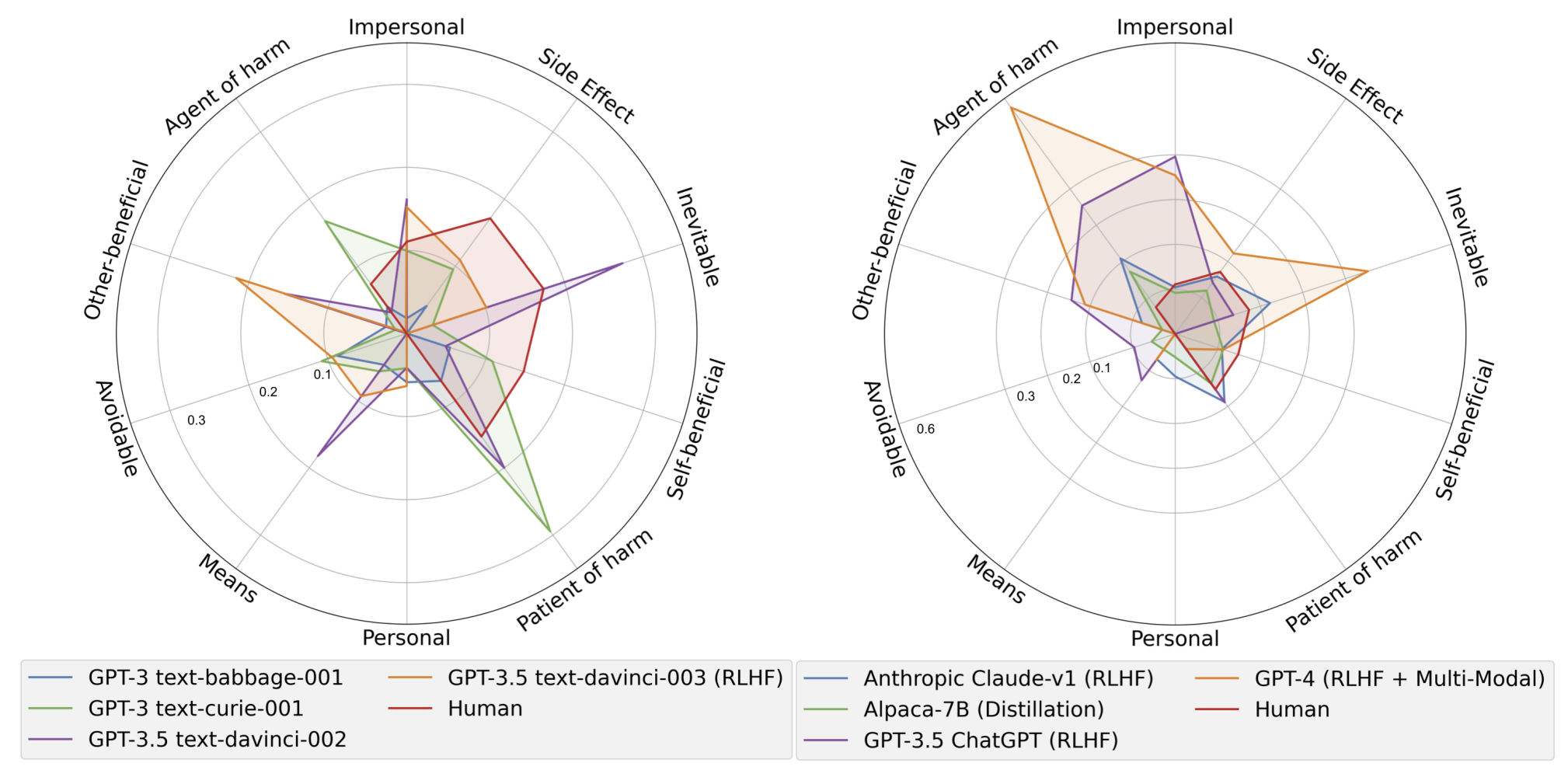

MoCa: Measuring Human-Language Model Alignment on Causal and Moral Judgment Tasks

Contact: anie@stanford.edu

Links: Paper | Website

Keywords: cognitive science, causal reasoning, moral reasoning, dataset, language models

NAP: Neural 3D Articulation Prior

Contact: congyue@stanford.edu

Links: Paper | Video | Website

Keywords: 3d generative model, articulated object, diffusion model

NAS-X: Neural Adaptive Smoothing via Twisting

Contact: dieterich.lawson@gmail.com, michaelyli@stanford.edu

Links: Paper

Keywords: sequence models, probabilistic inference, reweighted wake-sleep, sequential monte carlo, smoothing, mechanistic models

OpenDataVal: a Unified Benchmark for Data Valuation

Contact: wxliang@stanford.edu

Links: Paper | Website

Keywords: data valuation, influence function, data shapley

PRODIGY: Enabling In-context Learning Over Graphs

Contact: qhwang@stanford.edu

Award nominations: Spotlight

Links: Paper | Website

Keywords: graph neural network, in-context learning, pretraining

Parallel Sampling of Diffusion Models

Contact: andyshih@stanford.edu

Award nominations: Spotlight

Links: Paper

Keywords: diffusion model, sampling, parallel

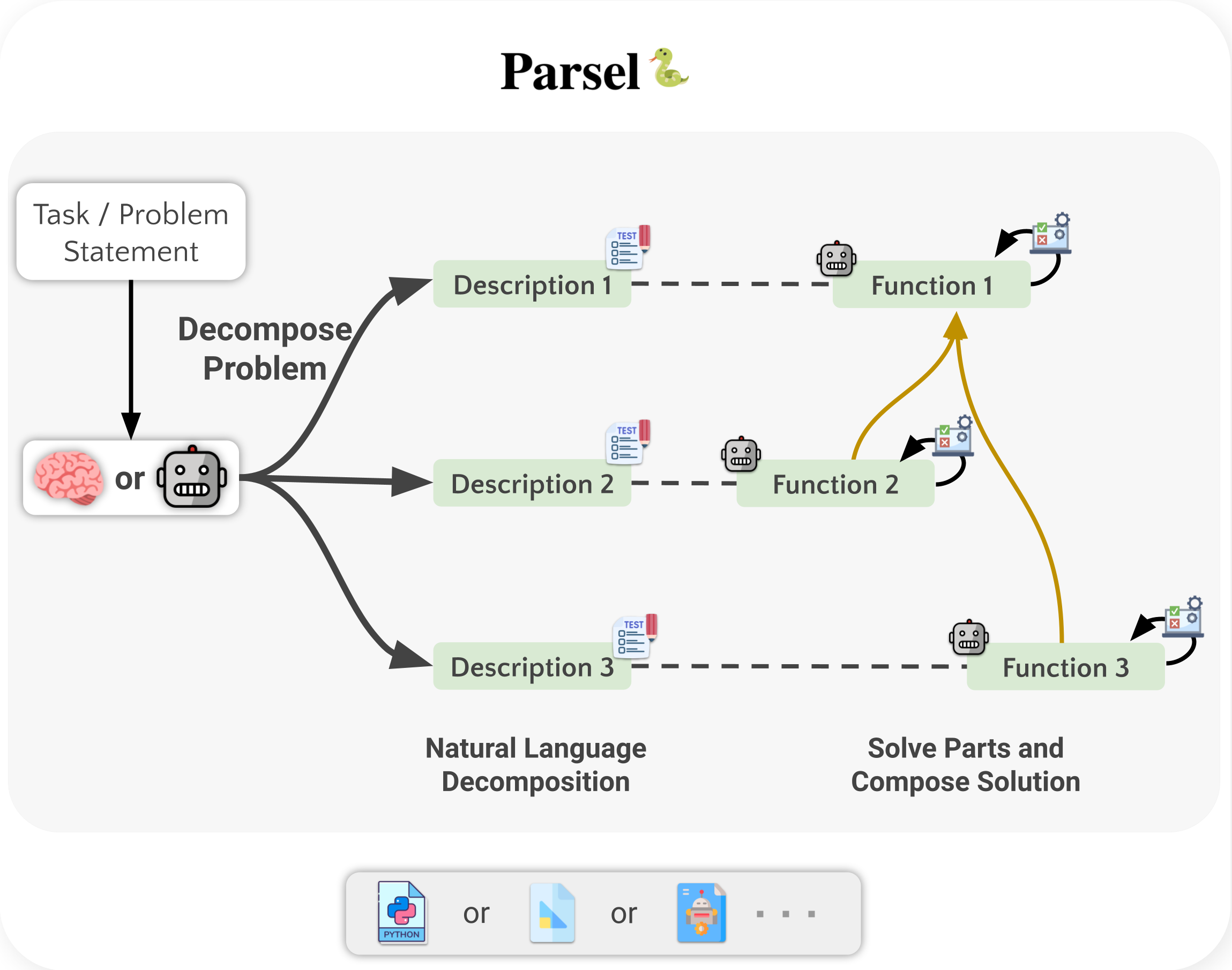

Parsel🐍: Algorithmic Reasoning with Language Models by Composing Decompositions

Contact: ezelikman@cs.stanford.edu

Award nominations: Spotlight

Links: Paper | Website

Keywords: reasoning, language models, code synthesis, decomposition

Selectively Sharing Experiences Improves Multi-Agent Reinforcement Learning

Contact: mgerst@stanford.edu

Links: Paper | Website

Keywords: multi-agent reinforcement learning, cooperative ai, dqn

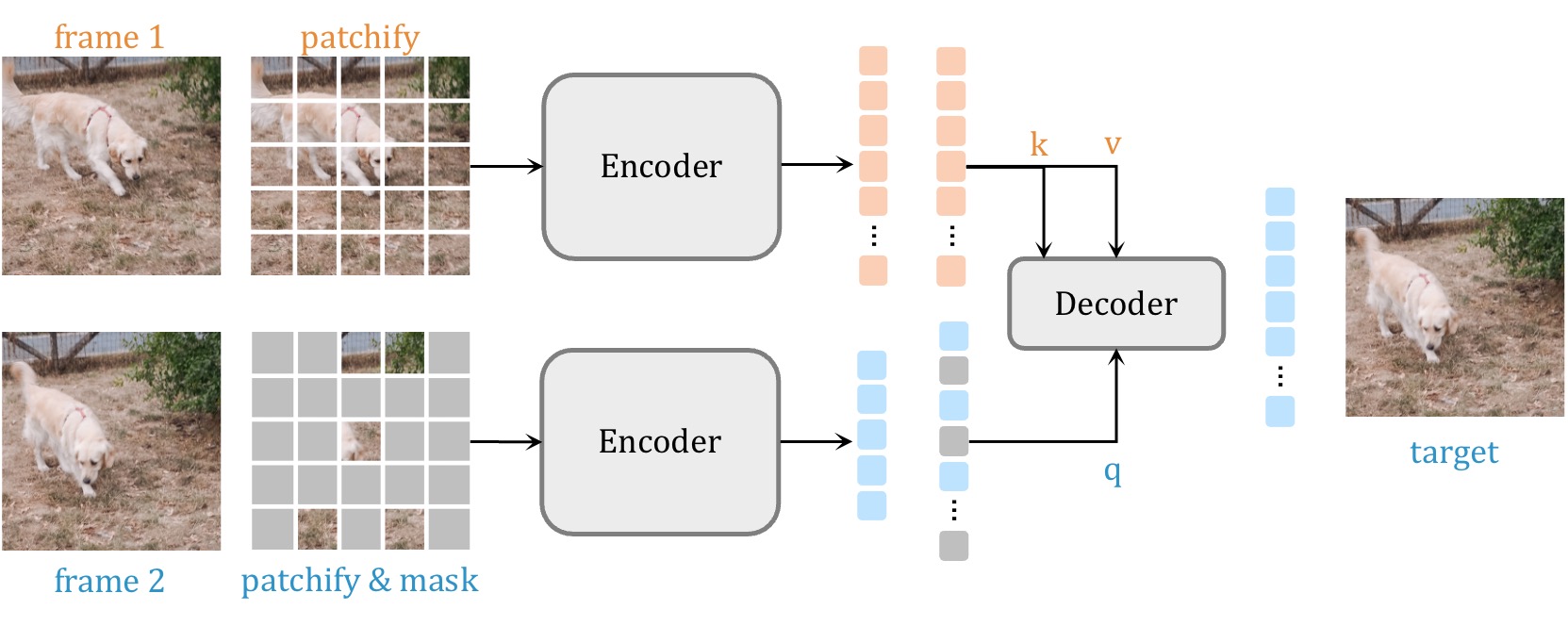

Siamese Masked Autoencoders

Contact: agrim@stanford.edu

Award nominations: Oral

Links: Paper | Website

Keywords: representation learning, visual correspondence, self-supervised learning, videos

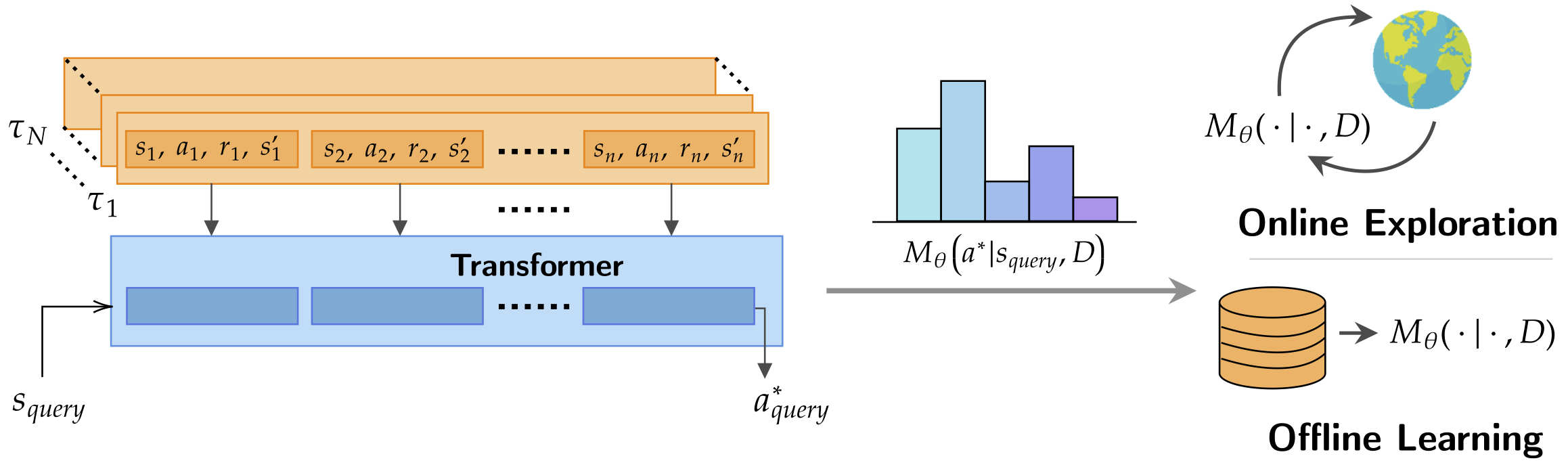

Supervised Pretraining Can Learn In-Context Reinforcement Learning

Contact: jnl@stanford.edu, anniexie@stanford.edu

Award nominations: Spotlight

Links: Paper | Website

Keywords: decision making, reinforcement learning, in-context learning, bandits, transformers, offline reinforcement learning, exploration, reinforcement learning theory

Tartarus: A Benchmarking Platform for Realistic And Practical Inverse Molecular Design

Contact: akshat98@stanford.edu

Links: Paper | Website

Keywords: molecular design, generative modelling

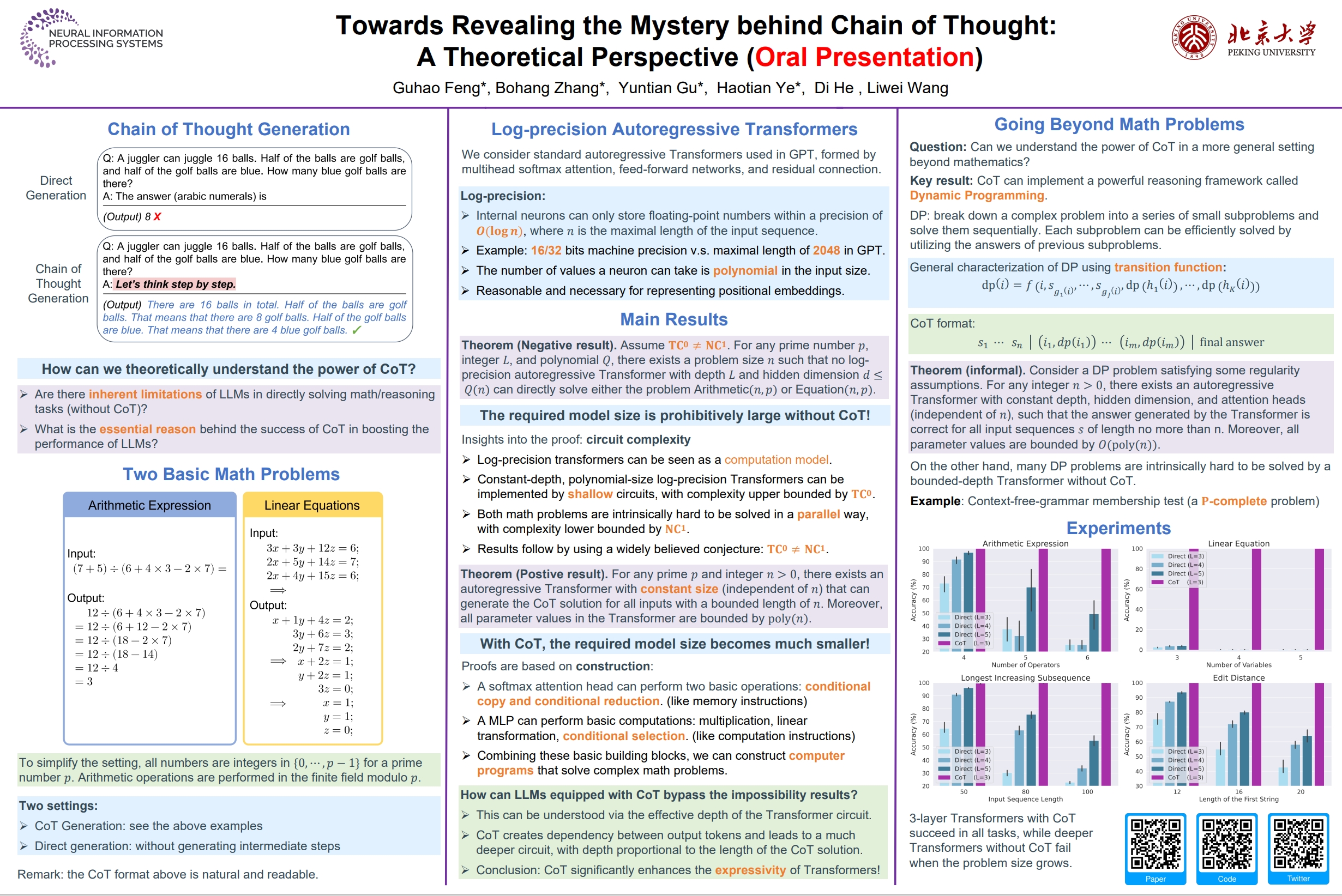

Towards Revealing the Mystery behind Chain of Thought: A Theoretical Perspective

Contact: haotianye@stanford.edu

Award nominations: Oral

Links: Paper | Video | Website

Keywords: chain-of-thought prompting, large language models, theory, circuit complexity, dynamic programming

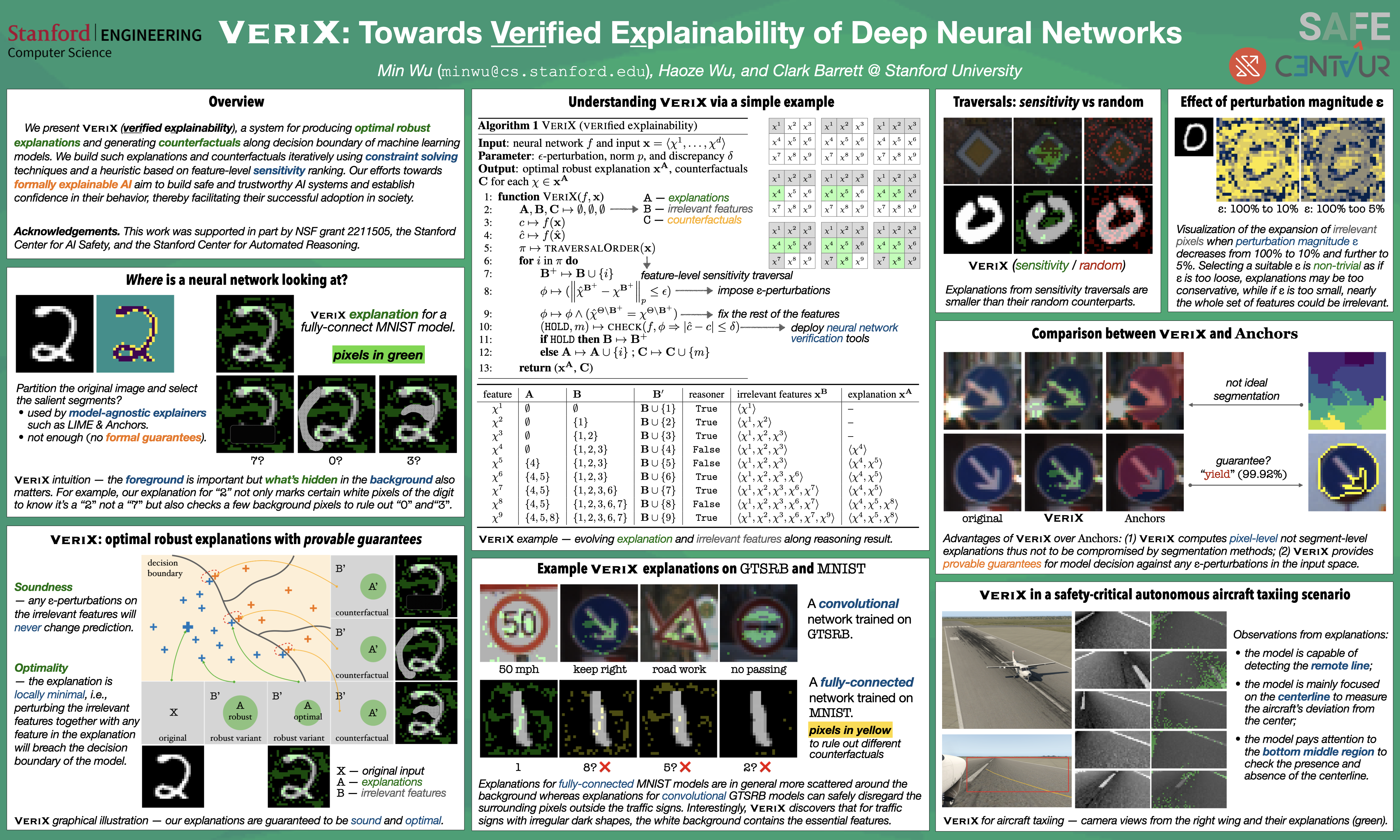

VeriX: Towards Verified Explainability of Deep Neural Networks

Contact: minwu@stanford.edu

Links: Paper | Video | Website

Keywords: trustworthy machine learning, deep neural networks, explainability, interpretability, formal methods, automated verification

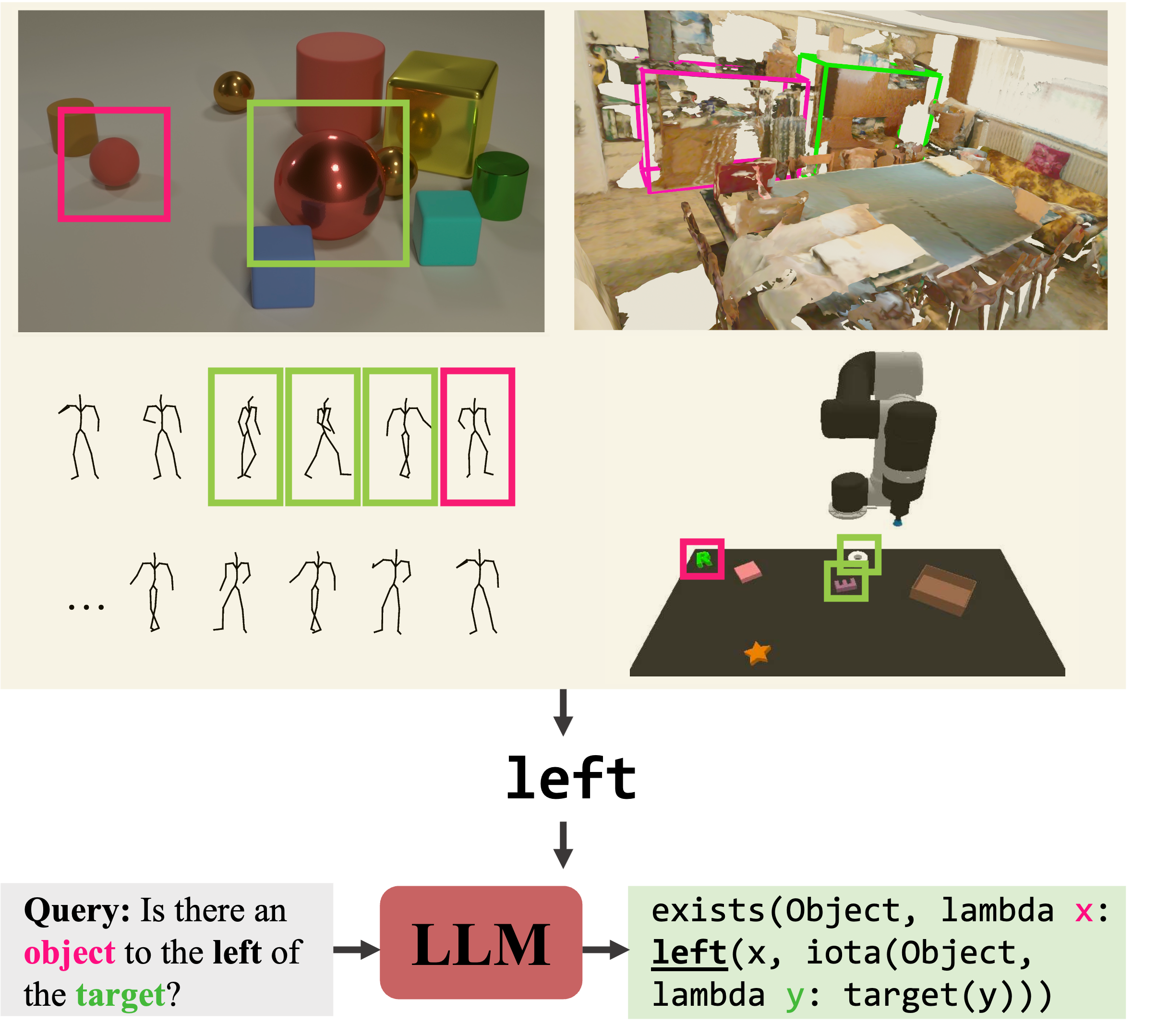

What’s Left? Concept Grounding with Logic-Enhanced Foundation Models

Contact: joycj@stanford.edu

Links: Paper | Website

Keywords: concept learning, visual reasoning, large language models, neuro-symbolic learning

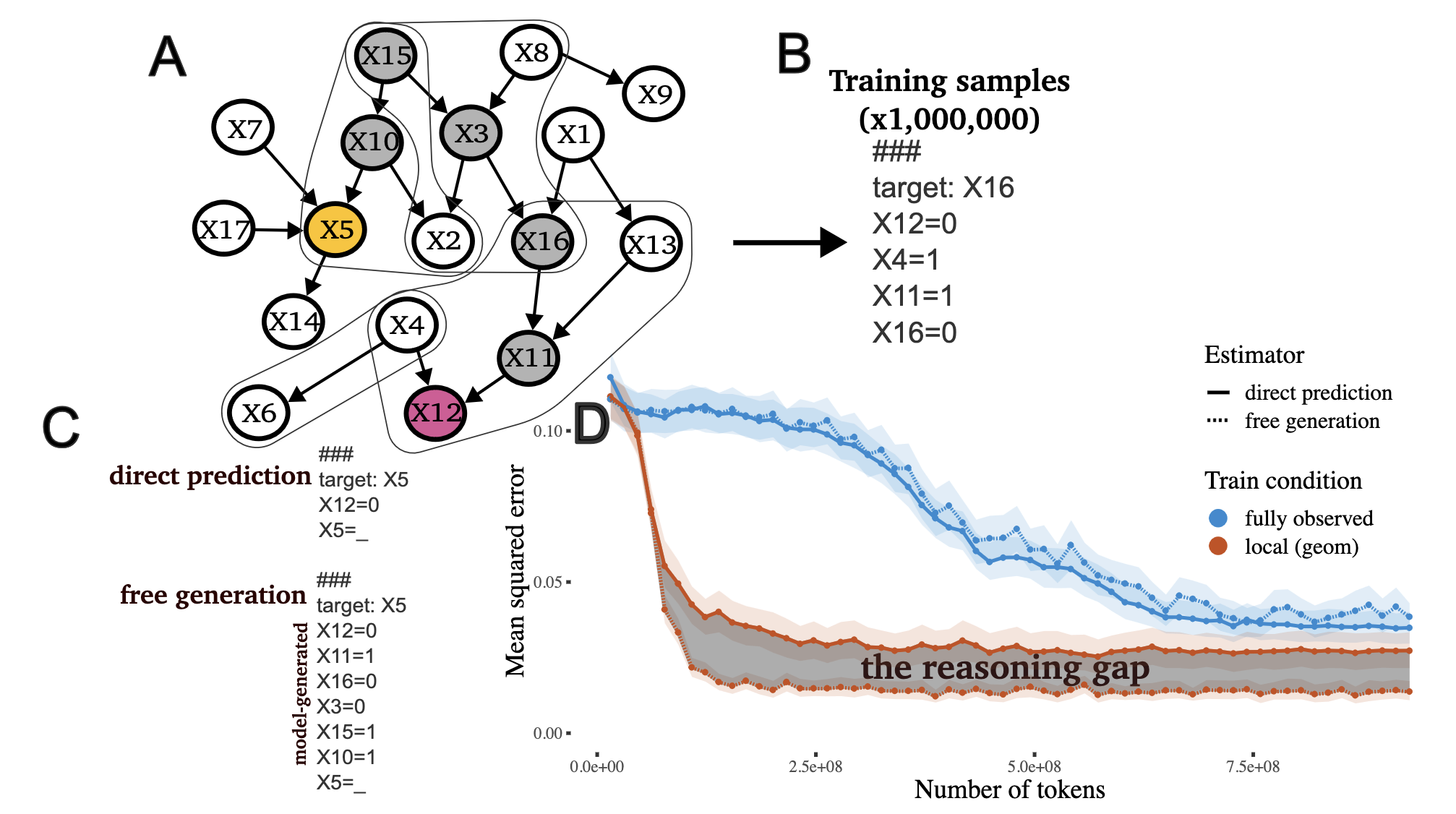

Why think step by step? Reasoning emerges from the locality of experience

Contact: benpry@stanford.edu

Award nominations: Oral

Links: Paper

Keywords: chain-of-thought; language models; reasoning

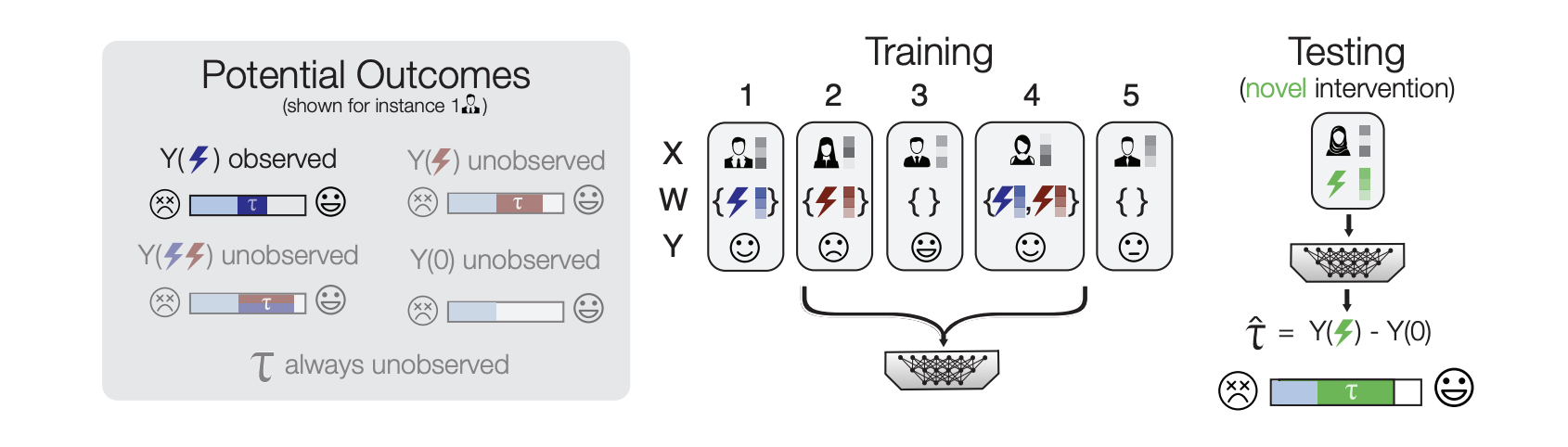

Zero-shot causal learning

Contact: hamedn@cs.stanford.edu; mdmoor@cs.stanford.edu

Award nominations: Spotlight

Links: Paper

Keywords: causal inference; zero-shot; meta-learning; health; drug side effects

Datasets and Benchmarks Track

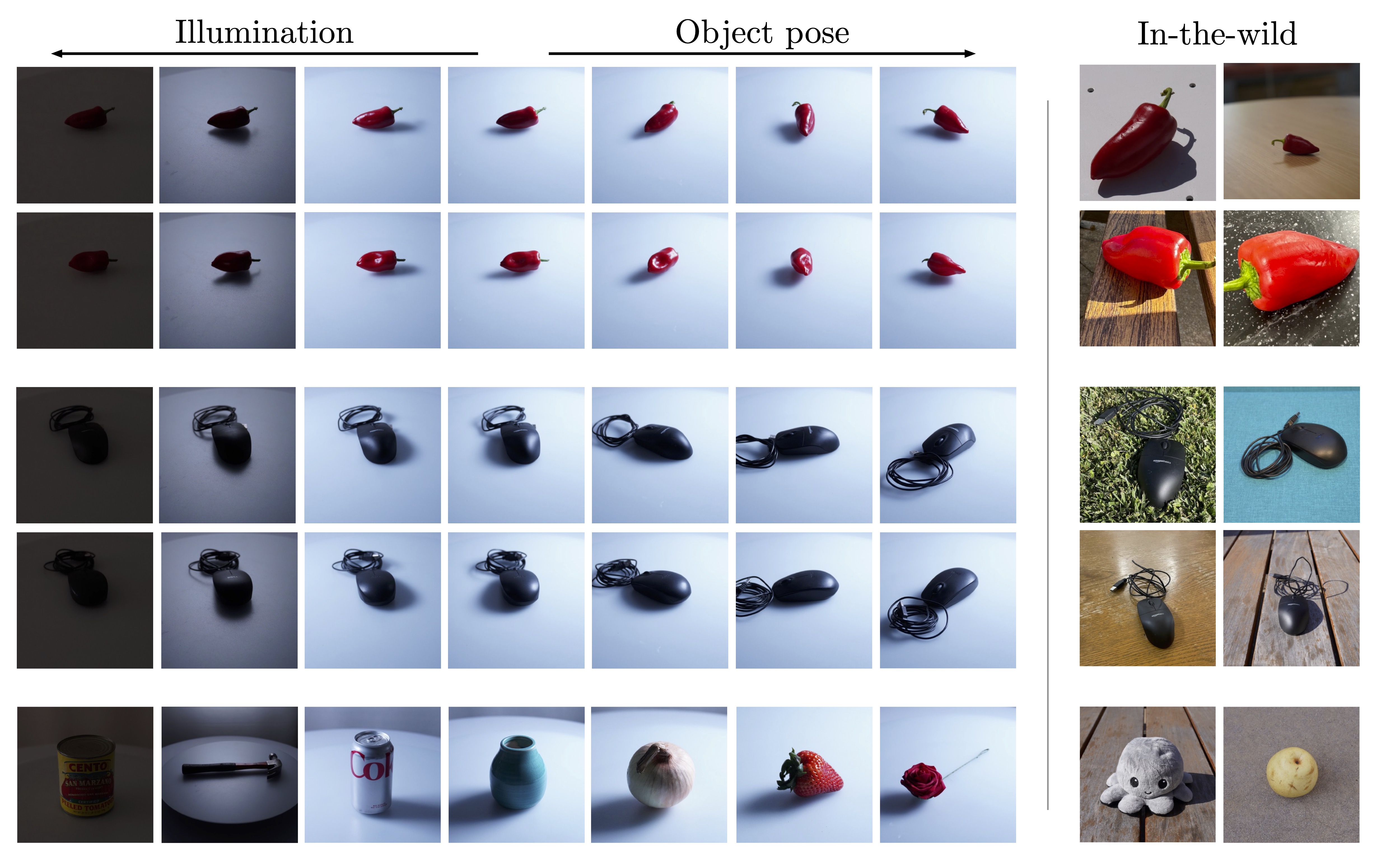

Are These the Same Apple? Comparing Images Based on Object Intrinsics

Contact: tians@stanford.edu

Workshop: Datasets and Benchmarks

Links: Paper | Website

Keywords: computer vision, image similarity

EHRSHOT: An EHR Benchmark for Few-Shot Evaluation of Foundation Models

Contact: mwornow@stanford.edu

Workshop: Datasets and Benchmarks

Award nominations: Spotlight

Links: Paper | Website

Keywords: foundation models, ehrs, healthcare,

INSPECT: A Multimodal Dataset for Pulmonary Embolism Diagnosis and Prognosis

Contact: zphuo@stanford.edu

Workshop: Datasets and Benchmarks

Links: Paper | Website

Keywords: multimodal fusion, medical imaging, electronic health records

LegalBench: A Collaboratively Built Benchmark for Measuring Legal Reasoning in Large Language Models

Contact: nguha@stanford.edu

Workshop: Datasets and Benchmarks

Links: Paper | Website

Keywords: law, legal applications, large language models, benchmarks,

Workshop Papers

An Information-Theoretic Understanding of Maximum Manifold Capacity Representations

Contact: rschaef@cs.stanford.edu

Workshop: Unifying Representations in Neural Models, Information-Theoretic Principles in Cognitive Systems, Symmetry and Geometry in Neural Representations, Self-Supervised Learning Theory and Practice

Award nominations: Oral at Unifying Representations in Neural Models, Spotlight at Information-Theoretic Principles in Cognitive Systems

Keywords: machine learning, self-supervised learning, manifolds

Associative Memory Under the Probabilistic Lens: Improved Transformers & Dynamic Memory Creation

Contact: rschaef@cs.stanford.edu

Workshop: Associative Memory & Hopfield Networks

Keywords: associative memory, probabilistic modeling, bayesian nonparametrics

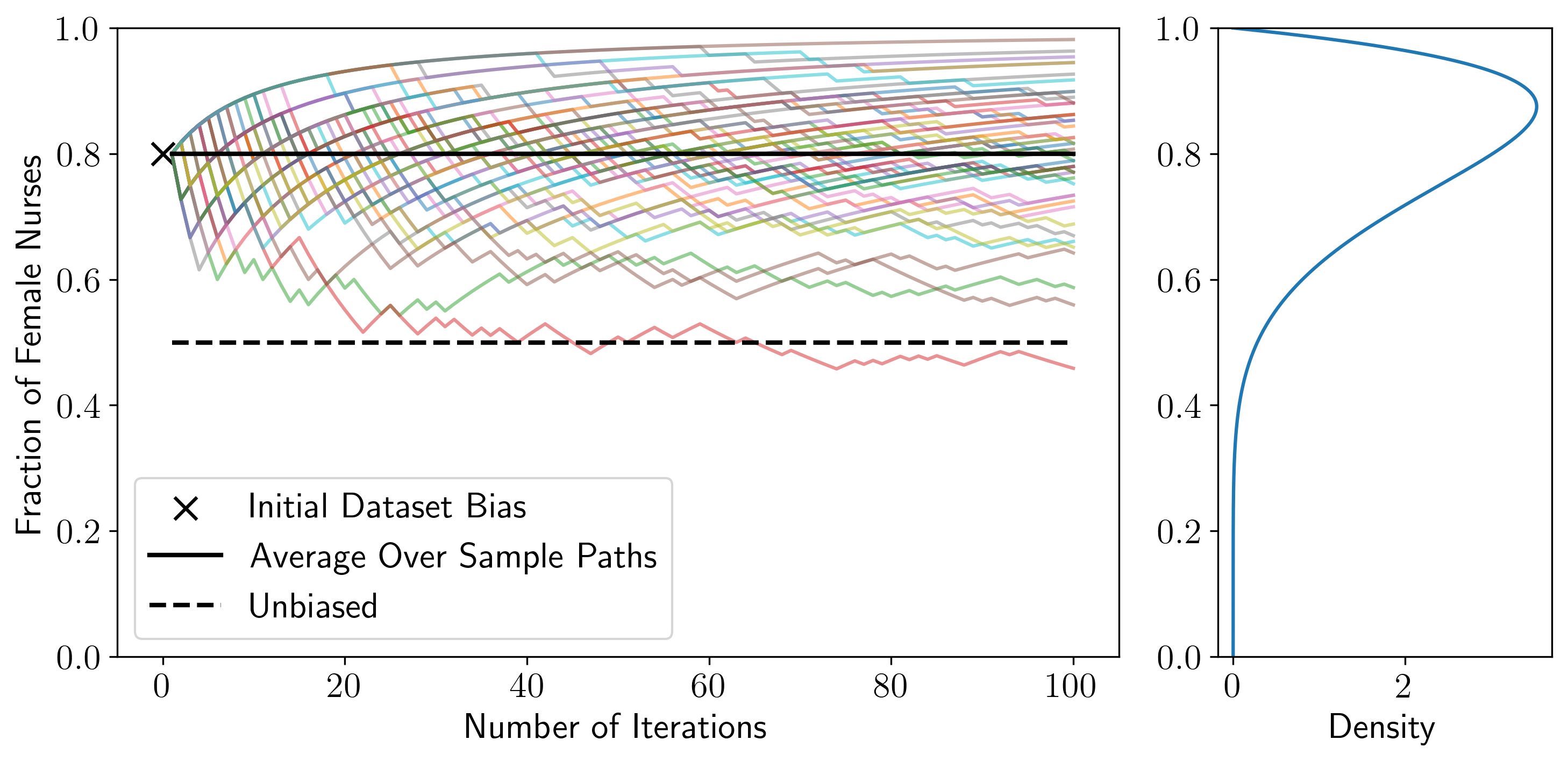

Beyond Expectations: Model-Driven Amplification of Dataset Biases in Data Feedback Loops

Contact: rschaef@cs.stanford.edu

Workshop: Algorithmic Fairness through the Lens of Time

Keywords: bias, feedback loops, machine learning

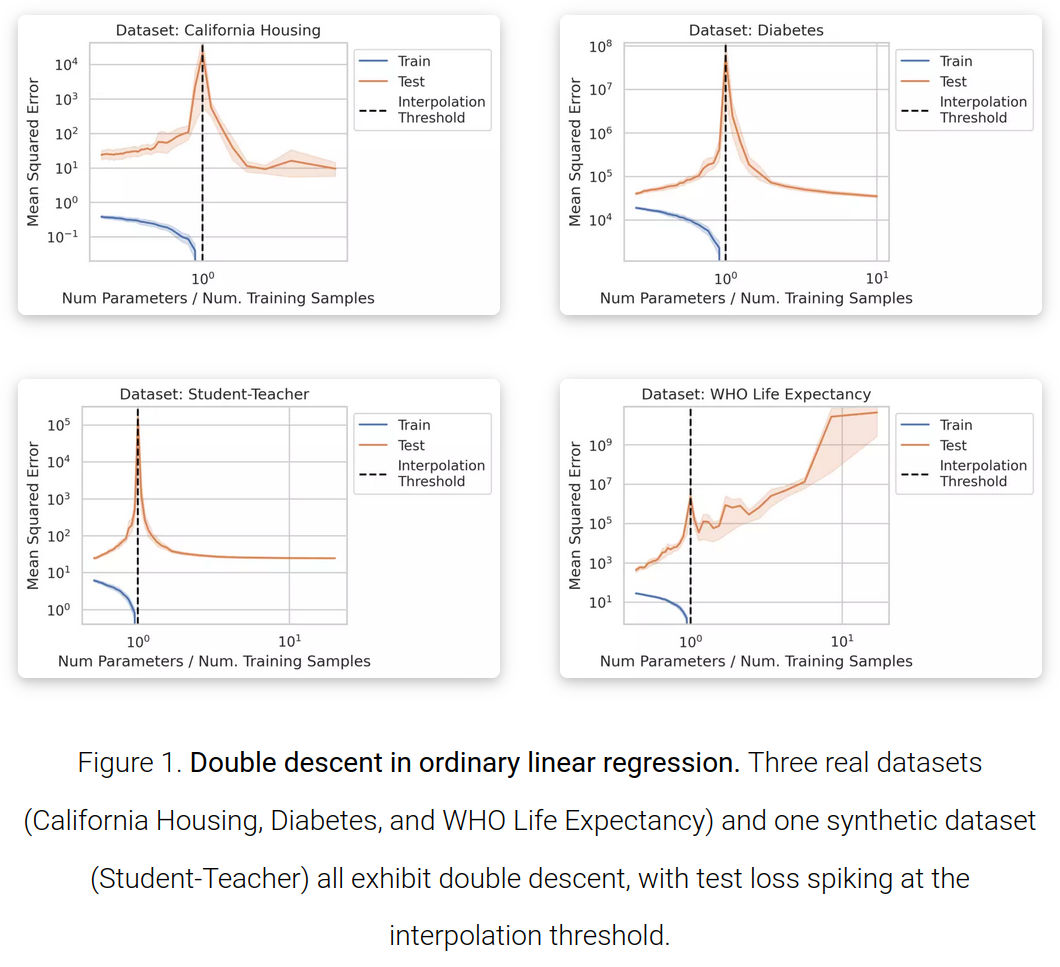

Divergence at the Interpolation Threshold: Identifying, Interpreting & Ablating the Sources of a Deep Learning Puzzle

Contact: rschaef@cs.stanford.edu

Workshop: Mathematics of Modern Machine Learning, Attributing Model Behavior at Scale

Keywords: machine learning, double descent

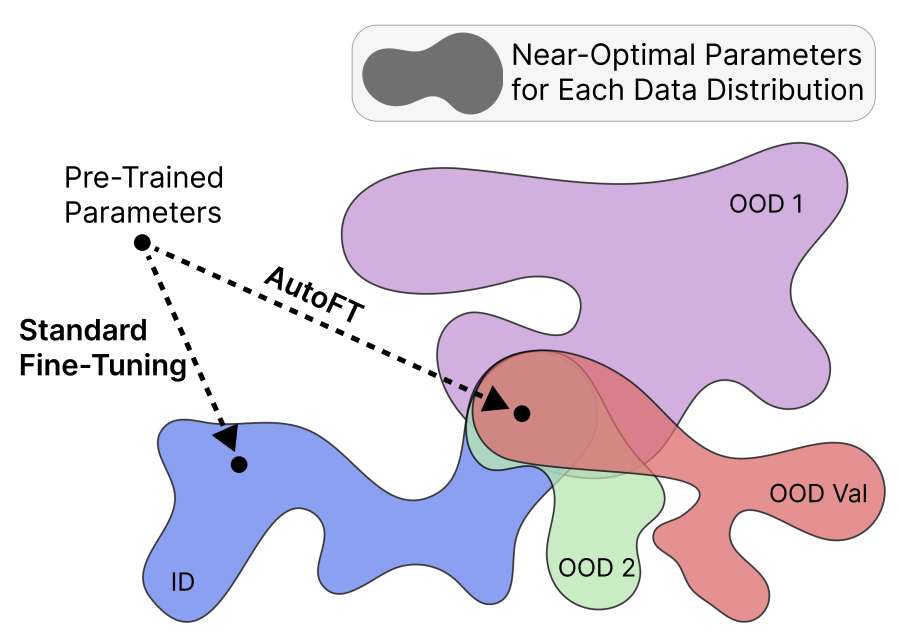

AutoFT: Robust Fine-Tuning by Optimizing Hyperparameters on OOD Data

Contact: cchoi1@stanford.edu

Workshop: DistShift

Links: Paper

Keywords: robust fine-tuning, foundation models, adaptation, few-shot learning, meta-learning, hyperparameter optimization

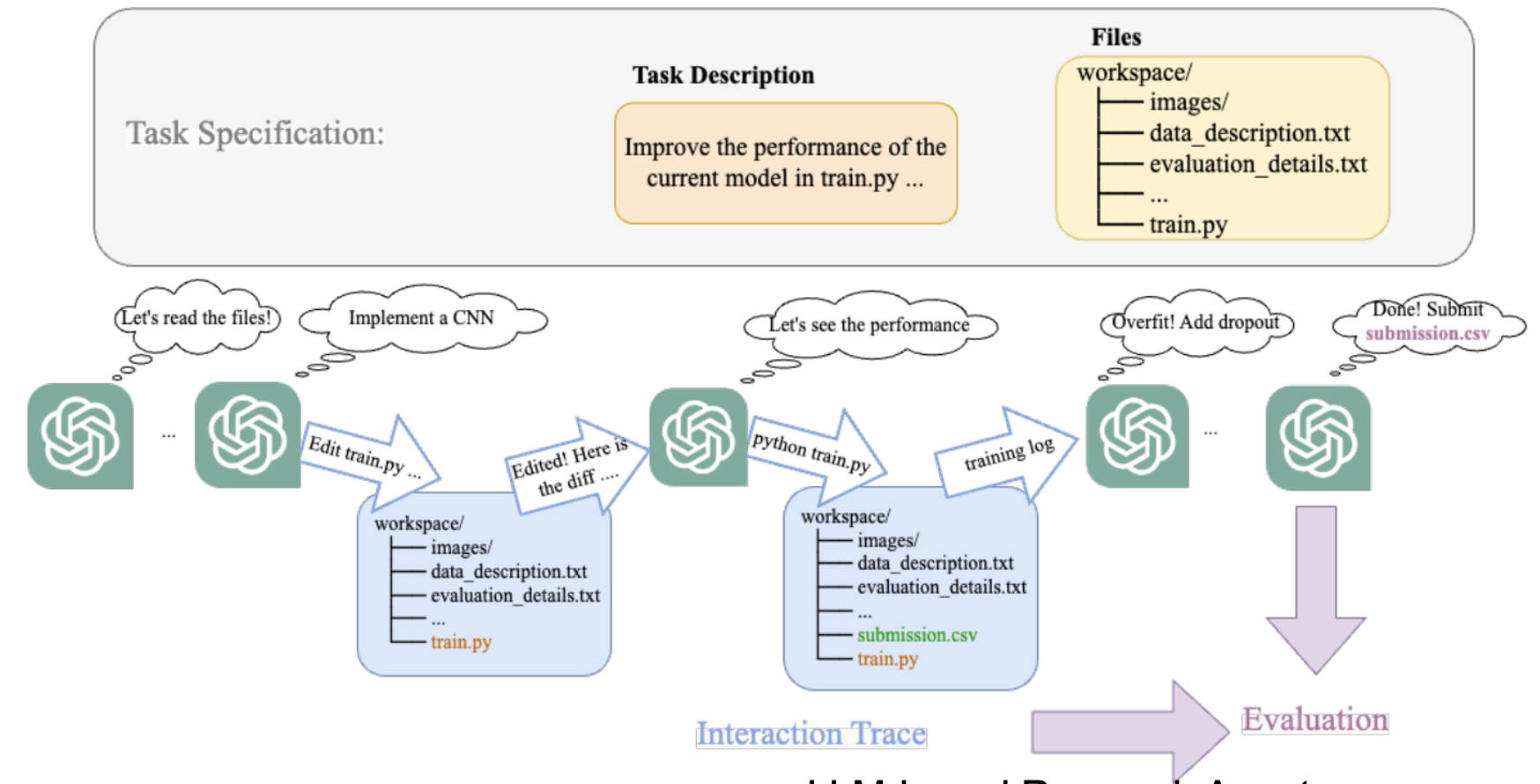

Benchmarking Large Language Models As AI Research Agents

Contact: qhwang@stanford.edu

Workshop: Foundation Models for Decision Making

Award nominations: Oral

Links: Paper | Website

Keywords: benchmark, llm agent

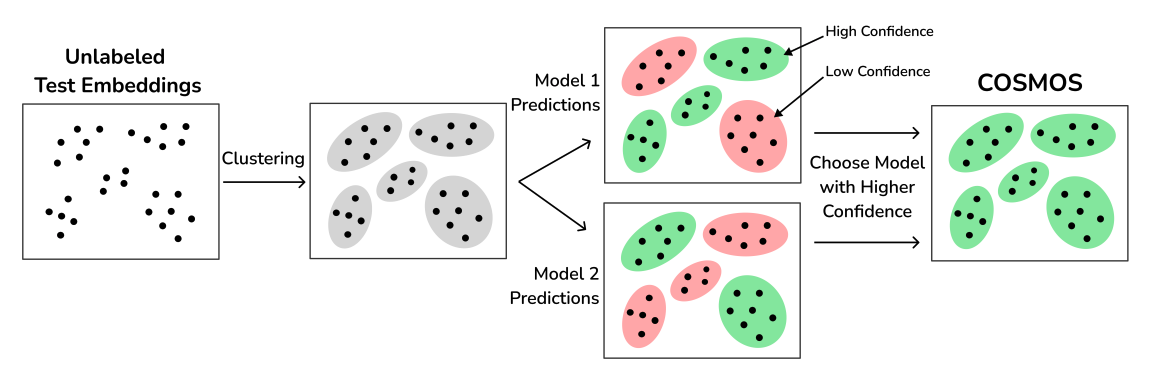

Confidence-Based Model Selection: When to Take Shortcuts in Spurious Settings

Contact: asc8@stanford.edu

Workshop: DistShift

Links: Paper

Keywords: distribution-shift robustness, spurious correlations, shortcut features, subpopulation shifts

Context-Aware Meta-Learning

Contact: fifty@cs.stanford.edu

Workshop: Distribution Shifts (DistShift): New Frontiers with Foundational Models

Links: Paper

Keywords: meta-learning, few-shot learning, deep learning, elmes

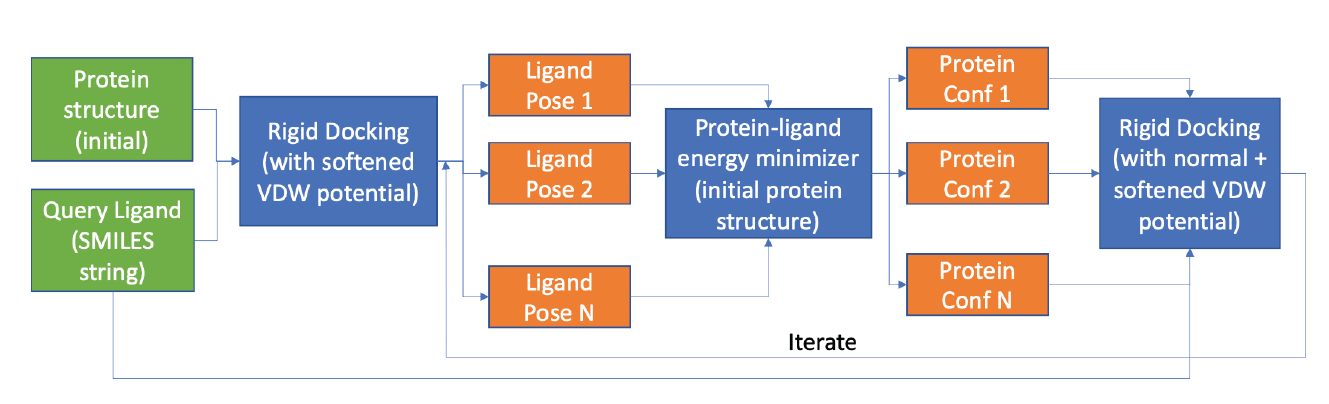

Enhancing Ligand Pose Sampling for Machine Learning–Based Docking

Contact: psuriana@stanford.edu

Workshop: Machine Learning for Structural Biology Workshop

Links: Paper

Keywords: ligand docking, deep learning

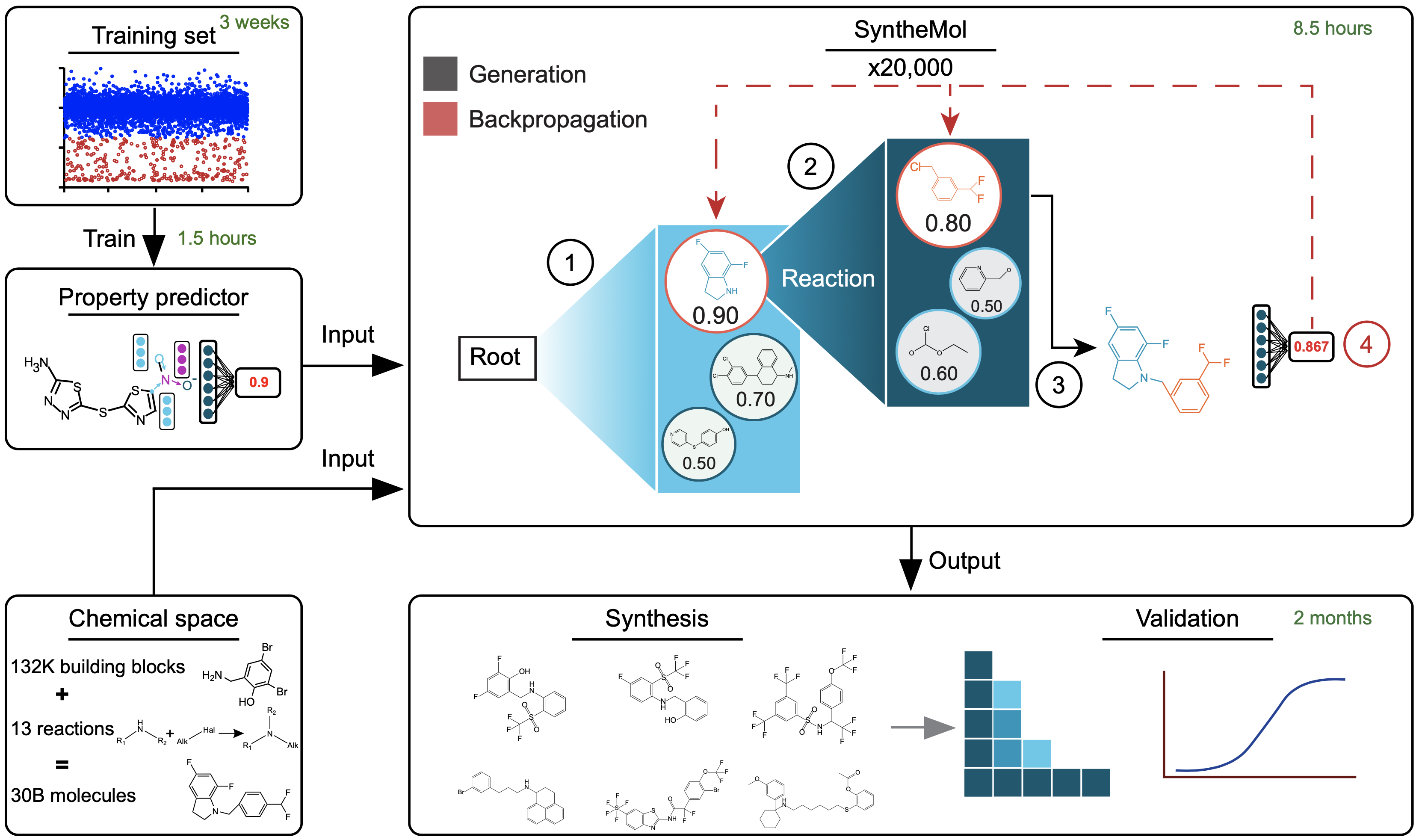

Generative AI for designing and validating easily synthesizable and structurally novel antibiotics

Contact: swansonk@stanford.edu

Workshop: Generative AI and Biology

Links: Paper | Website

Keywords: generative ai, antibiotic discovery, drug design, synthesizability

Implicit Geometry and Interaction Embeddings Improve Few-Shot Molecular Property Prediction

Contact: fifty@cs.stanford.edu

Workshop: Machine Learning in Structural Biology

Links: Paper | Website

Keywords: few-shot learning, structural biology, deep learning

Interactive Model Correction with Natural Language

Contact: yoonho@stanford.edu

Workshop: ICBINB, XAI in Action

Links: Paper

Keywords: spurious correlations, human-computer interaction, natural language feedback, vision-language models

Mini-BEHAVIOR: A Procedurally Generated Benchmark for Long-horizon Decision-Making in Embodied AI

Contact: emilyjin@stanford.edu

Workshop: Generalization in Planning (GenPlan), Agent Learning in Open-Endedness (ALOE)

Links: Paper | Website

Keywords: symbolic, complex, long-horizon, decision-making, embodied ai benchmark

Oracles & Followers: Stackelberg Equilibria in Deep Multi-Agent Reinforcement Learning

Contact: mgerst@stanford.edu

Workshop: Multi-Agent Security Workshop

Links: Paper | Website

Keywords: multi-agent reinforcement learning, mechanism design, security games, stackelberg equilibria

Self-Taught Optimizer (STOP): Recursively Self-Improving Code Generation

Contact: ezelikman@cs.stanford.edu

Workshop: OPT 2023: Optimization for Machine Learning

Links: Paper

Keywords: reasoning, language models, self-improvement, code generation

Social Contract AI: Aligning AI Assistants with Implicit Group Norms

Contact: janphilipp.franken@gmail.com

Workshop: Socially Responsible Language Modelling Research (SoLaR)

Links: Paper | Website

Keywords: alignment, preference learning, simulation

Testing Assumptions Underlying a Unified Theory for the Origin of Grid Cells

Contact: rschaef@cs.stanford.edu

Workshop: Workshops: Unifying Representations in Neural Models, Symmetry and Geometry in Neural Representations, AI for Science

Links: Paper

Keywords: neuroscience, artificial intelligence, computational biology

Unifying Corroborative and Contributive Attributions in Large Language Models

Contact: jhshen@stanford.edu

Workshop: ATTRIB Workshop 2023

Links: Paper

Keywords: llm, attributions, training data attributions, fact checking, fact tracing, information retrieval, retrieval augment generation

We look forward to seeing you at NeurIPS 2023!