The 58th Annual Meeting of the Association for Computational Linguistics (ACL 2020) is being hosted virtually this week. We’re excited to share all the work from SAIL that’s being presented, and you’ll find links to papers, videos, and blog posts below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

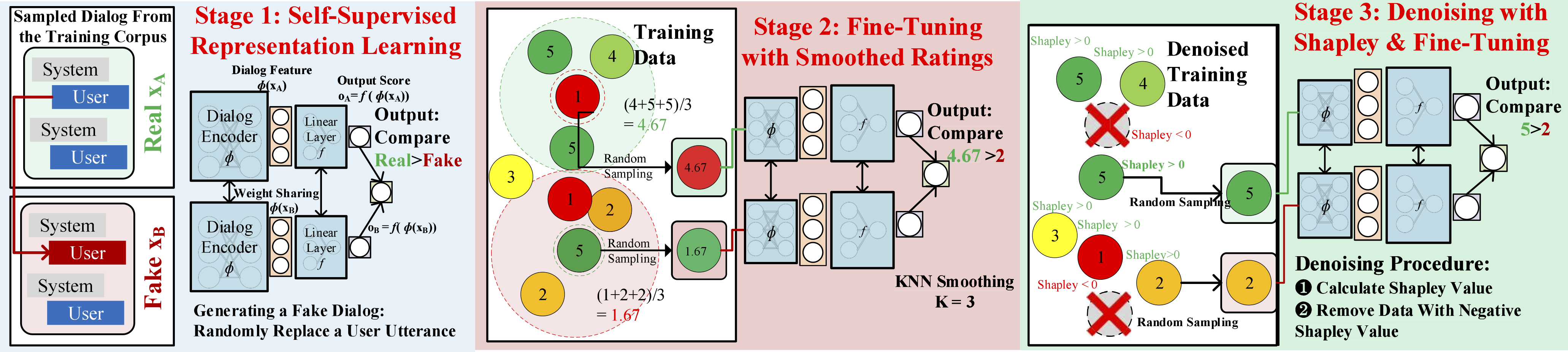

Beyond User Self-Reported Likert Scale Ratings: A Comparison Model for Automatic Dialog Evaluation

Contact: wxliang@stanford.edu

Keywords: dialog, automatic dialog evaluation, user experience

Contextual Embeddings: When Are They Worth It?

Contact: simarora@stanford.edu

Links: Paper | Video

Keywords: contextual embeddings, pretraining, benefits of context

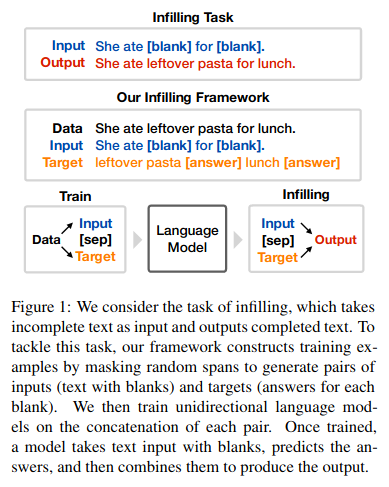

Enabling Language Models to Fill in the Blanks

Contact: cdonahue@cs.stanford.edu

Links: Paper | Blog Post | Video

Keywords: natural language generation, infilling, fill in the blanks, language models

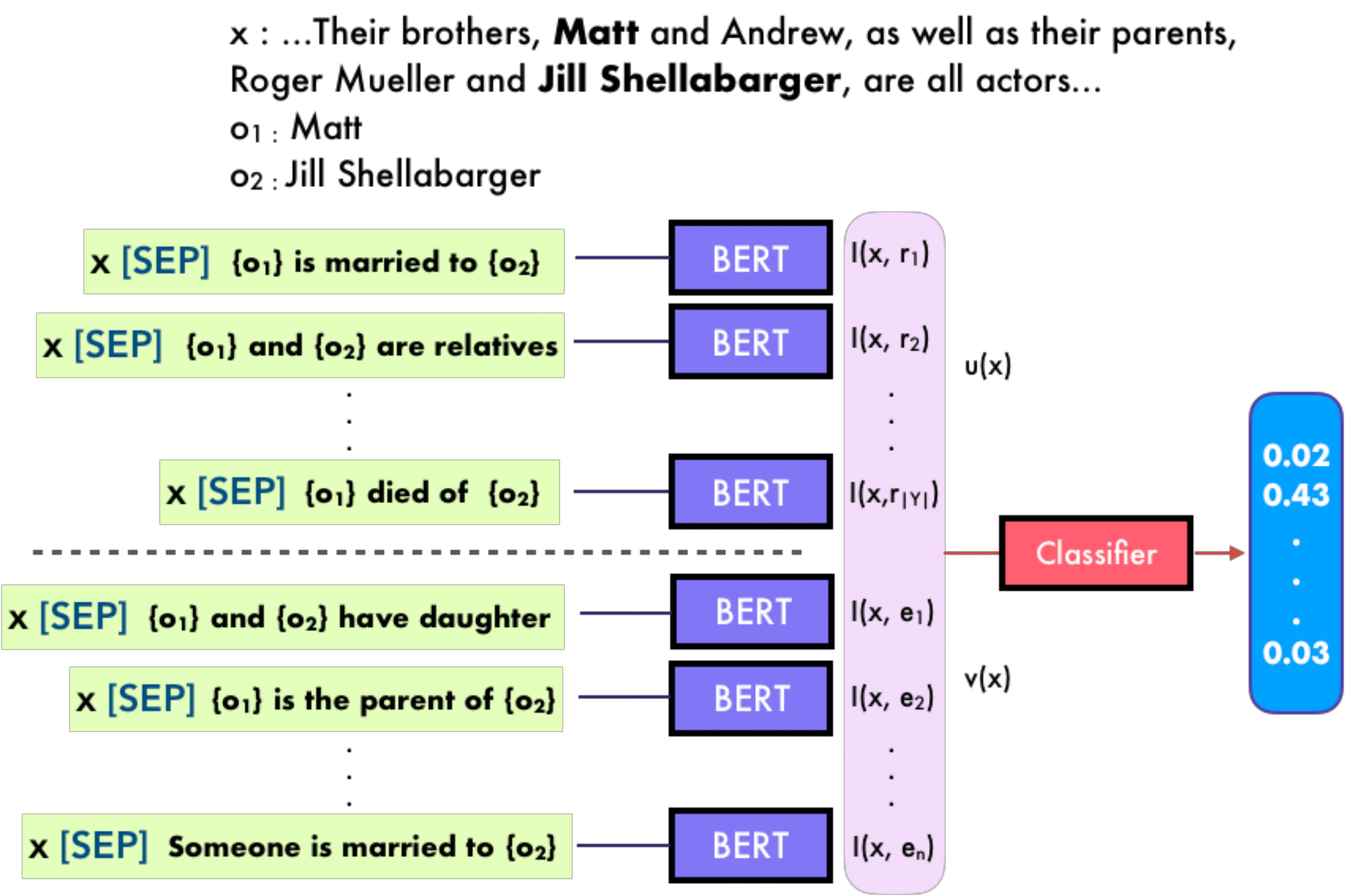

ExpBERT: Representation Engineering with Natural Language Explanations

Contact: smurty@cs.stanford.edu

Links: Paper | Video

Keywords: language explanations, bert, relation extraction, language supervision

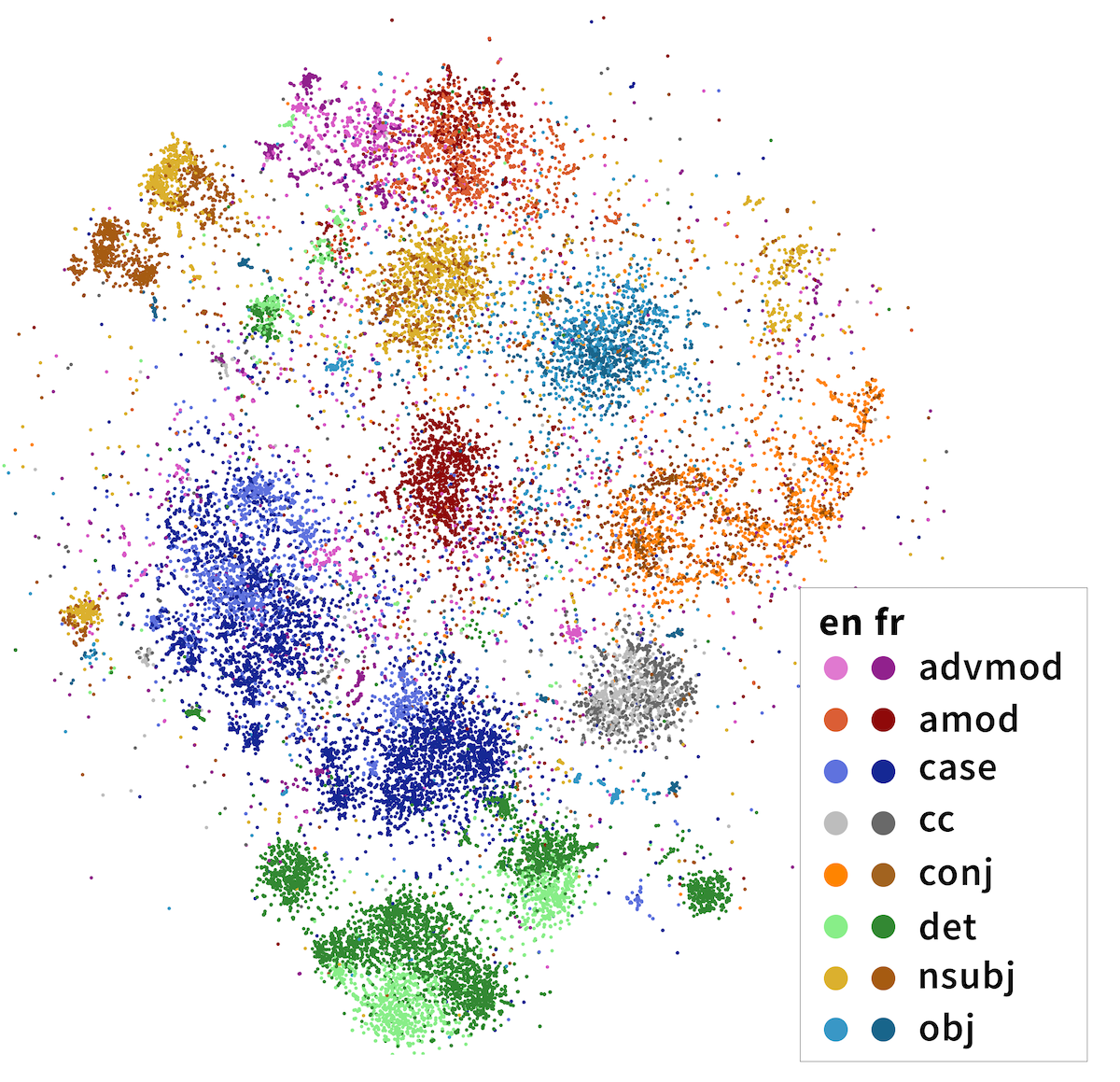

Finding Universal Grammatical Relations in Multilingual BERT

Contact: ethanchi@cs.stanford.edu

Links: Paper | Blog Post

Keywords: analysis, syntax, multilinguality

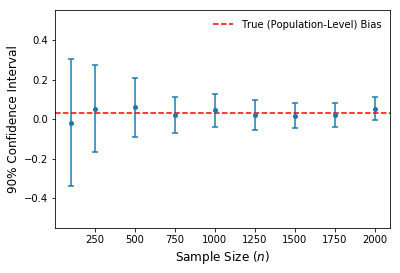

Is Your Classifier Actually Biased? Measuring Fairness under Uncertainty with Bernstein Bounds

Contact: kawin@stanford.edu

Links: Paper

Keywords: fairness, bias, equal opportunity, ethics, uncertainty

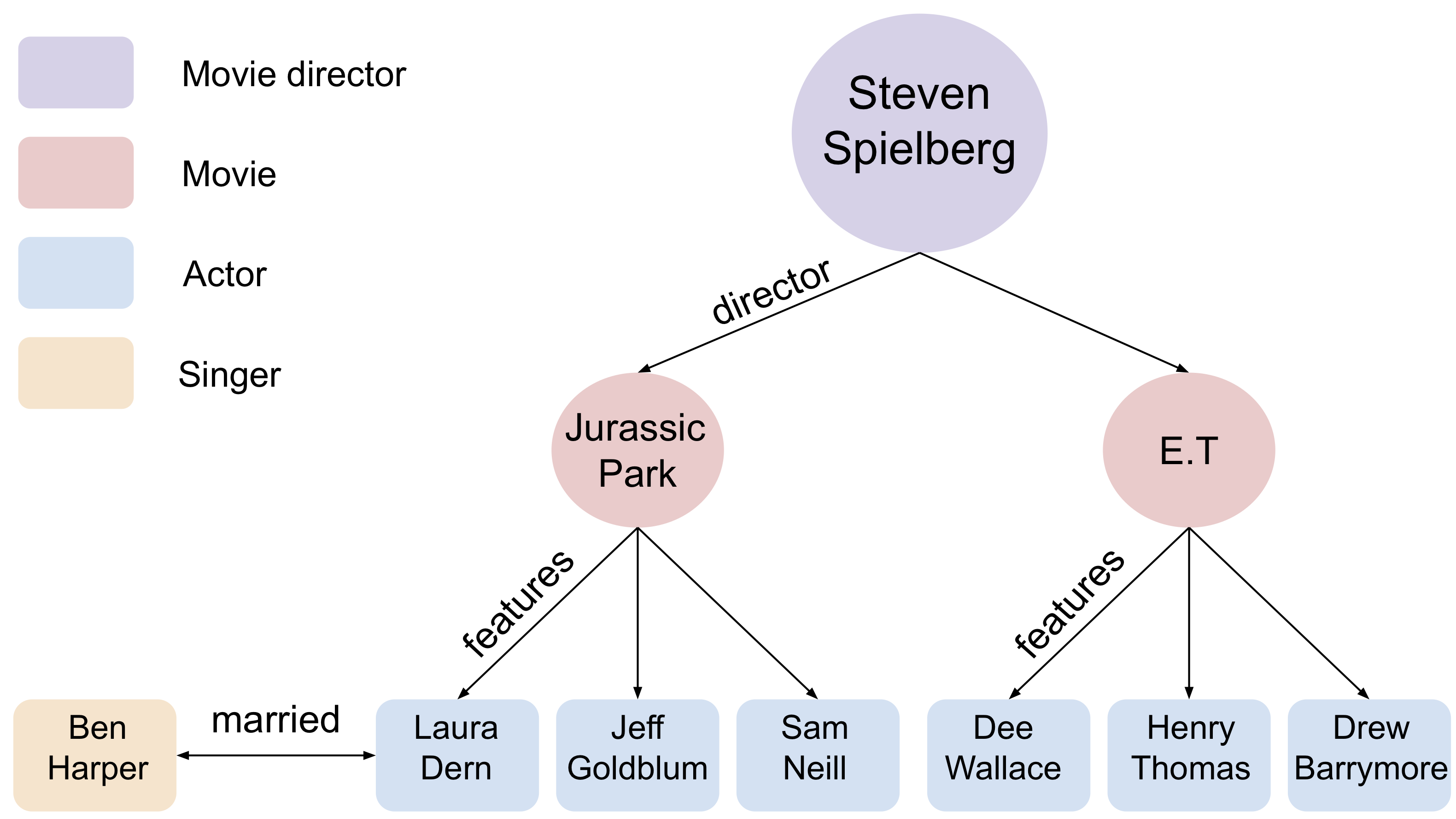

Low-Dimensional Hyperbolic Knowledge Graph Embeddings

Contact: chami@stanford.edu

Links: Paper | Video

Keywords: knowledge graphs, hyperbolic embeddings, link prediction

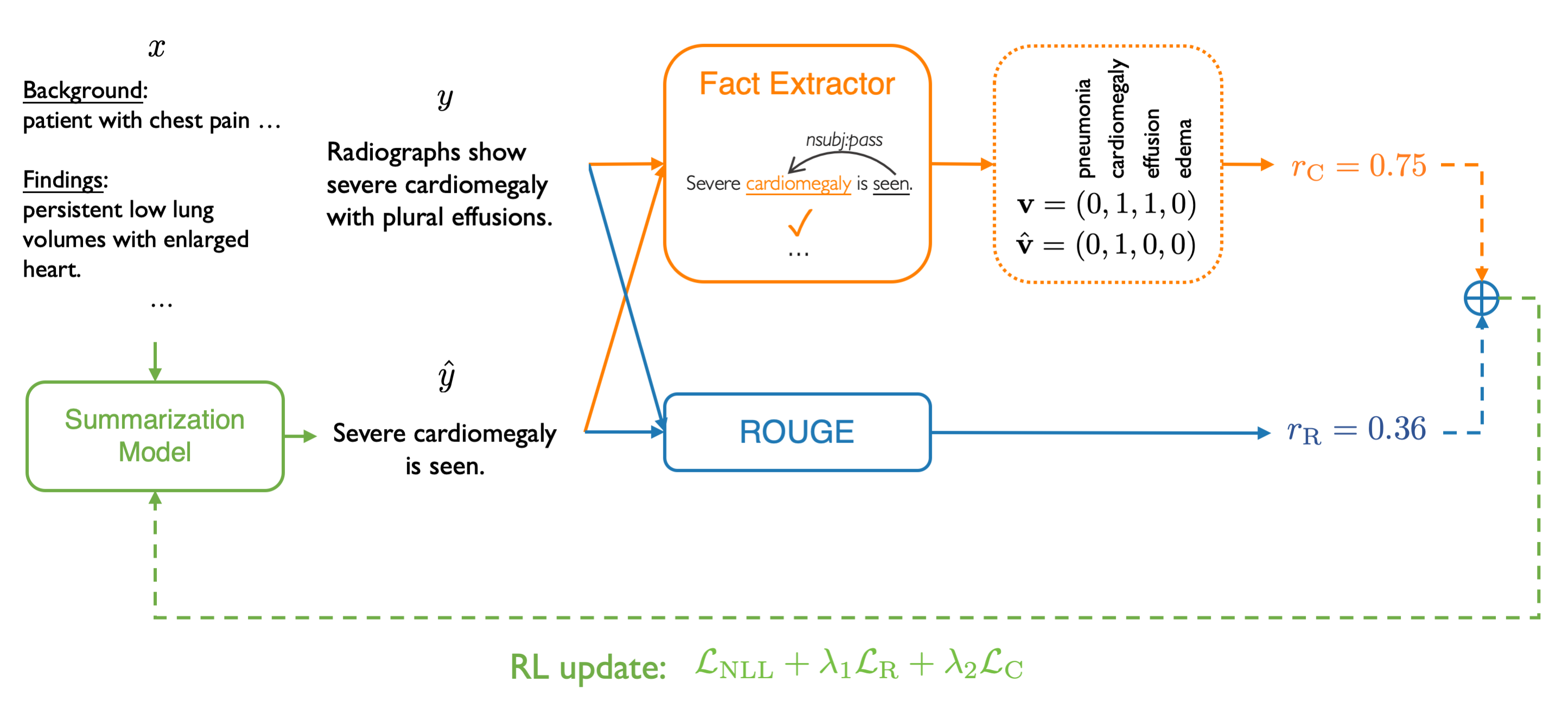

Optimizing the Factual Correctness of a Summary: A Study of Summarizing Radiology Reports

Contact: yuhao.zhang@stanford.edu

Links: Paper

Keywords: nlp, text summarization, reinforcement learning, medicine, radiology report

Orthogonal Relation Transforms with Graph Context Modeling for Knowledge Graph Embedding

Contact: jhuang18@stanford.edu

Links: Paper | Video

Keywords: orthogonal transforms, knowledge graph embedding

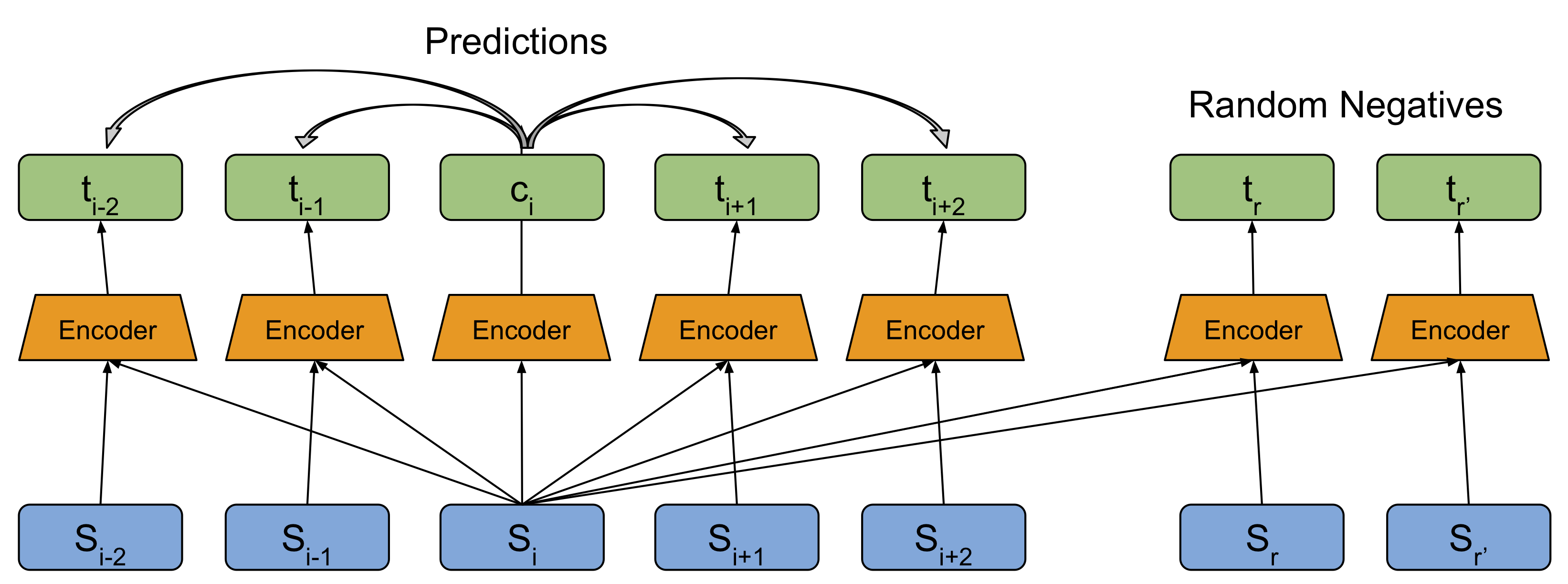

Pretraining with Contrastive Sentence Objectives Improves Discourse Performance of Language Models

Contact: daniter@stanford.edu

Links: Paper

Keywords: discourse coherence, language model pretraining

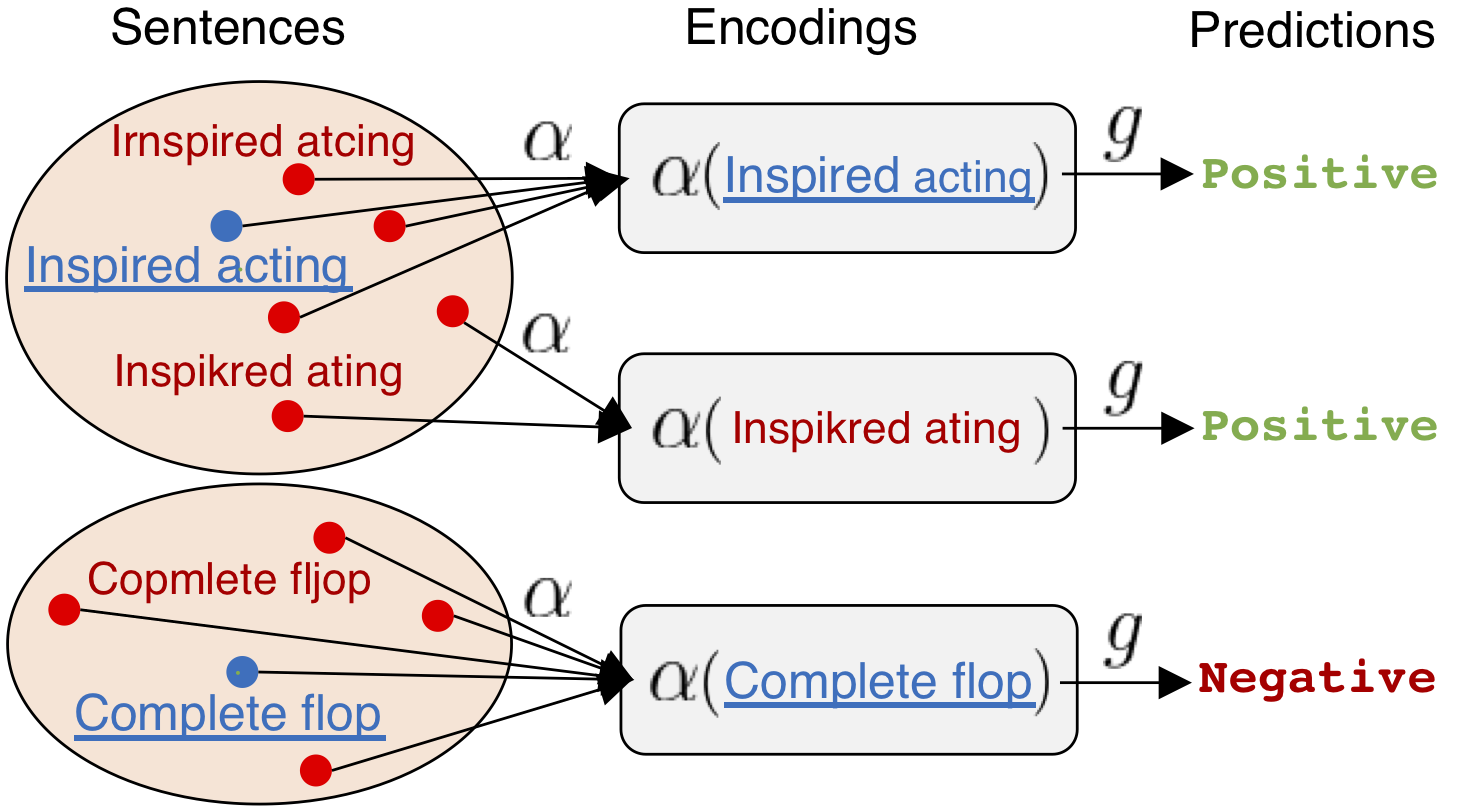

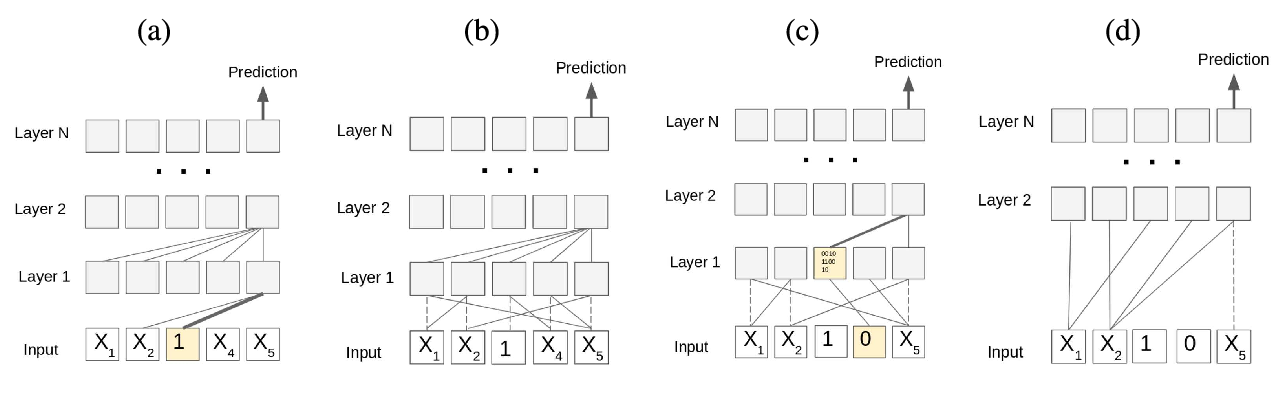

Robust Encodings: A Framework for Combating Adversarial Typos

Contact: erjones@stanford.edu

Links: Paper

Keywords: nlp, robustness, adversarial robustness, typos, safe ml

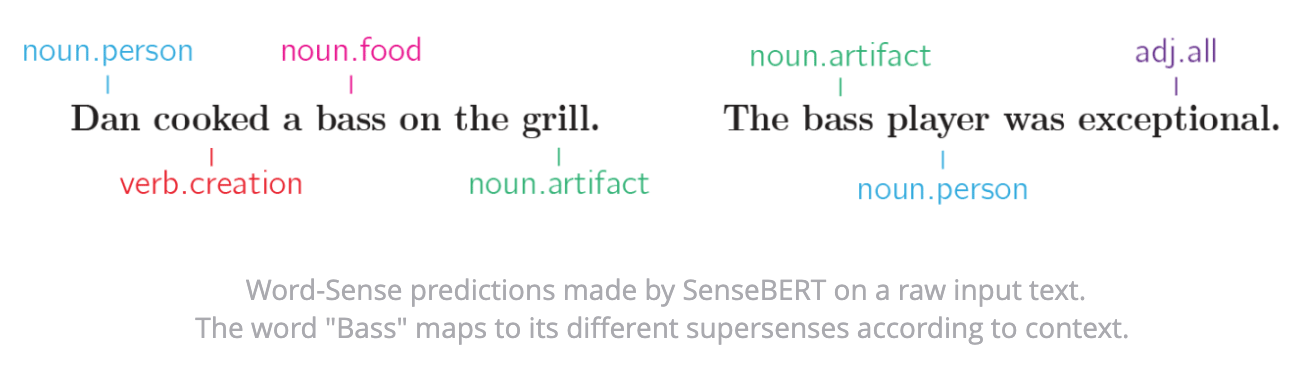

SenseBERT: Driving Some Sense into BERT

Contact: shoham@cs.stanford.edu

Links: Paper | Blog Post

Keywords: language models, semantics

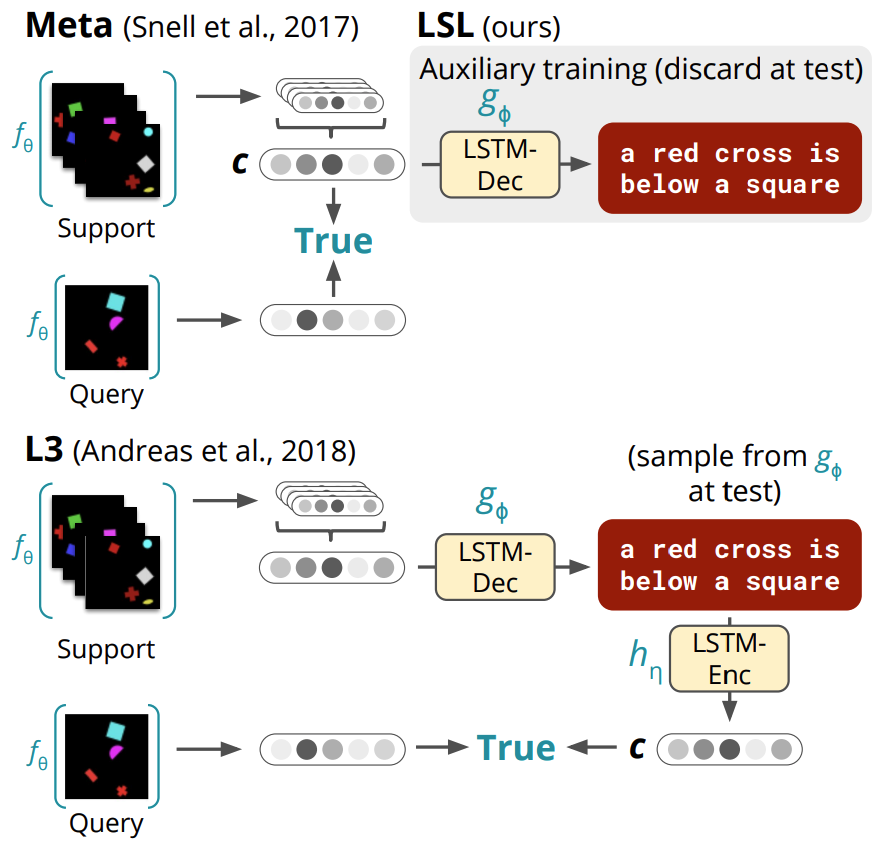

Shaping Visual Representations with Language for Few-shot Classification

Contact: muj@stanford.edu

Links: Paper

Keywords: grounding, language supervision, vision, few-shot learning, meta-learning, transfer

Stanza: A Python Natural Language Processing Toolkit for Many Human Languages

Contact: pengqi@cs.stanford.edu

Links: Paper

Keywords: natural language processing, multilingual, data-driven, neural networks

Theoretical Limitations of Self-Attention in Neural Sequence Models

Contact: mhahn2@stanford.edu

Links: Paper

Keywords: theory, transformers, formal languages

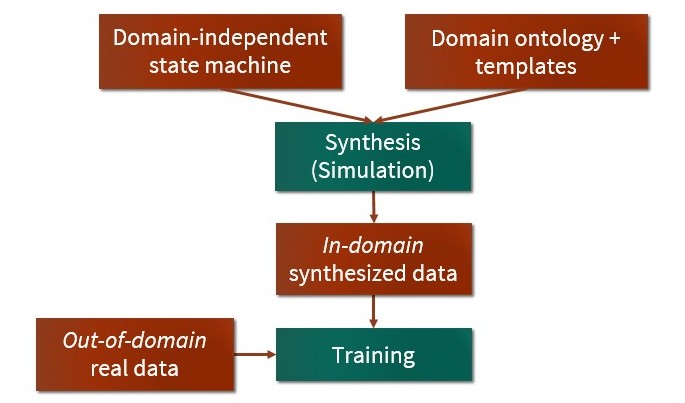

Zero-Shot Transfer Learning with Synthesized Data for Multi-Domain Dialogue State Tracking

Contact: gcampagn@stanford.edu

Links: Paper

Keywords: dialogue state tracking, multiwoz, zero-shot, data programming, pretraining

We look forward to seeing you at ACL 2020!