The Conference on Robot Learning (CoRL 2022) will take place next week. We’re excited to share all the work from the Stanford AI Lab (SAIL) that will be presented; you’ll find links to papers, videos, and blogs below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

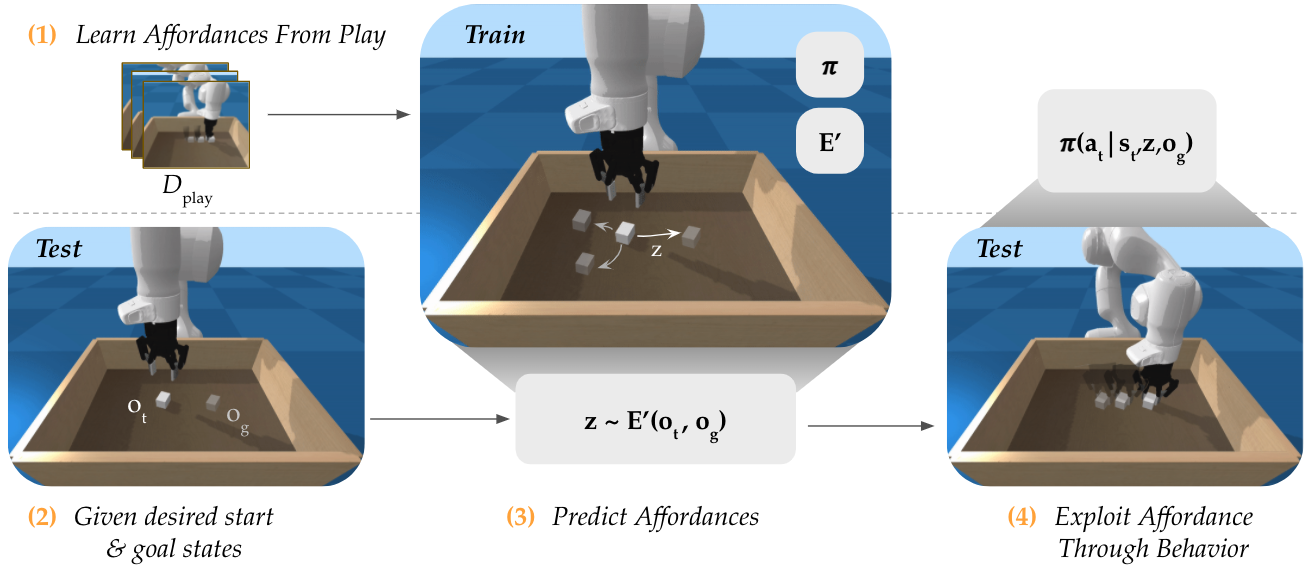

PLATO: Predicting Latent Affordances Through Object-Centric Play

Authors: Suneel Belkhale, Dorsa Sadigh

Contact: dorsa@stanford.edu

Links: Paper | Video

Keywords: human play data, object affordance learning, imitation learning

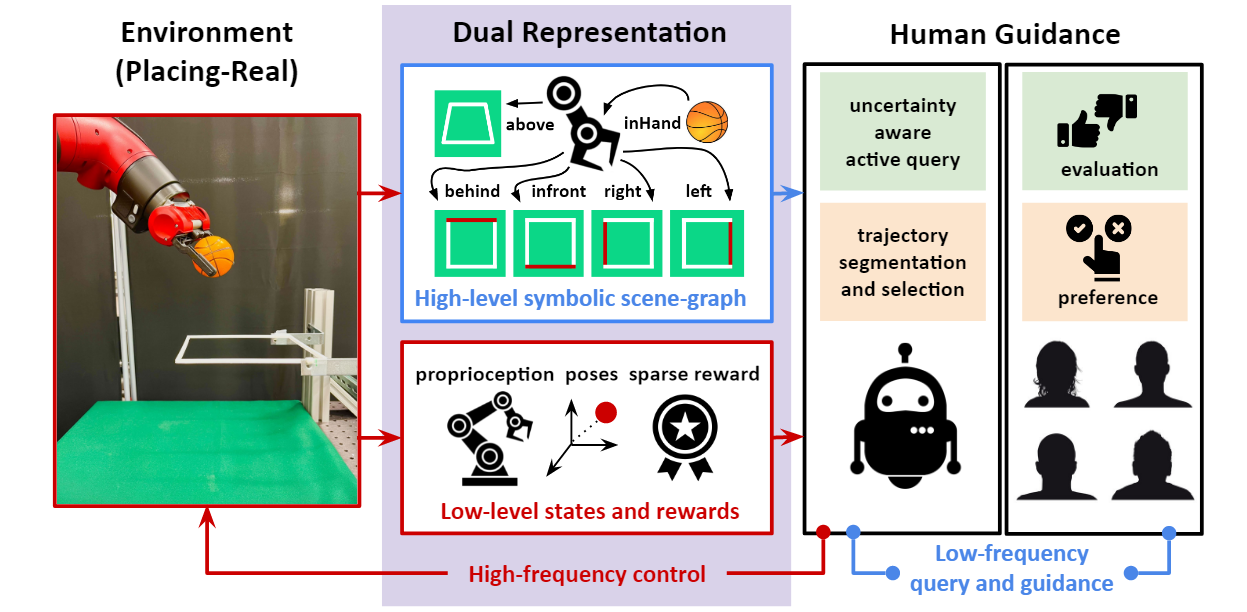

A Dual Representation Framework for Robot Learning with Human Guidance

Contact: zharu@stanford.edu

Links: Paper

Keywords: human guidance, evaluative feedback, preference learning

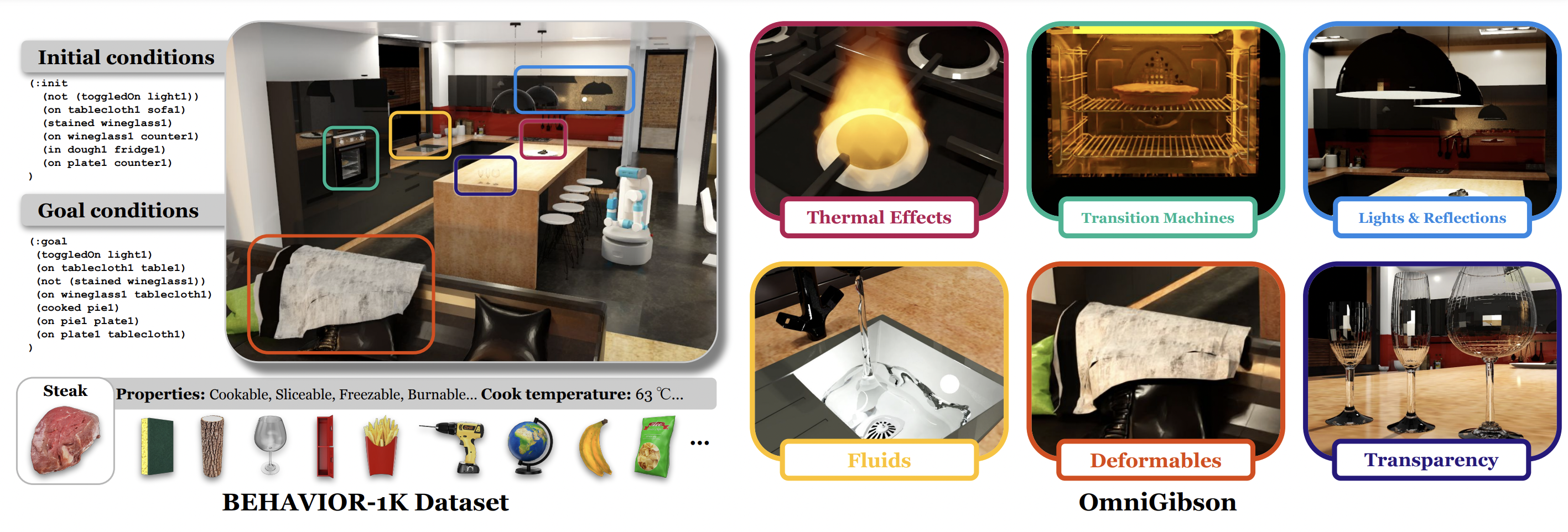

BEHAVIOR-1K: A Benchmark for Embodied AI with 1,000 Everyday Activities and Realistic Simulation

Contact: zharu@stanford.edu

Award nominations: Best Paper

Links: Paper | Website

Keywords: embodied AI benchmark, everyday activities, mobile manipulation

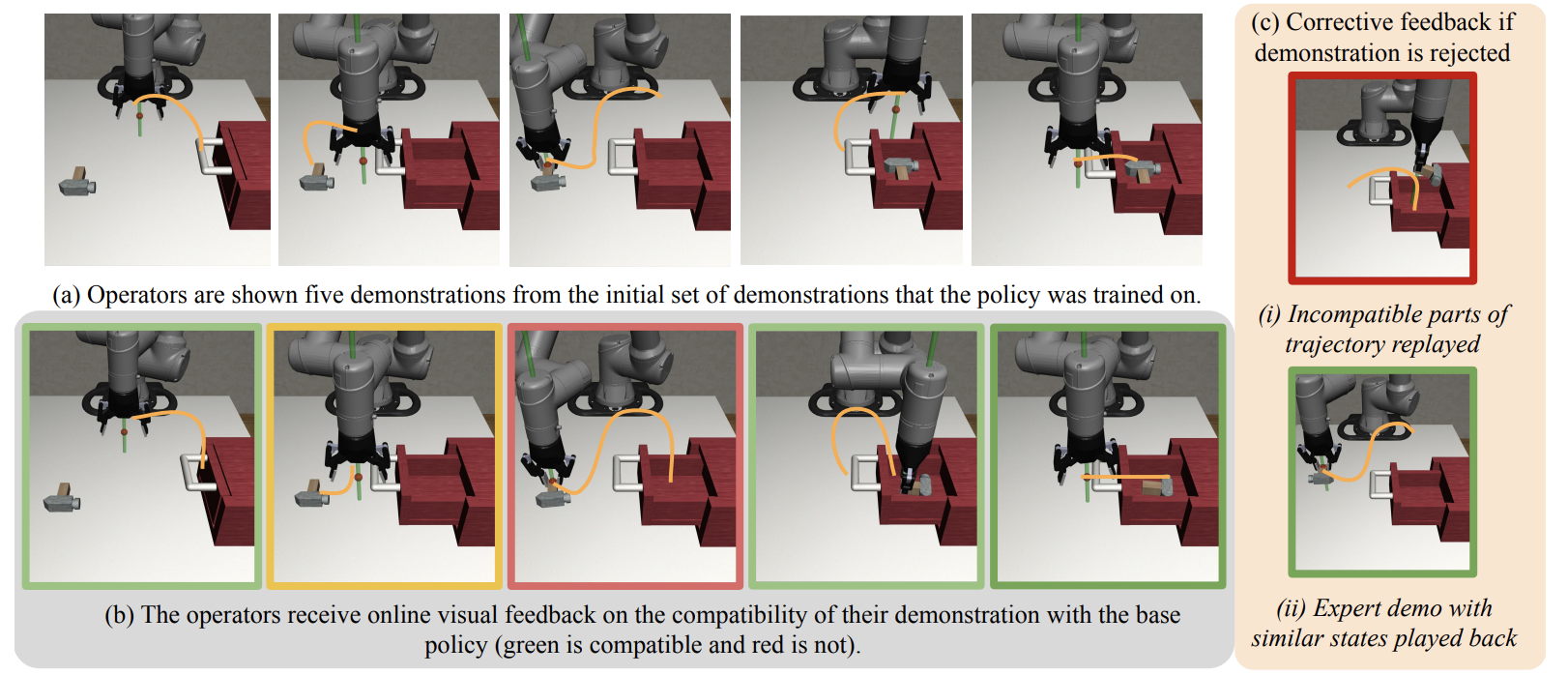

Eliciting Compatible Demonstrations for Multi-Human Imitation Learning

Contact: kanishk.gandhi@stanford.edu

Links: Paper | Website

Keywords: imitation learning, active learning, human-robot interaction

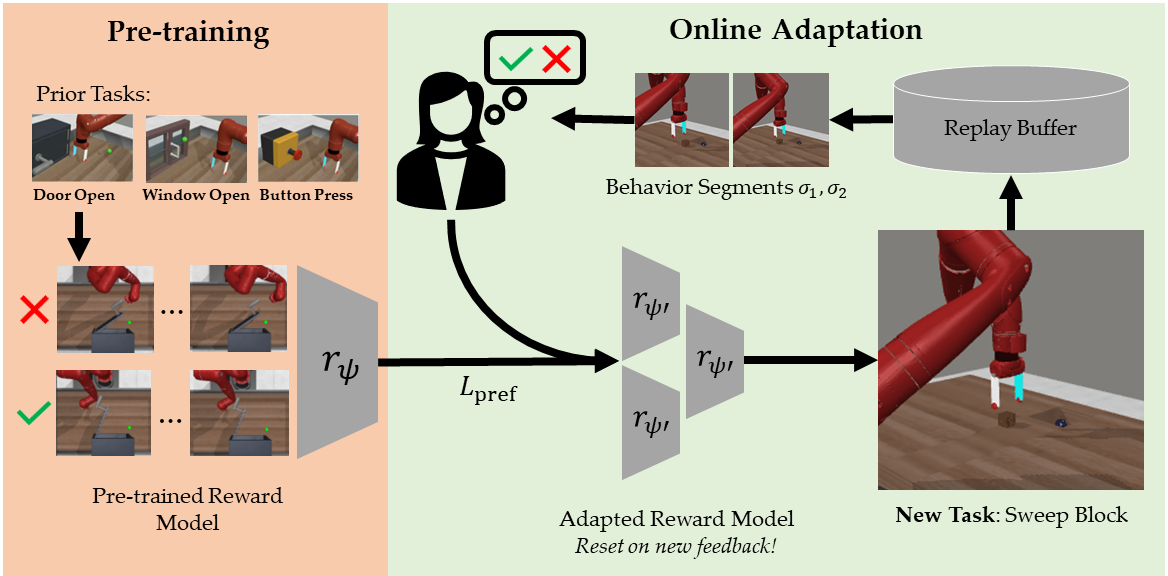

Few-Shot Preference Learning for Human-in-the-Loop RL

Contact: jhejna@stanford.edu

Links: Paper | Video | Website

Keywords: preference learning, interactive learning, multi-task learning, human-in-the-loop

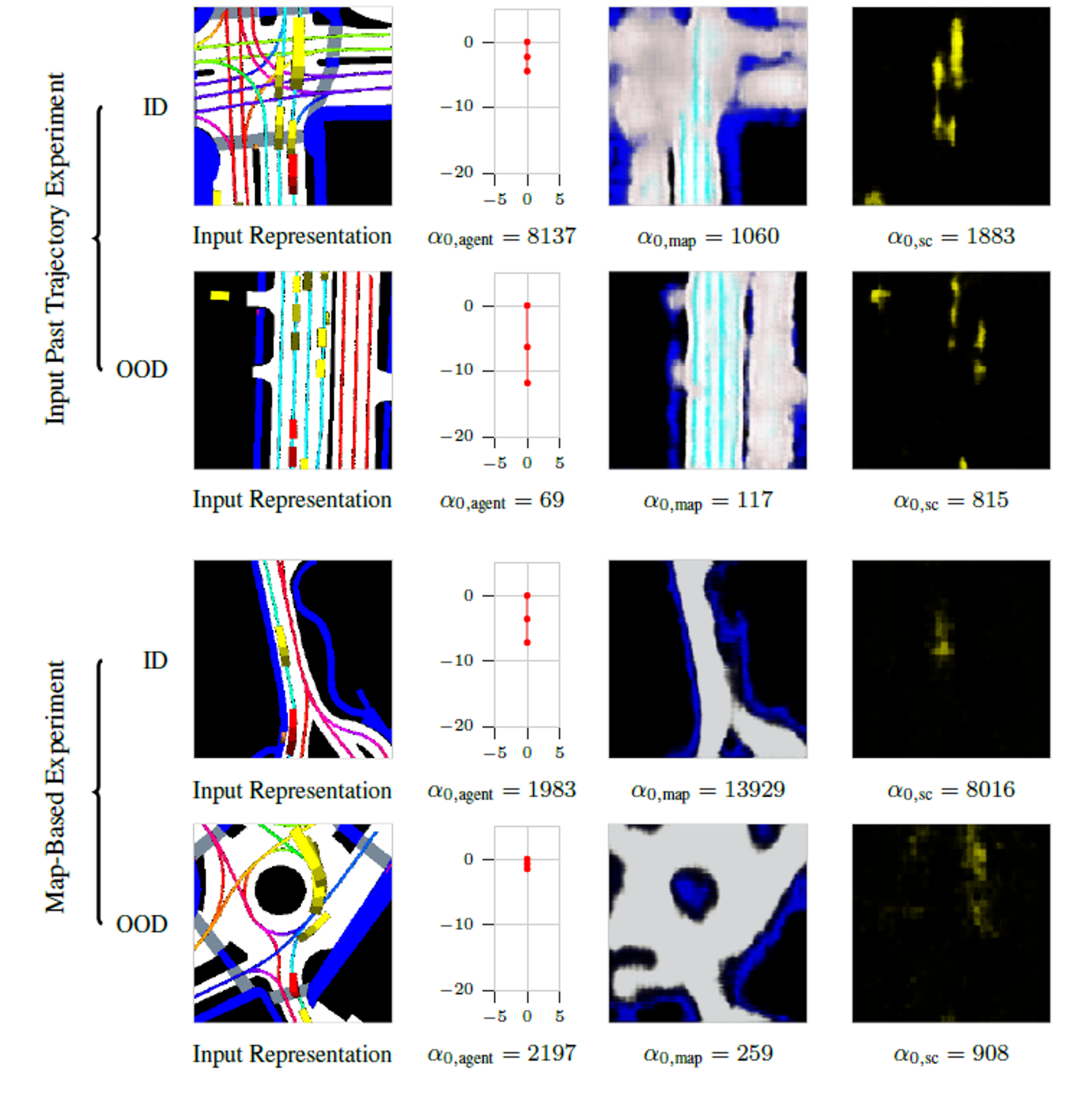

Interpretable Self-Aware Neural Networks for Robust Trajectory Prediction

Contact: mitkina@stanford.edu

Links: Paper | Website

Keywords: autonomous vehicles, trajectory prediction, distribution shift

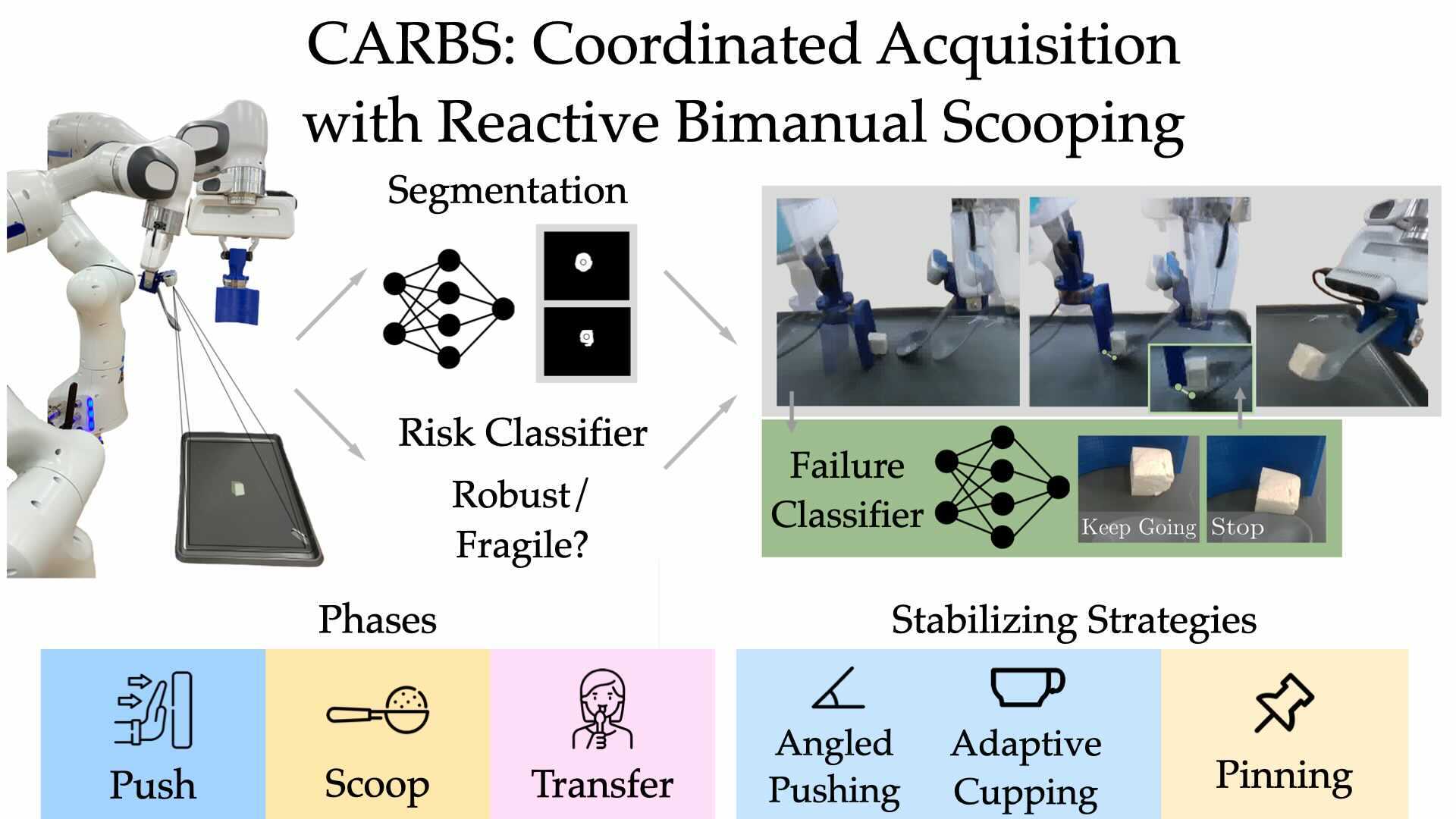

Learning Bimanual Scooping Policies for Food Acquisition

Contact: jgrannen@stanford.edu

Links: Paper | Video | Website

Keywords: bimanual manipulation, food acquisition, robot-assisted feeding, deformable object manipulation

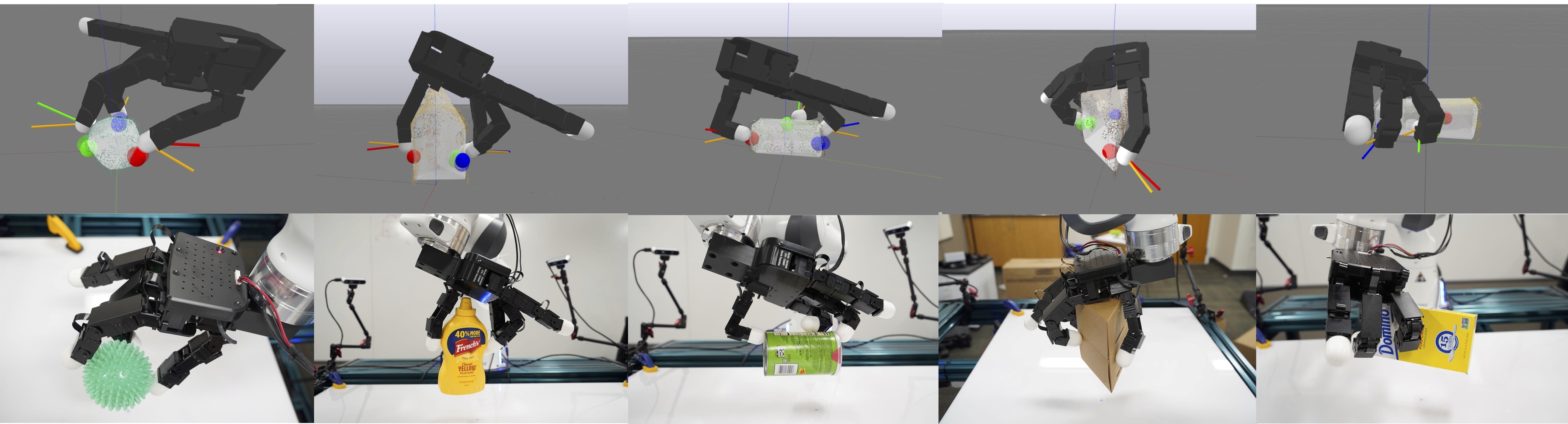

Learning Diverse and Physically Feasible Dexterous Grasps with Generative Model and Bilevel Optimization

Contact: amhwu@stanford.edu

Links: Paper | Video

Keywords: dexterous grasping, grasp planning, bilevel optimization, generative model

Learning Visuo-Haptic Skewering Strategies for Robot-Assisted Feeding

Contact: priyasun@stanford.edu

Links: Paper | Video

Keywords: manipulation, deformable manipulation, perception, planning, computer vision

Leveraging Haptic Feedback to Improve Data Quality and Quantity for Deep Imitation Learning Models

Contact: ccuan@stanford.edu

Links: Paper

Keywords: haptics and haptic interfaces, imitation learning, data curation

Offline Reinforcement Learning at Multiple Frequencies

Contact: kayburns@stanford.edu

Links: Paper | Video | Website

Keywords: offline reinforcement learning, robotics

R3M: A Universal Visual Representation for Robot Manipulation

Contact: surajn@stanford.edu

Links: Paper | Website

Keywords: visual representation learning, robotic manipulation

See, Hear, Feel: Smart Sensory Fusion for Robotic Manipulation

Contact: rhgao@cs.stanford.edu

Links: Paper | Video | Website

Keywords: multisensory, robot learning, robotic manipulation

We look forward to seeing you at CoRL 2022!