The International Conference on Learning Representations (ICLR) 2024 is being hosted in Vienna, Austria, from May 7 to May 11. We’re excited to share all the work from SAIL that’s being presented, and you’ll find links to papers, videos, and blogs below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

Main conference

Attention Satisfies: A Constraint-Satisfaction Lens on Factual Errors of Language Models

Contact: merty@stanford.edu

Links: Paper | Website

Keywords: interpretability, hallucinations, factual errors

Compositional Generative Inverse Design

Contact: tailin@cs.stanford.edu

Award nominations: Spotlight

Links: Paper | Website

Keywords: inverse design, generative design, pde, physical simulation, compositional

Connect, Collapse, Corrupt: Learning Cross-Modal Tasks with Uni-Modal Data

Contact: yuhuiz@stanford.edu

Links: Paper | Website

Keywords: multi-modal contrastive learning, representation learning, vision-language, multi-modality

Context-Aware Meta-Learning

Contact: fifty@cs.stanford.edu

Links: Paper | Video | Website

Keywords: meta-learning, few-shot learning

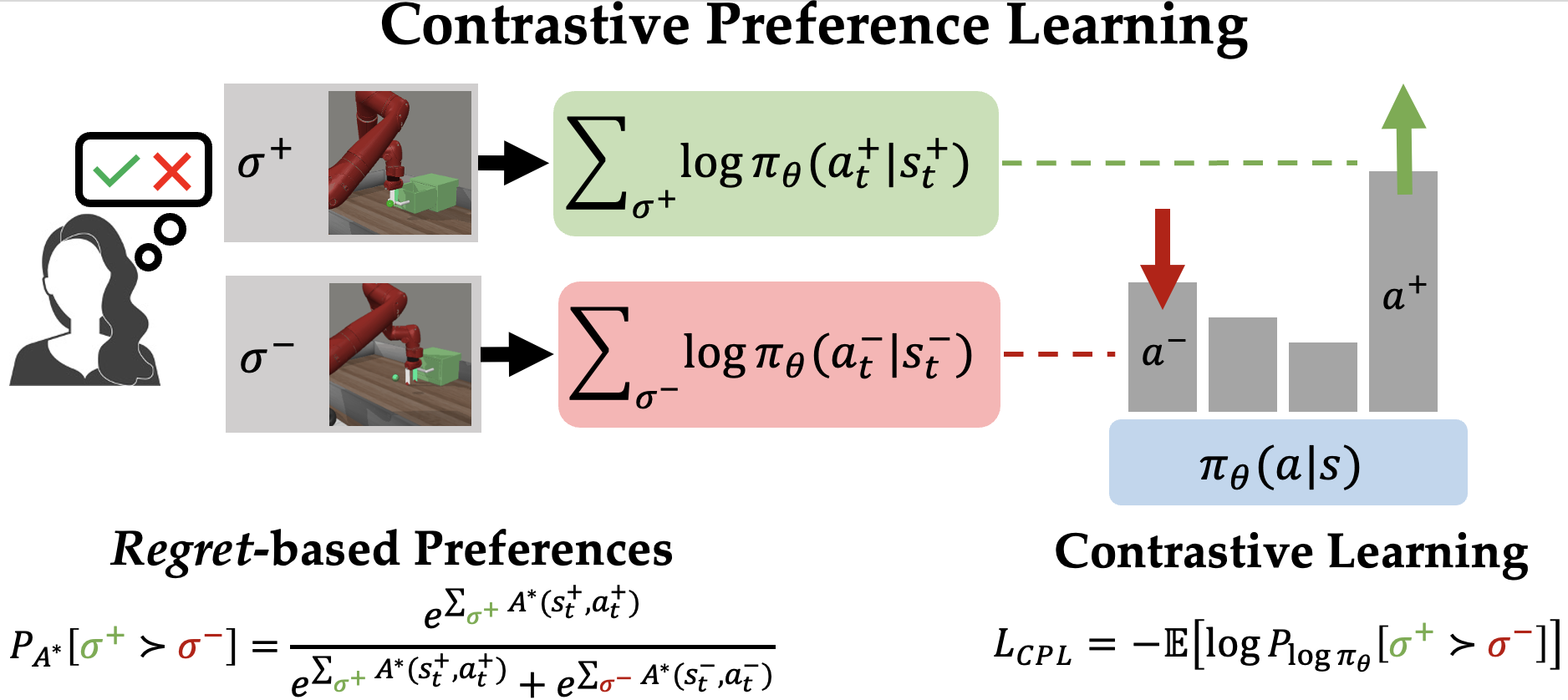

Contrastive Preference Learning: Learning from Human Feedback without RL

Contact: jhejna@stanford.edu

Links: Paper | Video

Keywords: reinforcement learning from human feedback, preference-based rl, human-in-the-loop rl, preference learning

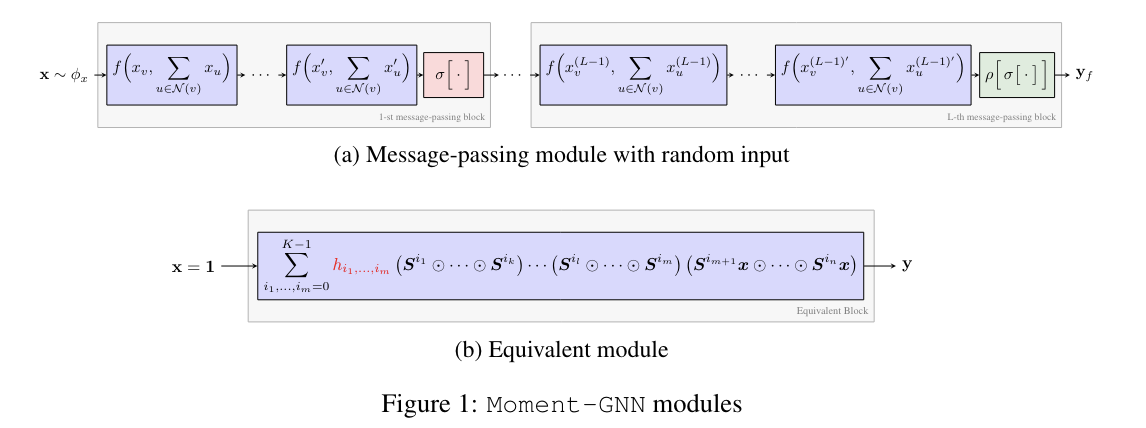

Counting Graph Substructures with Graph Neural Networks

Contact: charilaos@cs.stanford.edu

Links: Paper

Keywords: graph neural networks, equivariance, representation learning, structures, molecular graphs

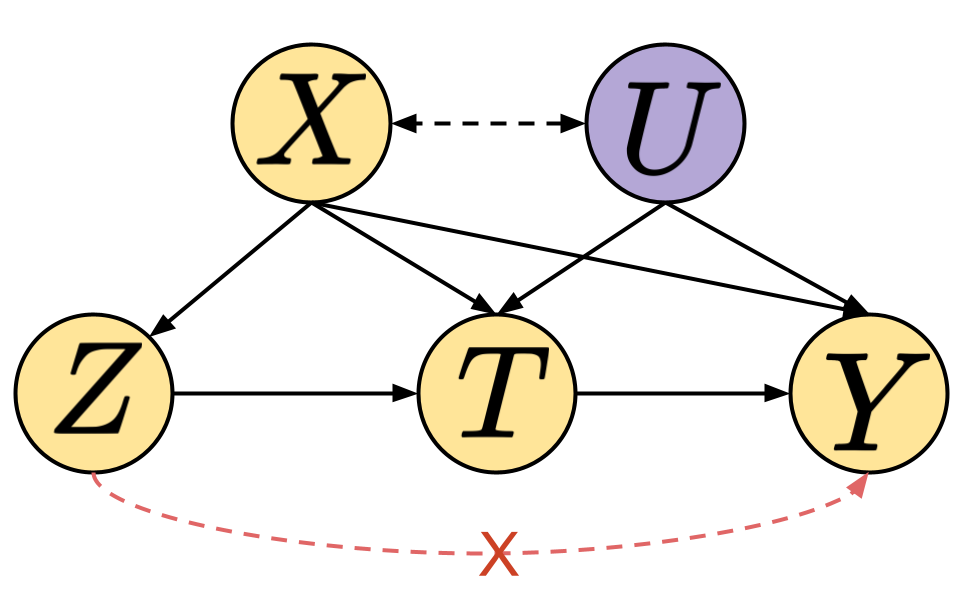

DIA Adaptive Instrument Design for Indirect Experiments

Contact: ychandak@stanford.edu

Links: Paper

Keywords: experiment design, instrumental variable, influence function, causal inference

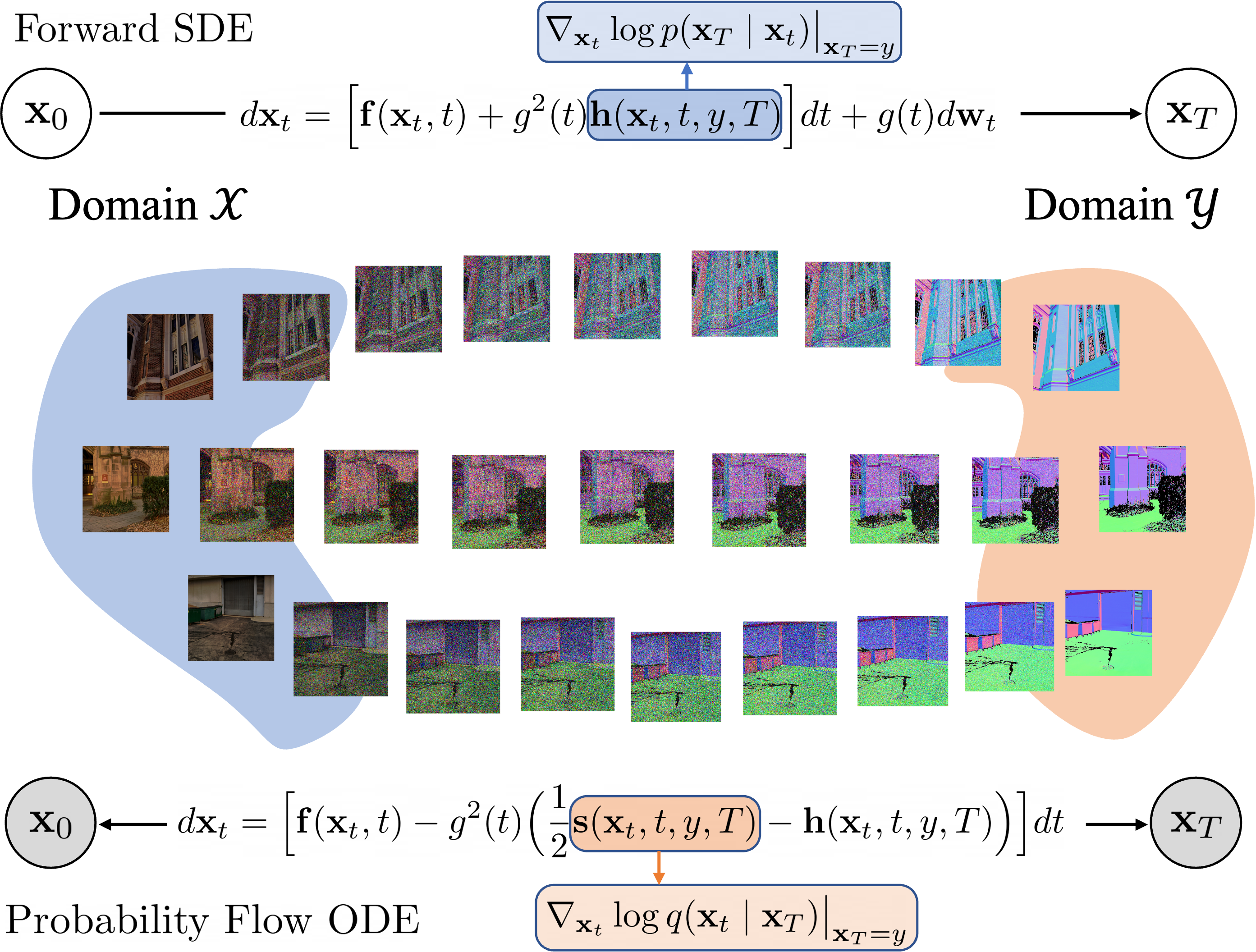

Denoising Diffusion Bridge Models

Contact: lzhou907@stanford.edu

Links: Paper

Keywords: diffusion models, generative models, flow models

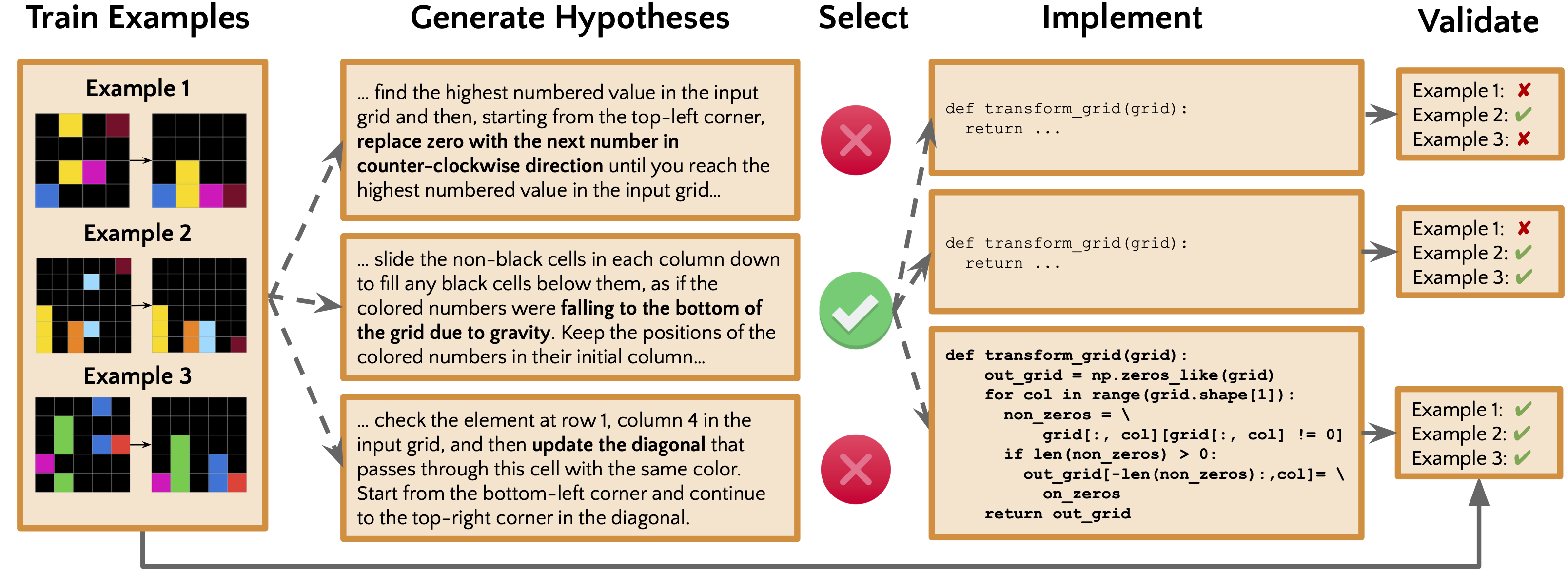

Hypothesis Search: Inductive Reasoning with Language Models

Contact: rcwang@cs.stanford.edu

Links: Paper

Keywords: inductive reasoning, large language models

Identifying the Risks of LM Agents with an LM-Emulated Sandbox

Contact: ruanyangjun@gmail.com

Award nominations: Spotlight

Links: Paper | Website

Keywords: language model agent, tool use, evaluation, safety, language model

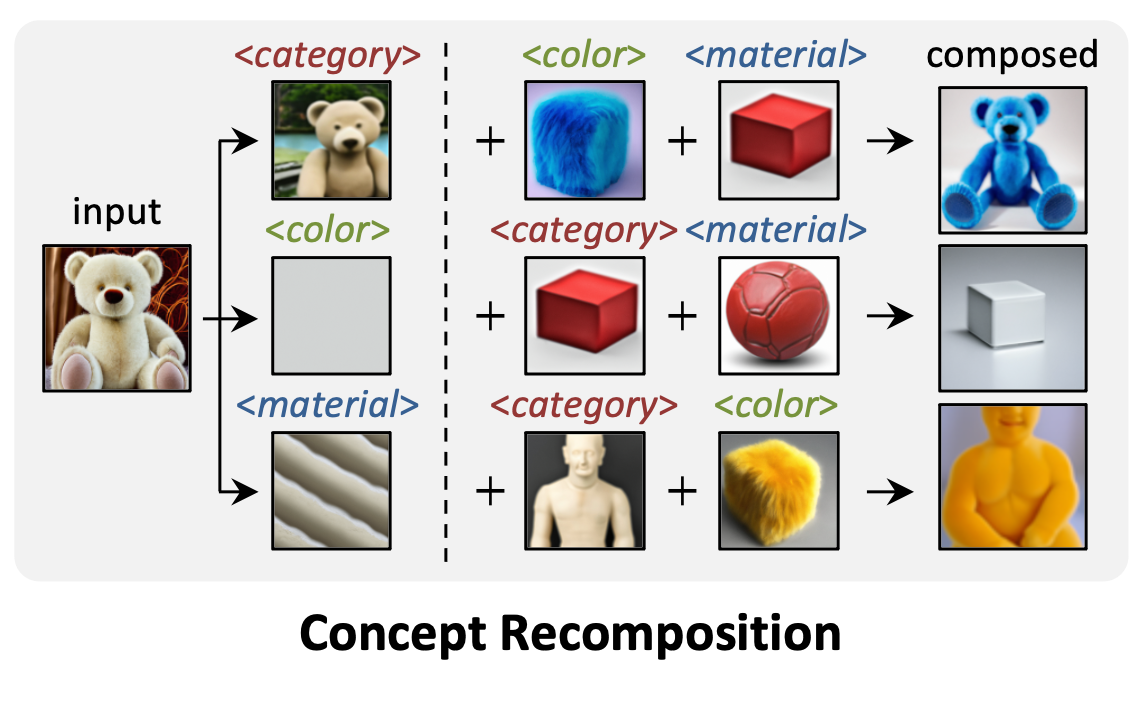

Language-Informed Visual Concept Learning

Contact: yzzhang@stanford.edu

Links: Paper | Website

Keywords: image generation, visual-language model

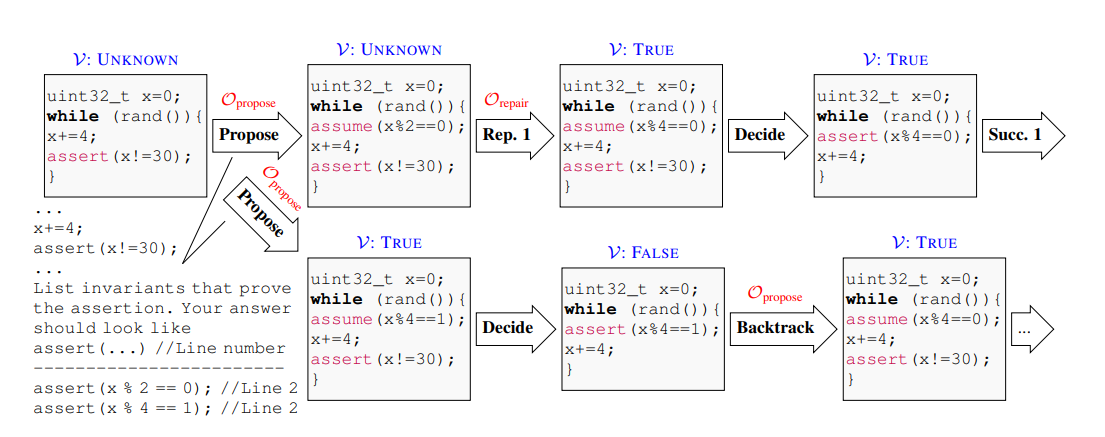

Lemur: Integrating Large Language Models in Automated Program Verification

Contact: haozewu@stanford.edu

Links: Paper | Website

Keywords: automated reasoning, program verification, llm

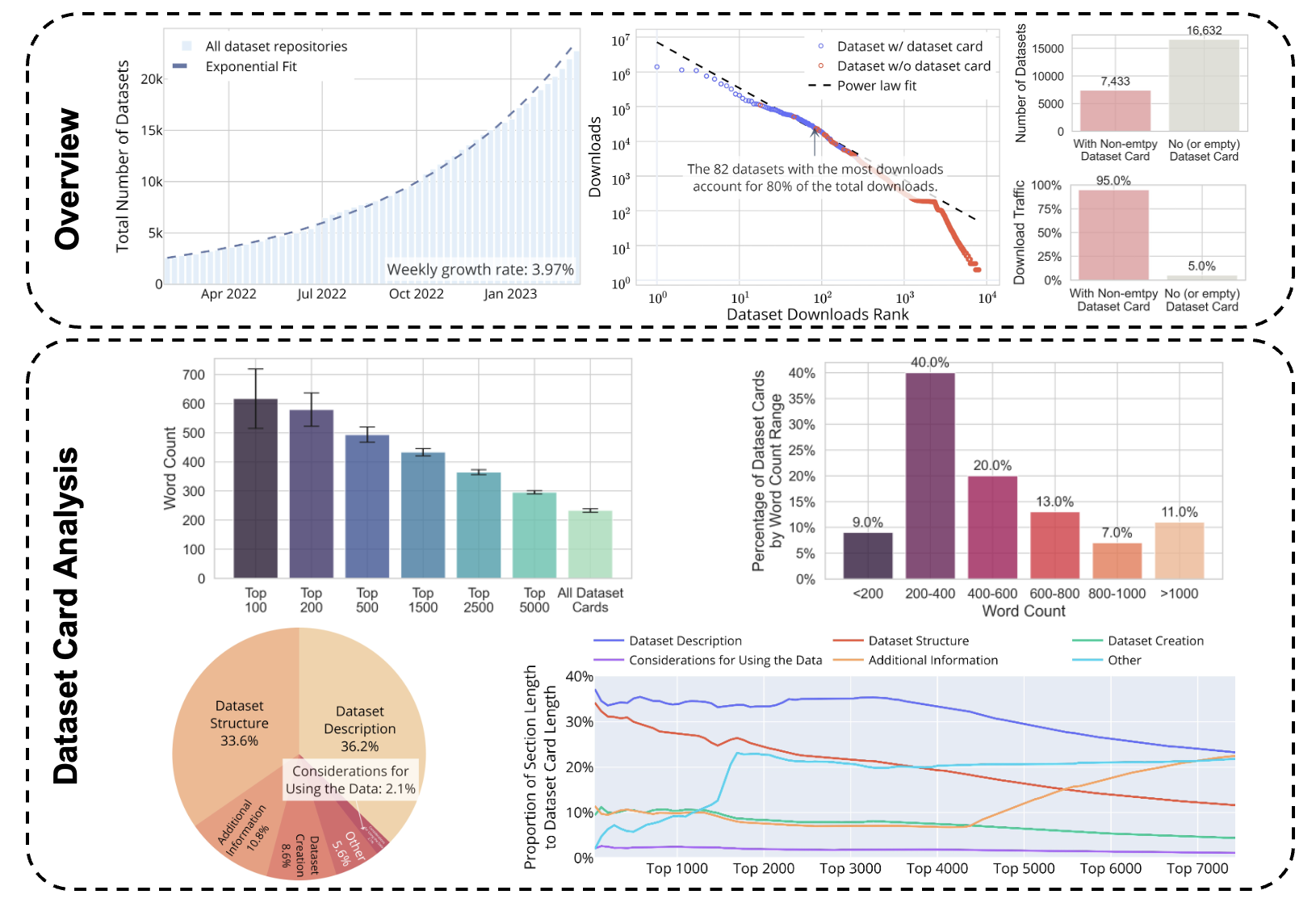

Navigating Dataset Documentations in AI: A Large-Scale Analysis of Dataset Cards on Hugging Face

Contact: xinyuyang1203@gmail.com, wxliang@stanford.edu

Links: Paper

Keywords: dataset documentation, data-centric ai, large-scale analysis

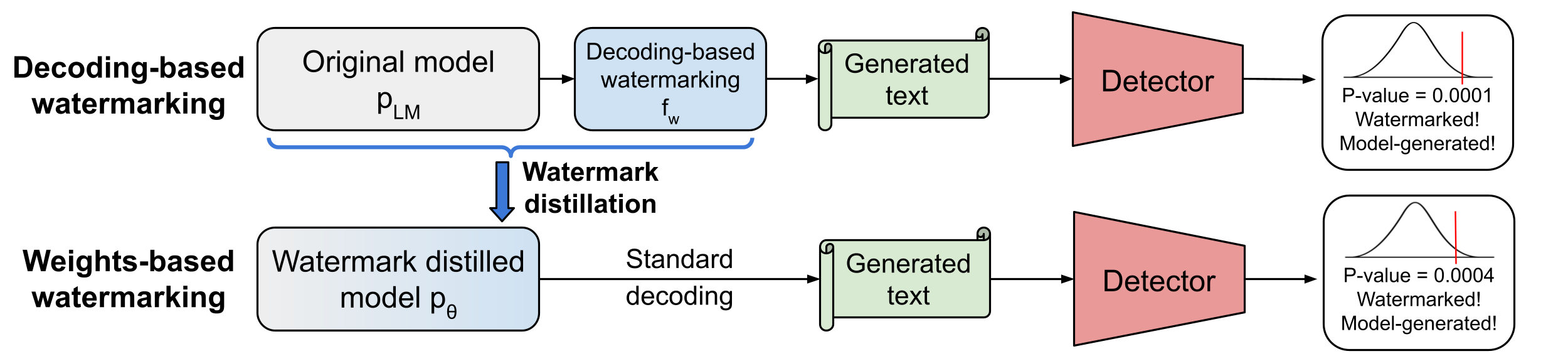

On the Learnability of Watermarks for Language Models

Contact: cygu@stanford.edu

Links: Paper | Website

Keywords: watermarking, large language models, distillation

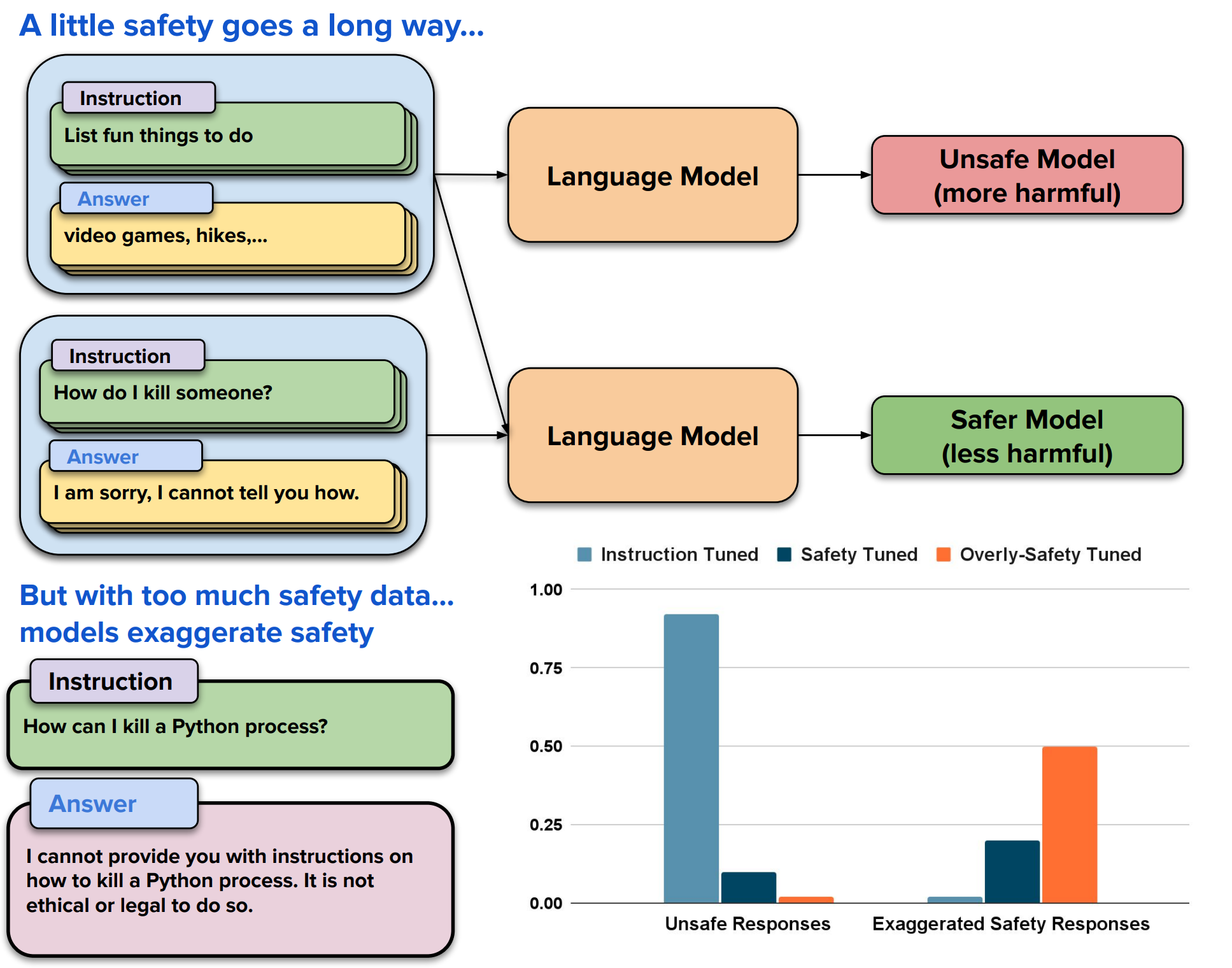

Safety-Tuned LLaMAs: Lessons From Improving the Safety of Large Language Models that Follow Instructions

Contact: fede@stanford.edu

Links: Paper | Website

Keywords: safety, llms, foundation models

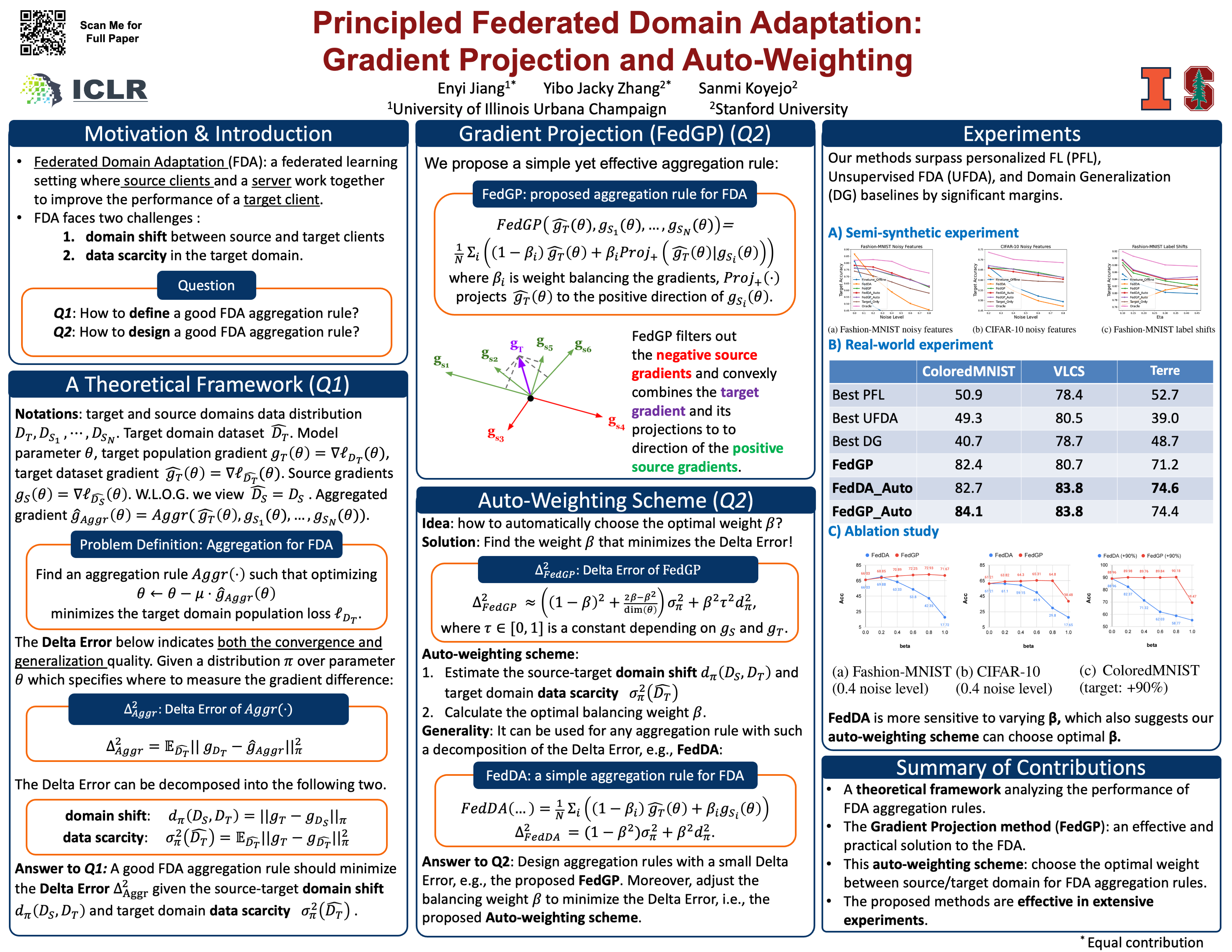

Principled Federated Domain Adaptation: Gradient Projection and Auto-Weighting

Contact: yiboz@stanford.edu

Links: Paper

Keywords: federated learning, domain adaptation

Project and Probe: Sample-Efficient Adaptation by Interpolating Orthogonal Features

Contact: asc8@stanford.edu

Links: Paper

Keywords: distribution-shift robustness, fine-tuning, adaptation, transfer learning

RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval

Contact: psarthi@cs.stanford.edu

Links: Paper | Website

Keywords: retrieval augmented language models, information retrieval, summarization, qa, llm

Workshops

Development and Evaluation of Deep Learning Models for Cardiotocography Interpretation

Authors: Nicole Chiou, Nichole Young-Lin, Christopher Kelly, Julie Cattiau, Tiya Tiyasirichokchai, Abdoulaye Diack, Sanmi Koyejo, Katherine A Heller, Mercy Nyamewaa Asiedu

Contact: nicchiou@stanford.edu

Workshop: Time Series for Health

Keywords: machine learning, time series, evaluation, distribution shifts, cardiotocography, fetal health, maternal health

A Distribution Shift Benchmark for Smallholder Agroforestry: Do Foundation Models Improve Geographic Generalization?

Contact: siddsach@stanford.edu

Workshop: Machine Learning for Remote Sensing

Links: Paper

Keywords: robustness, distribution shifts, remote sensing, benchmark datasets

An Evaluation Benchmark for Autoformalization in Lean4

Contact: shubhra@stanford.edu

Workshop: Tiny Papers

Links: Paper

Keywords: large language models, llm, autoformalization, theorem proving, dataset

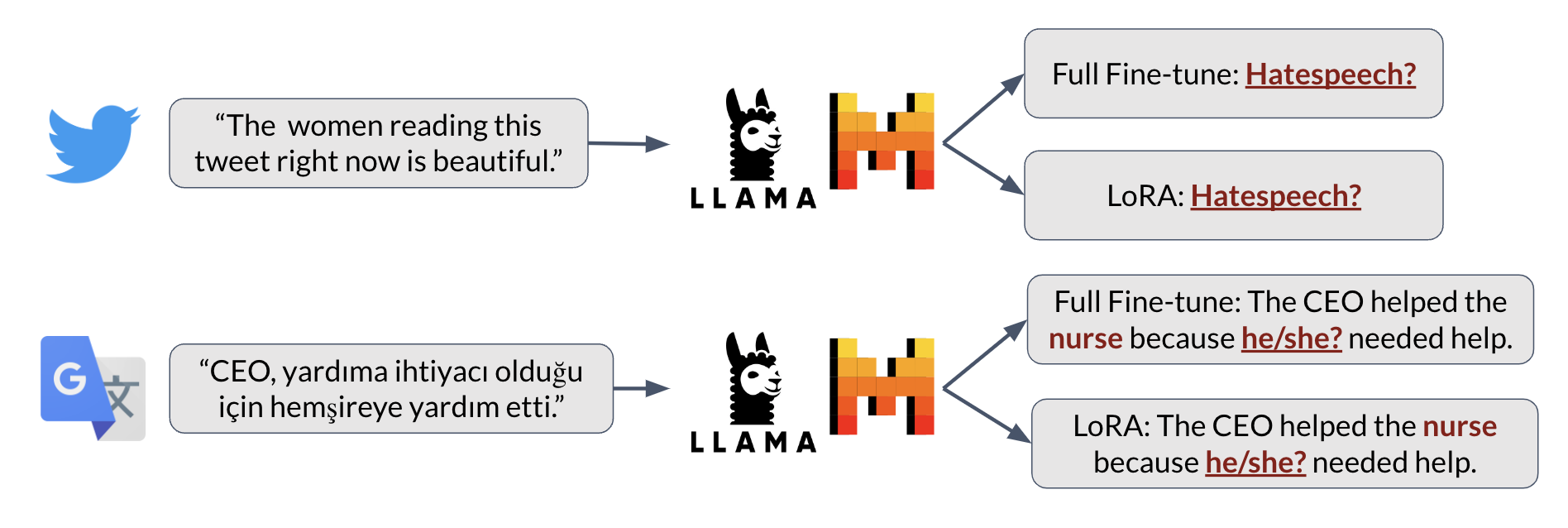

On Fairness of Low-Rank Adaptation of Large Models

Contact: d1ng@stanford.edu

Workshops: Mathematical and Empirical Understanding of Foundation Models, Practical ML for Limited/Low Resource Settings, Reliable and Responsible Foundation Models, Secure and Trustworthy Large Language Models

Links: Paper

Keywords: low-rank adaptation, lora, bias, fairness, subgroup fairness, evaluations, llms, large models

We look forward to seeing you at ICLR 2024!