Imagine that you are a student, sitting at home, studying for your next exam. How do you prepare? You might reach for flashcards, a popular method of memorizing simple facts and definitions. Beyond being simple, flashcards have also been shown to be quite effective, and elaborate spaced repetition techniques help students get the most out of their time.

In recent years, flashcard systems have been modernized on the web and on mobile phones, but they are more or less the same as they’ve always been. Read a card, flip it over, rinse and repeat.

What if there were a different way? Specifically, what if we could leverage what we know about modern artificial intelligence and natural language processing to create a chatbot for factual knowledge that is more engaging than traditional flashcard methods and just as effective? That’s the idea behind QuizBot, a recent research project at Stanford.

A New Way to Learn Factual Knowledge

A chatbot is a computer program designed to communicate with humans. Chatbots are traditionally built as state machines and are becoming increasingly powerful with recent advances in deep NLP. They are common in many domains and have become pervasive in the era of digital personal assistants. However, they are still not typically found in the field of education.
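To make the state-machine idea concrete, here is a minimal sketch of a single quiz question handled as a two-state dialog. The states, the `step` function, and the replies are all hypothetical and chosen for illustration; they are not taken from QuizBot.

```python
from enum import Enum, auto


class State(Enum):
    ASKING = auto()  # waiting for the user's answer
    DONE = auto()    # the question has been answered correctly


def step(state, user_input, answer, hint):
    """Return (next_state, reply) for one turn of a hypothetical quiz dialog."""
    text = user_input.strip().lower()
    if state is State.DONE:
        return State.DONE, "We already finished this question."
    if text == "hint":
        # Asking for a hint keeps the bot in the same state.
        return State.ASKING, f"Hint: {hint}"
    if text == answer.lower():
        return State.DONE, "Correct!"
    return State.ASKING, "Not quite, try again or type 'hint'."


state = State.ASKING
for message in ["hint", "Venus", "Mars"]:
    state, reply = step(state, message, answer="Mars", hint="It is red.")
    print(reply)
```

Each user message triggers exactly one transition, which is what makes this style easy to reason about but also rigid compared with learned dialog models.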

A Chatbot for Factual Knowledge

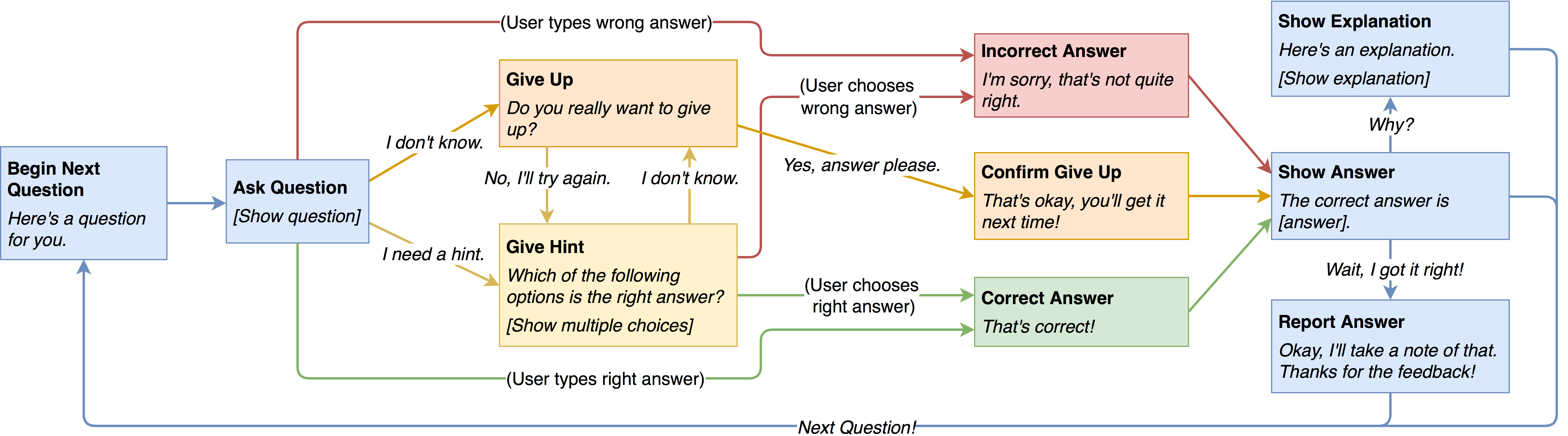

QuizBot is a chatbot for helping students learn factual knowledge. It covers a variety of topics and uses an interactive chat interface while leveraging modern artificial intelligence techniques, such as supervised NLP-powered targeted feedback and DASH (Mozer & Lindsey, 2017), an adaptive algorithm for question sequencing (see Section 3.3 in our paper). To make QuizBot feel more human, the chatbot is personified as an animated penguin named Frosty (see Figure 1).
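For a feel of what adaptive question sequencing can look like, here is a loose sketch in the spirit of DASH: it estimates how likely a student is to recall each question from the question's difficulty, the student's ability, and the study history, then picks a question to ask next. The logistic form, the coefficients, the field names, and the "ask the least likely to be recalled" policy are all assumptions made for illustration; the actual model is described in Section 3.3 of our paper and in the DASH reference.

```python
import math


def recall_probability(ability, difficulty, n_correct, n_attempts,
                       w_correct=0.8, w_attempts=0.3):
    """Logistic estimate of recall; all coefficients here are made up."""
    history = (w_correct * math.log1p(n_correct)
               - w_attempts * math.log1p(n_attempts))
    return 1.0 / (1.0 + math.exp(-(ability - difficulty + history)))


def next_question(ability, questions):
    """Pick the question the student is least likely to recall right now."""
    return min(
        questions,
        key=lambda q: recall_probability(
            ability, q["difficulty"], q["correct"], q["attempts"]),
    )


# Hypothetical question pool with per-question study history.
pool = [
    {"id": "q1", "difficulty": 0.2, "correct": 3, "attempts": 3},
    {"id": "q2", "difficulty": 1.5, "correct": 0, "attempts": 2},
    {"id": "q3", "difficulty": 0.9, "correct": 1, "attempts": 1},
]
print(next_question(ability=0.5, questions=pool)["id"])
```

With these toy numbers the hard, never-answered question `q2` is selected, since its estimated recall probability is the lowest of the three.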

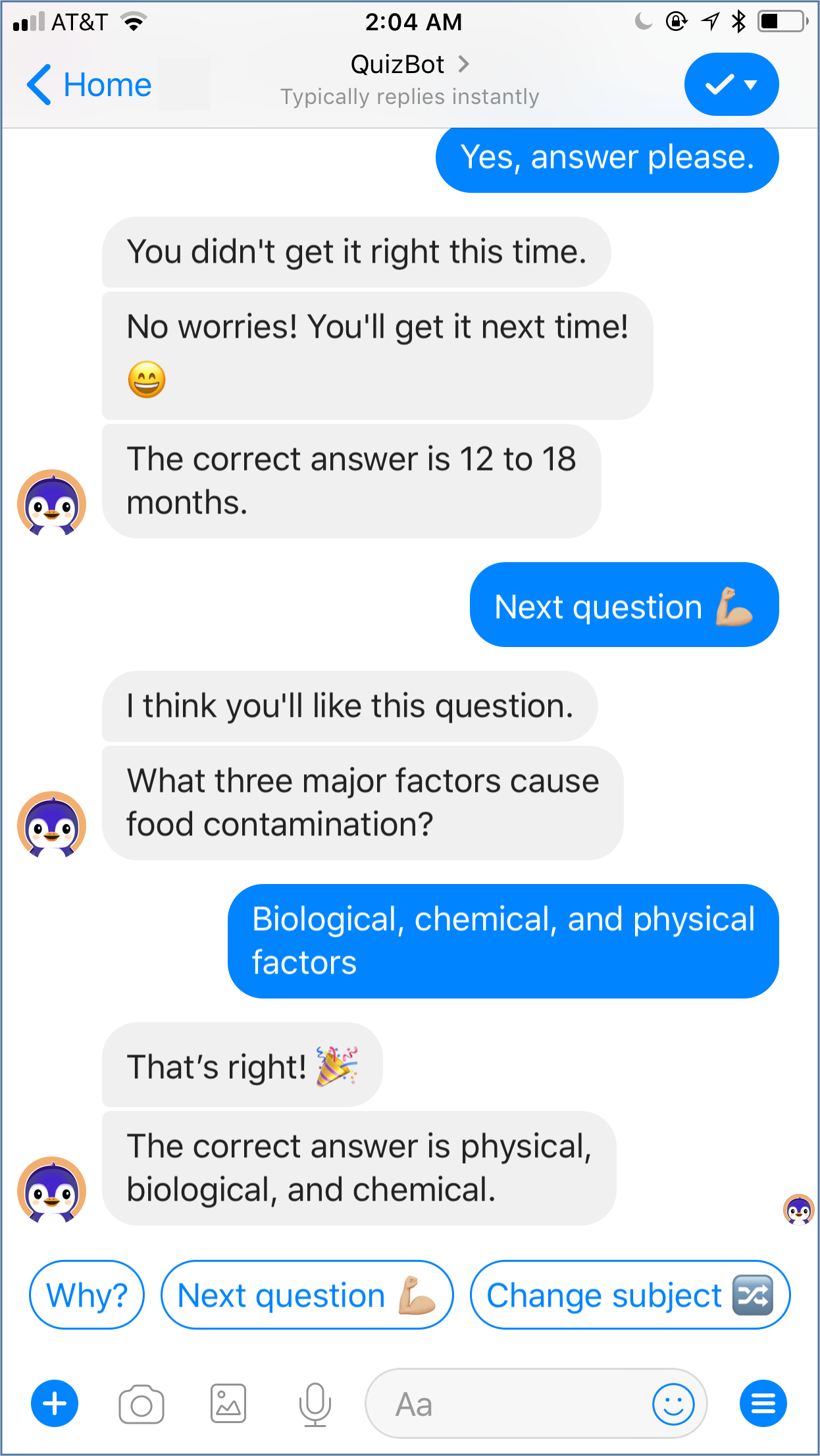

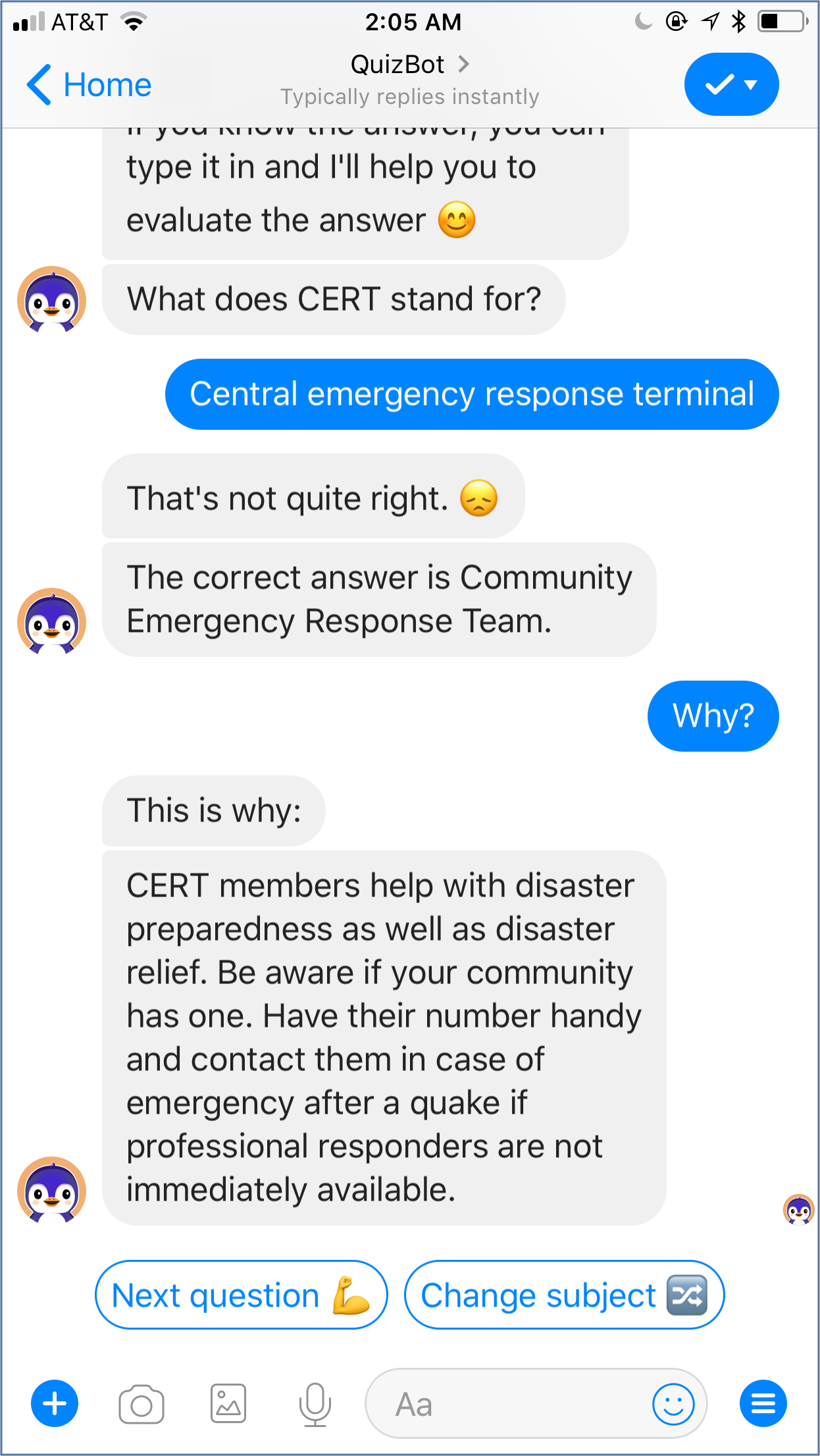

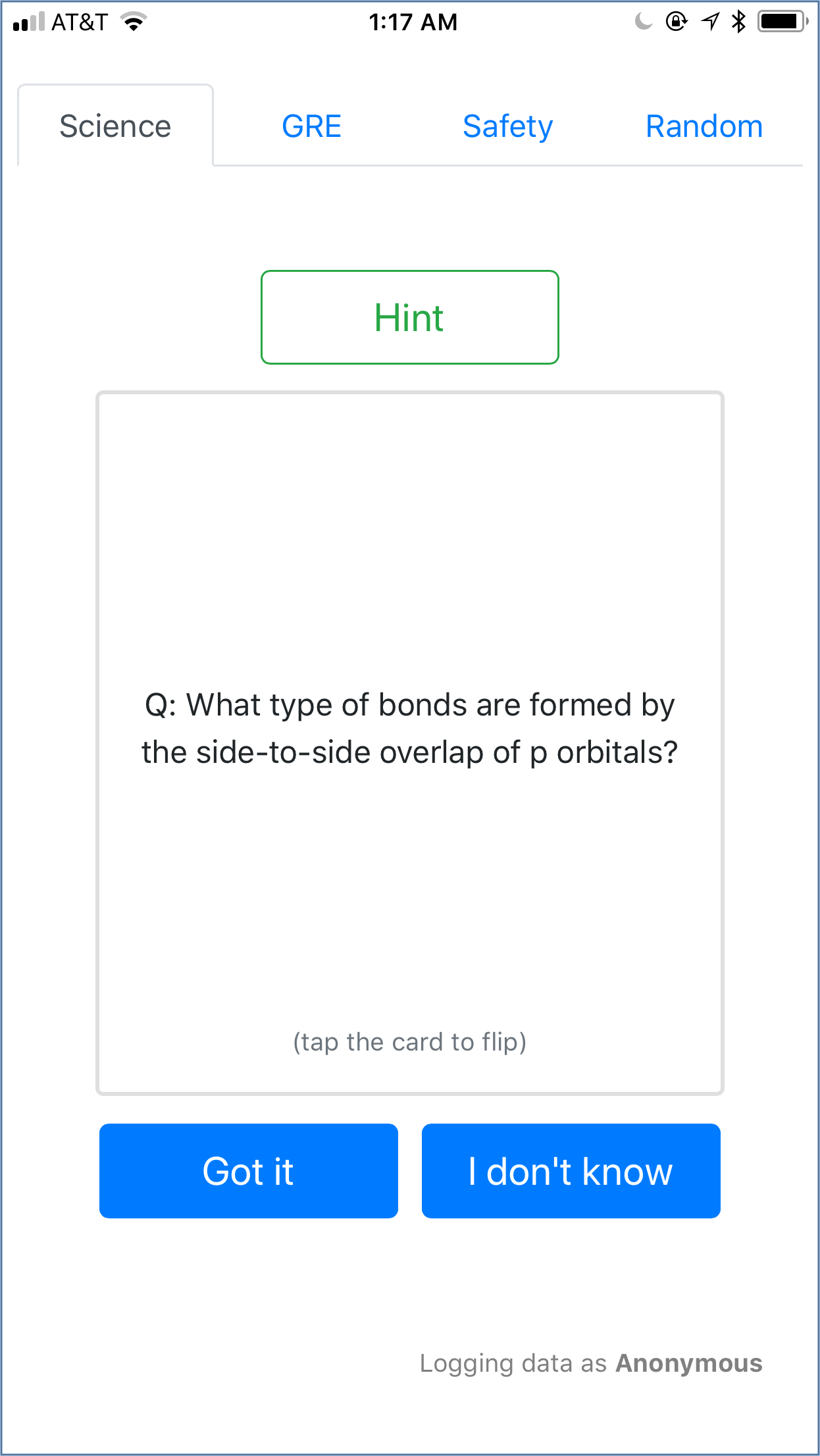

The primary method of interacting with QuizBot is to have Frosty “quiz” the user. Frosty begins by asking a factual question, and the user responds by typing an answer in natural language. QuizBot then evaluates the user input against the correct answer using the Smooth Inverse Frequency algorithm (Arora et al., 2016; see Section 3.2 in our paper) and responds accordingly. In addition, the user can ask for hints if they get stuck or for explanations to further their understanding.
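As a rough illustration of how a typed answer can be compared with the correct one, the sketch below applies the Smooth Inverse Frequency weighting step: each word vector is weighted by `a / (a + p(word))`, so frequent words like "the" contribute little. The word vectors, word probabilities, and the 0.8 threshold are toy values made up for this example, and the common-component removal step of the full algorithm is omitted, so this is not QuizBot's actual grader.

```python
import math

# Toy 3-dimensional word vectors and unigram probabilities (all made up).
VECTORS = {
    "the": [0.1, 0.1, 0.1],
    "red": [0.9, 0.1, 0.0],
    "planet": [0.2, 0.8, 0.1],
    "mars": [0.7, 0.6, 0.1],
    "ocean": [0.0, 0.1, 0.9],
}
WORD_PROB = {"the": 0.05, "red": 0.001, "planet": 0.0005,
             "mars": 0.0002, "ocean": 0.0008}


def sif_embedding(sentence, a=1e-3):
    """Average word vectors, weighting each word by a / (a + p(word))."""
    words = [w for w in sentence.lower().split() if w in VECTORS]
    if not words:
        return [0.0, 0.0, 0.0]
    total = [0.0, 0.0, 0.0]
    for w in words:
        weight = a / (a + WORD_PROB[w])
        total = [t + weight * v for t, v in zip(total, VECTORS[w])]
    return [t / len(words) for t in total]


def cosine(u, v):
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(x * x for x in v))
    return sum(x * y for x, y in zip(u, v)) / norm if norm else 0.0


def is_correct(user_answer, correct_answer, threshold=0.8):
    """Accept the answer if its embedding is close enough to the reference."""
    score = cosine(sif_embedding(user_answer), sif_embedding(correct_answer))
    return score >= threshold


print(is_correct("the red planet", "mars"))
print(is_correct("the ocean", "mars"))
```

Comparing embeddings rather than exact strings is what lets a grader accept differently worded answers, at the cost of needing a tuned similarity threshold.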

QuizBot’s design closely follows what one might expect from a human tutor, with conversational elements taken from typical human interactions. These include branching dialog options, positive reinforcement, and frequent use of casual language and emojis (see Figure 2). As a result, QuizBot begins to feel more like a person and less like a computer program.

Studies on Effectiveness and Engagement

When studying educational systems for learning, we often look at two metrics: effectiveness and engagement. They go hand in hand, and a successful system is usually good at both. These metrics are broadly defined as follows.

- Effectiveness: how good the system is at helping the user recognize and recall knowledge.

- Engagement: how successful the system is at keeping the attention of the user.

You might imagine that flashcards are highly effective, since years of research have been dedicated to improving that aspect alone. But they’re probably not all that engaging, especially given the short attention spans of students. We wanted to know whether QuizBot could make factual knowledge learning not only effective but also engaging.

Study Procedure

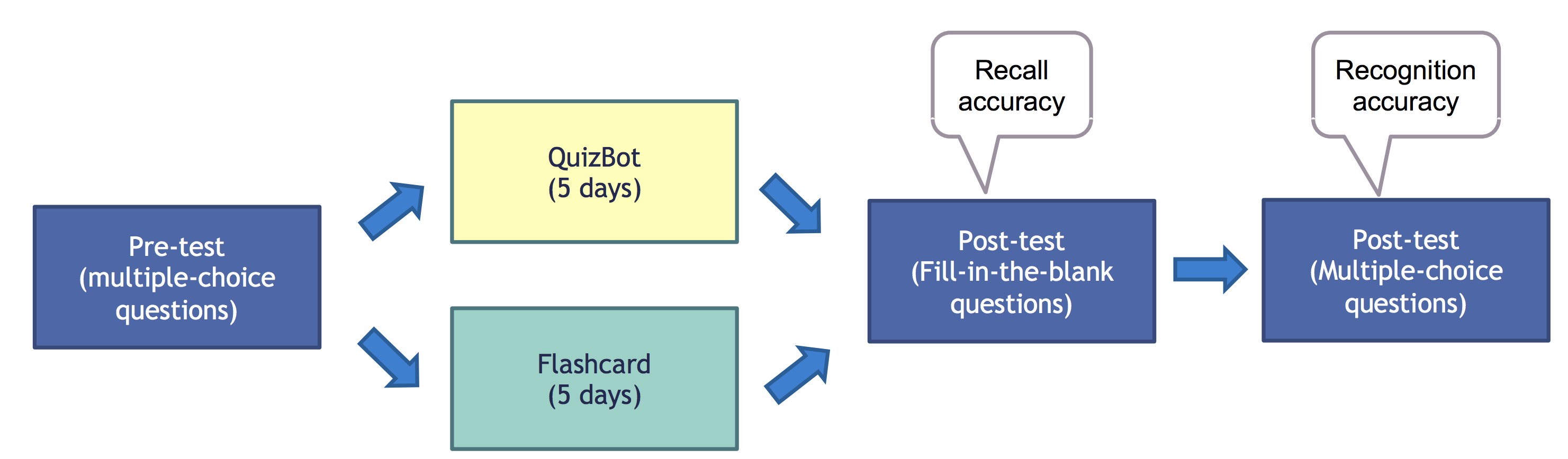

To put this question to the test, we evaluated QuizBot against a carefully designed flashcard application that mimics many of the same techniques used in QuizBot (see Figure 3). We performed two within-subject user studies, both following the same procedure, described below.

In the first study, every user practiced each question exactly twice. Participants for this study were 40 university students from 11 different universities, with each participant using both QuizBot and the flashcard app over the course of the study. In the second study, users had access to both apps for 5 days and used them of their own volition. Participants for this second study were 36 university students from 8 different universities.

To measure knowledge gain, we defined the following two metrics.

- Recall accuracy: percentage of questions correctly answered on the fill-in-the-blank post-test.

- Recognition accuracy: percentage of questions correctly answered on the multiple choice post-test.
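Both metrics above are plain percentages over graded post-test answers. A minimal sketch, using a hypothetical `accuracy` helper and made-up graded answers for a single participant:

```python
def accuracy(graded):
    """Percentage of post-test questions answered correctly."""
    if not graded:
        raise ValueError("no graded answers")
    return 100.0 * sum(graded) / len(graded)


# Hypothetical graded post-tests for one participant (True = correct).
fill_in_the_blank = [True, False, True, True]      # recall post-test
multiple_choice = [True, True, True, False, True]  # recognition post-test

print(f"recall accuracy: {accuracy(fill_in_the_blank):.1f}%")
print(f"recognition accuracy: {accuracy(multiple_choice):.1f}%")
```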

Study Results

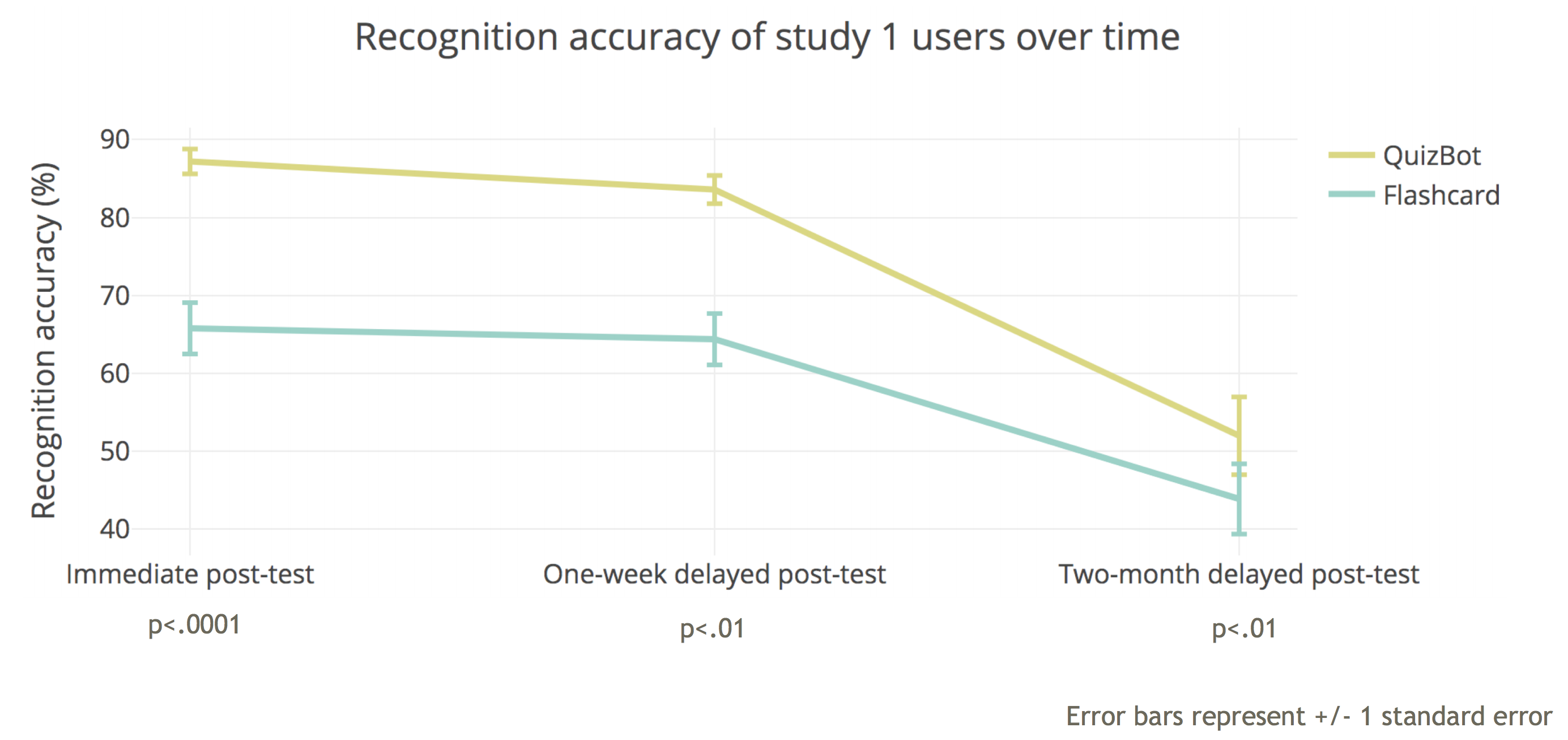

In the first study, students who studied with QuizBot recognized and recalled over 20 percentage points more correct answers (a 32% relative increase in recognition and a 46% relative increase in recall) than students who studied using the flashcard app. These differences were statistically significant and demonstrate that chatbots can be even more effective than flashcards.
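The paragraph above reports the same result in two ways: as an absolute difference in accuracy and as a relative increase over the flashcard baseline. The sketch below shows how the two relate. The function names are hypothetical and the accuracies are made-up round numbers, not the values measured in the study.

```python
def absolute_gain(treatment, baseline):
    """Difference between two accuracies, in percentage points."""
    return treatment - baseline


def relative_gain(treatment, baseline):
    """Gain expressed as a percentage of the baseline accuracy."""
    return 100.0 * (treatment - baseline) / baseline


# Hypothetical accuracies (in %), not the study's actual numbers.
flashcard, chatbot = 50.0, 65.0
print(absolute_gain(chatbot, flashcard))
print(relative_gain(chatbot, flashcard))
```

The lower the baseline accuracy, the larger the relative increase for the same absolute difference, which is why the two figures can look so different.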

Furthermore, the learning improvement persisted over time. Even after two months, students who used QuizBot were still able to recall more answers correctly than students who used flashcards!

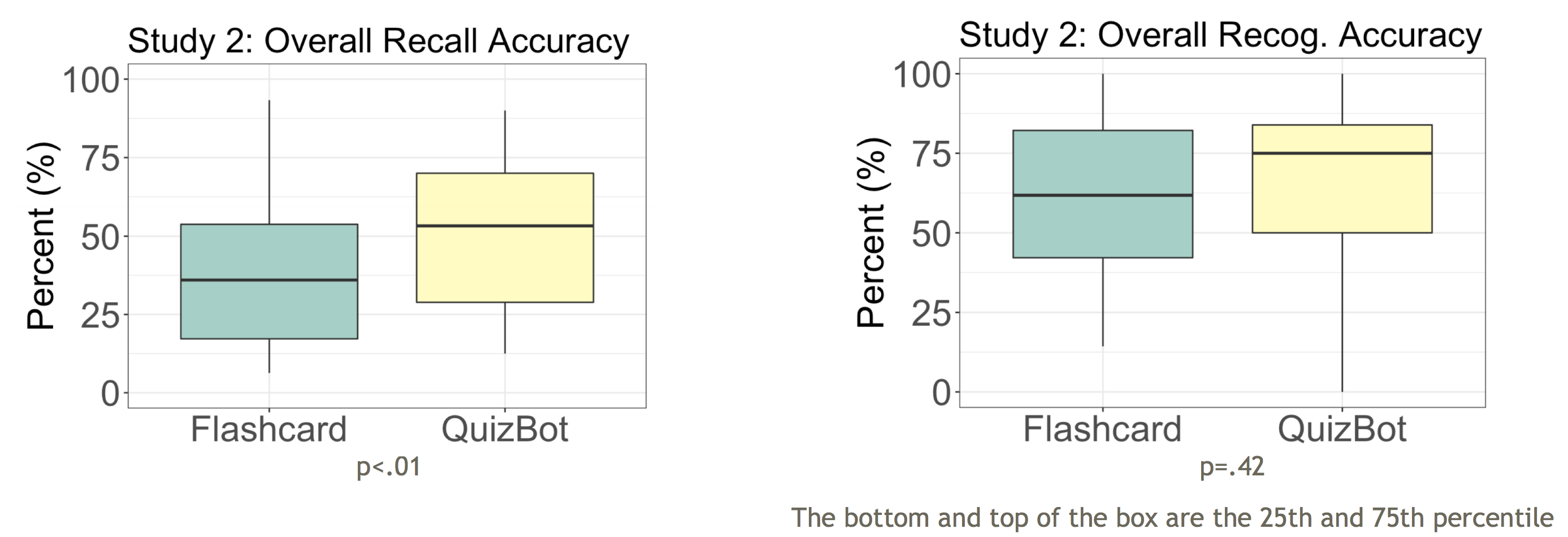

In the second study, even when students were allowed to use each app voluntarily, those who studied with QuizBot still recalled over 12 percentage points more correct answers (a 31% relative increase) and recognized a similar number of correct answers compared with those who studied with the flashcard app. Again, the difference in recall was statistically significant.

The results from the second study also reveal that students on average spent over 2.6x more time with QuizBot than with the flashcard app. Students cited the increased level of interactivity and feedback from QuizBot, as well as the friendly nature of Frosty, as key factors. This indicates that chatbots can be far more engaging than traditional methods, especially in the context of casual learning.

Teaching a New Generation of Students

As artificial intelligence continues to play a bigger role in our lives, interest in its uses in education will grow as well. QuizBot demonstrates that chatbots not only have a place in the future of education but also have the potential to surpass the efficacy of traditional methods while boosting engagement in learning.

QuizBot is part of a larger AI + Education research project called Smart Primer led by Professor Emma Brunskill and Professor James Landay. The project, inspired by Neal Stephenson’s novel, “The Diamond Age,” is a step towards an adaptive, intelligent tutoring system that leverages compelling narratives, intelligent tutoring chatbots, real-world activities, and a child’s physical and educational context. QuizBot is but one of many ways the future of education can be advanced using artificial intelligence.

To learn more about QuizBot, we recommend reading our CHI paper and taking a look at Stanford’s press release on the project. Information about Smart Primer can be found on the Smart Primer website.

References

Mozer, M. C., & Lindsey, R. V. (2017). “Predicting and Improving Memory Retention: Psychological Theory Matters in the Big Data Era.” In M. N. Jones (Ed.), Frontiers of cognitive psychology. Big data in cognitive science (pp. 34-64). Psychology Press.

Arora, S., Liang, Y., & Ma, T. (2016). “A Simple but Tough-to-Beat Baseline for Sentence Embeddings.” International Conference on Learning Representations (ICLR) 2017.

Full Citation

Sherry Ruan, Liwei Jiang, Justin Xu, Bryce Joe-Kun Tham, Zhengneng Qiu, Yeshuang Zhu, Elizabeth L. Murnane, Emma Brunskill, and James A. Landay. 2019. QuizBot: A Dialogue-based Adaptive Learning System for Factual Knowledge. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19). ACM, New York, NY, USA, Paper 357, 13 pages. DOI: https://doi.org/10.1145/3290605.3300587

You can also access our paper at https://hci.stanford.edu/publications/2019/quizbot/quizbot-chi2019.pdf.
