The Conference on Robot Learning (CoRL 2021) will take place next week. We’re excited to share all the work from SAIL that will be presented, and you’ll find links to papers, videos and blogs below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

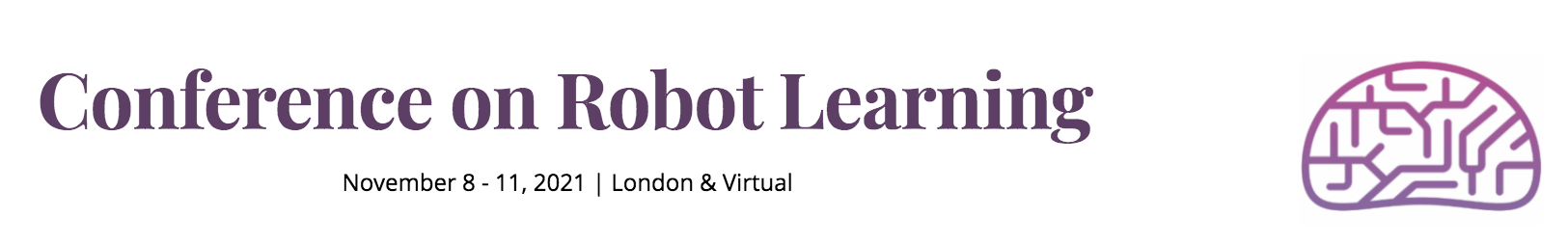

LILA: Language-Informed Latent Actions

Authors: Siddharth Karamcheti*, Megha Srivastava*, Percy Liang, Dorsa Sadigh

Contact: skaramcheti@cs.stanford.edu, megha@cs.stanford.edu

Keywords: natural language, shared autonomy, human-robot interaction

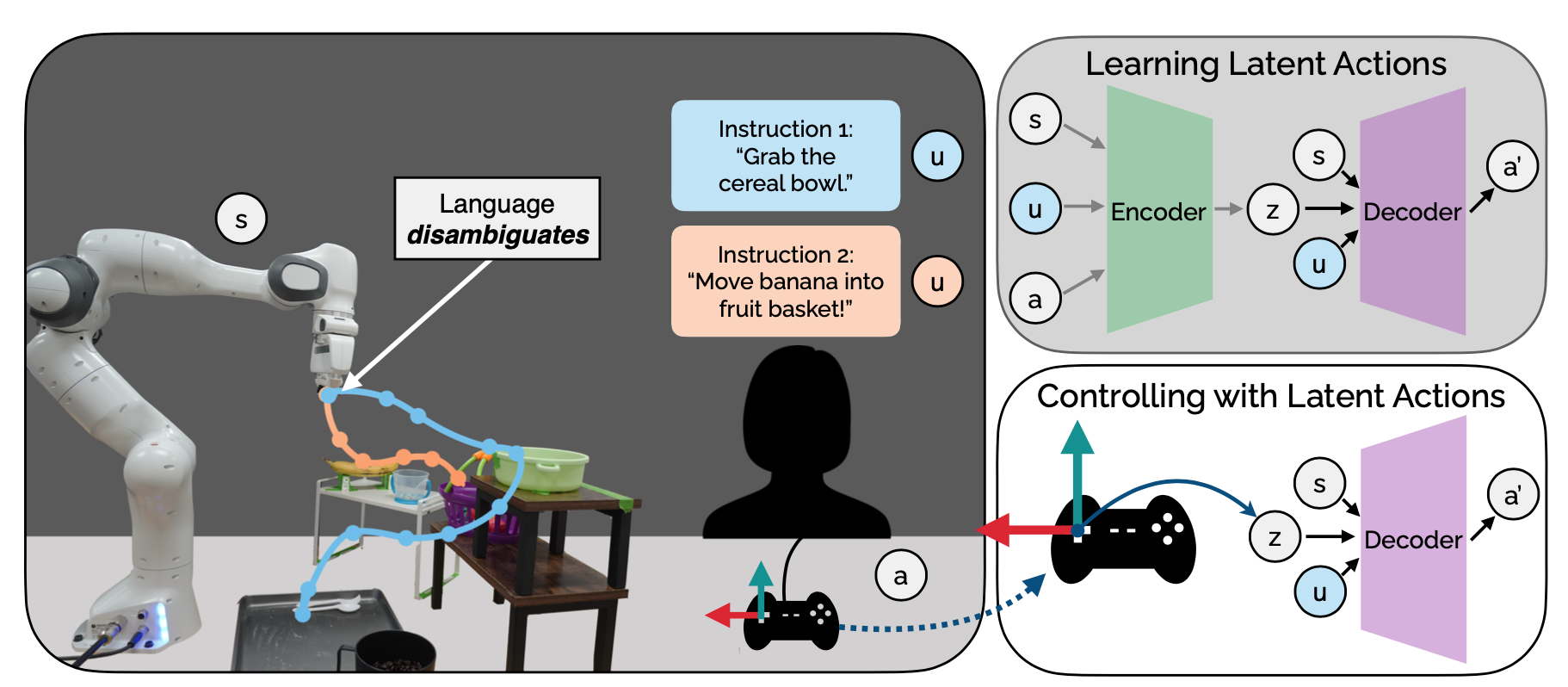

BEHAVIOR: Benchmark for Everyday Household Activities in Virtual, Interactive, and Ecological Environments

Authors: Sanjana Srivastava*, Chengshu Li*, Michael Lingelbach*, Roberto Martín-Martín*, Fei Xia, Kent Vainio, Zheng Lian, Cem Gokmen, Shyamal Buch, C. Karen Liu, Silvio Savarese, Hyowon Gweon, Jiajun Wu, Li Fei-Fei

Contact: sanjana2@stanford.edu

Links: Paper | Website

Keywords: embodied ai, benchmarking, household activities

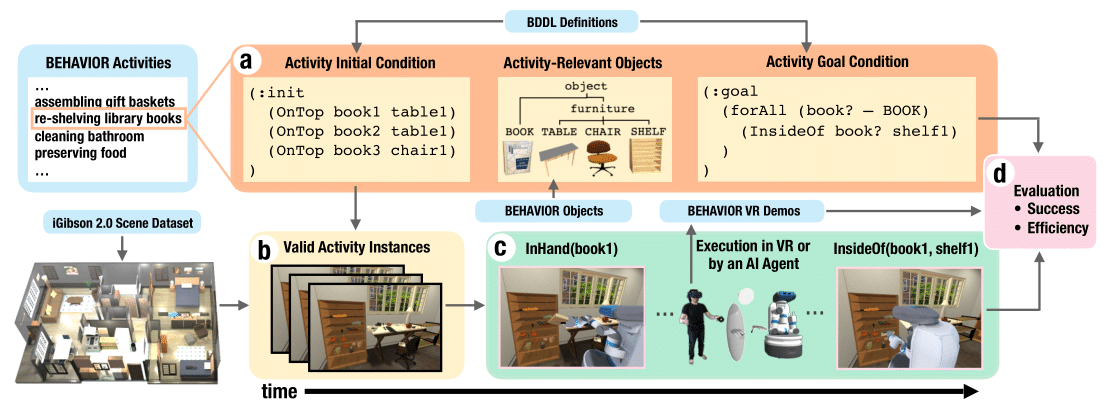

Co-GAIL: Learning Diverse Strategies for Human-Robot Collaboration

Authors: Chen Wang, Claudia Pérez-D’Arpino, Danfei Xu, Li Fei-Fei, C. Karen Liu, Silvio Savarese

Contact: chenwj@stanford.edu

Links: Paper | Website

Keywords: learning for human-robot collaboration, imitation learning

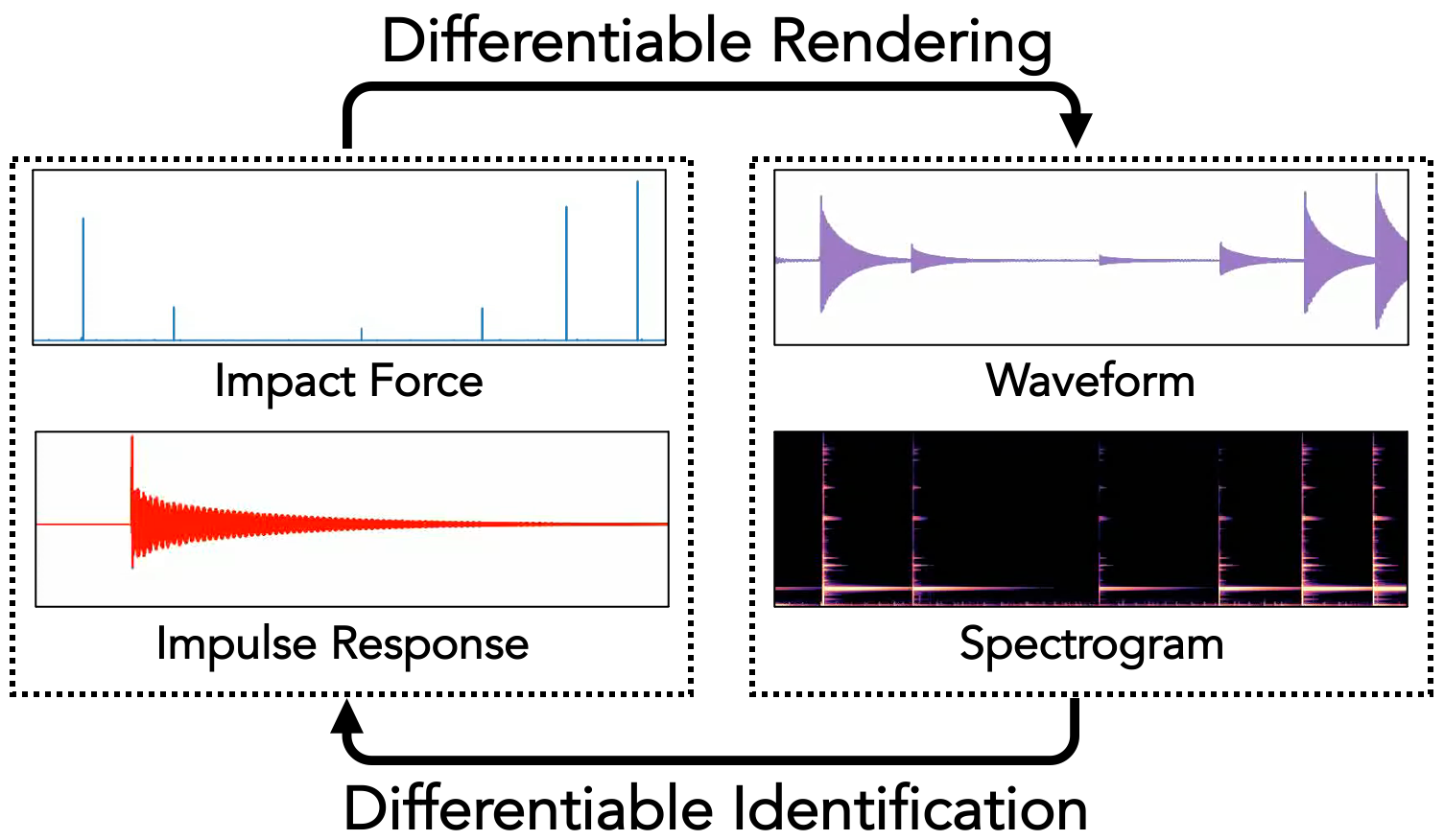

DiffImpact: Differentiable Rendering and Identification of Impact Sounds

Authors: Samuel Clarke, Negin Heravi, Mark Rau, Ruohan Gao, Jiajun Wu, Doug James, Jeannette Bohg

Contact: spclarke@stanford.edu

Links: Paper | Website

Keywords: differentiable sound rendering, auditory scene analysis

Example-Driven Model-Based Reinforcement Learning for Solving Long-Horizon Visuomotor Tasks

Authors: Bohan Wu, Suraj Nair, Li Fei-Fei*, Chelsea Finn*

Contact: bohanwu@cs.stanford.edu

Links: Paper

Keywords: model-based reinforcement learning, long-horizon tasks

GRAC: Self-Guided and Self-Regularized Actor-Critic

Authors: Lin Shao, Yifan You, Mengyuan Yan, Shenli Yuan, Qingyun Sun, Jeannette Bohg

Contact: harry473417@ucla.edu

Links: Paper | Website

Keywords: deep reinforcement learning, q-learning

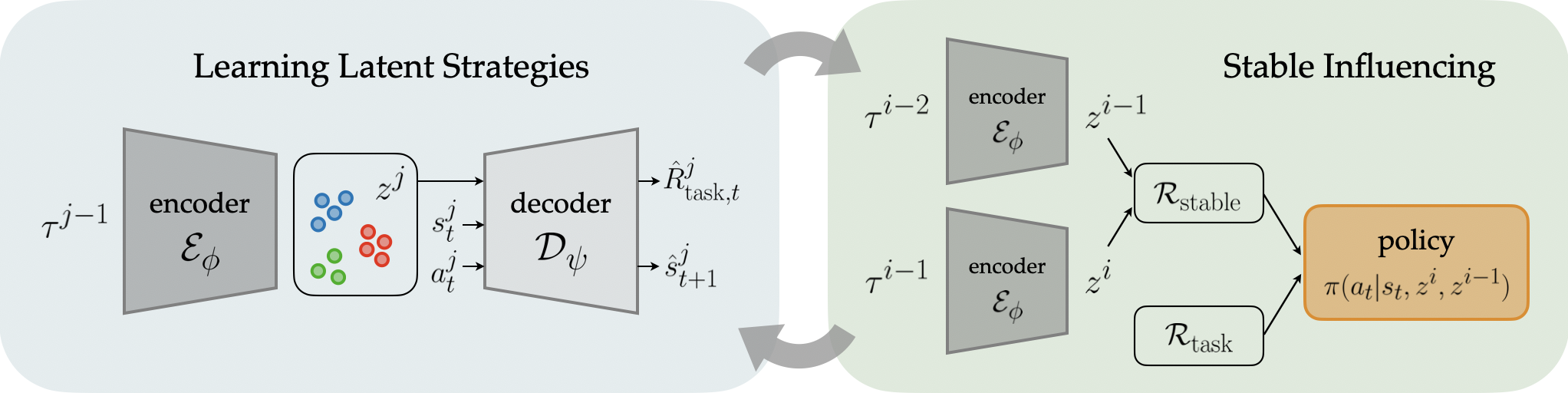

Influencing Towards Stable Multi-Agent Interactions

Authors: Woodrow Z. Wang, Andy Shih, Annie Xie, Dorsa Sadigh

Contact: woodywang153@gmail.com

Award nominations: Oral presentation

Links: Paper | Website

Keywords: multi-agent interactions, human-robot interaction, non-stationarity

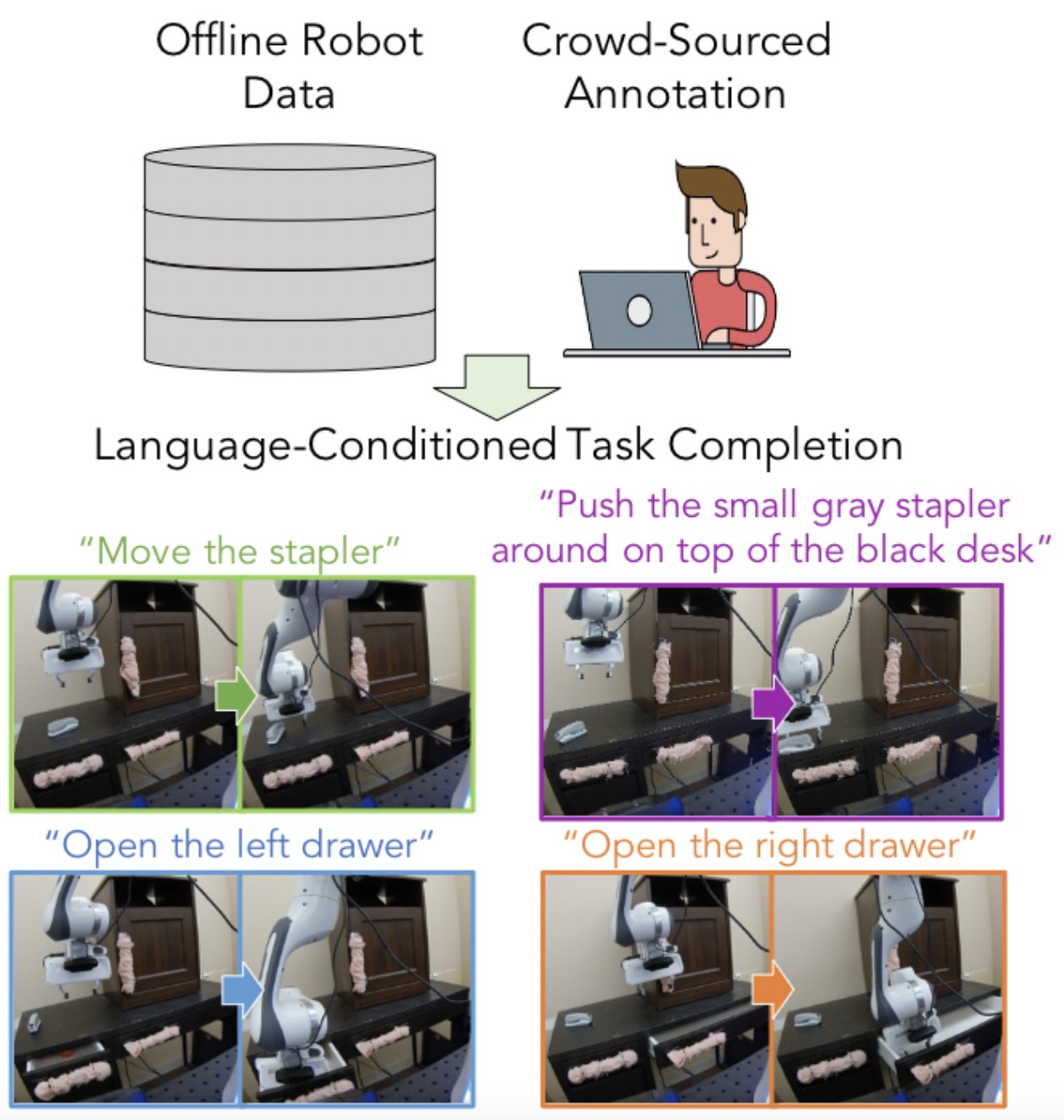

Learning Language-Conditioned Robot Behavior from Offline Data and Crowd-Sourced Annotation

Authors: Suraj Nair, Eric Mitchell, Kevin Chen, Brian Ichter, Silvio Savarese, Chelsea Finn

Contact: surajn@stanford.edu

Links: Paper | Website

Keywords: natural language, offline rl, visuomotor manipulation

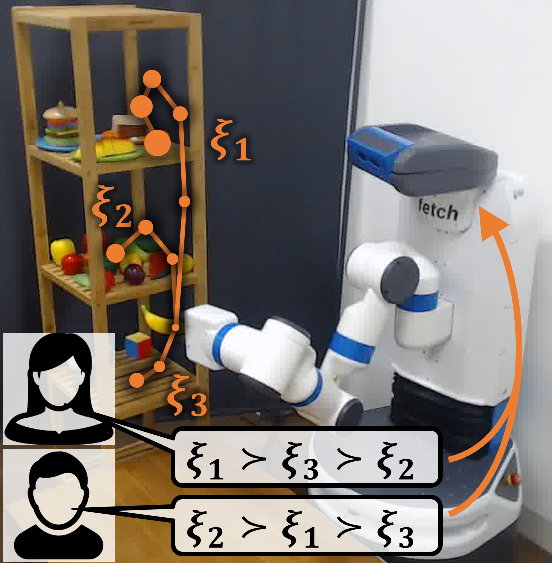

Learning Multimodal Rewards from Rankings

Authors: Vivek Myers, Erdem Bıyık, Nima Anari, Dorsa Sadigh

Contact: ebiyik@stanford.edu

Links: Paper | Video | Website

Keywords: reward learning, active learning, learning from rankings, multimodality

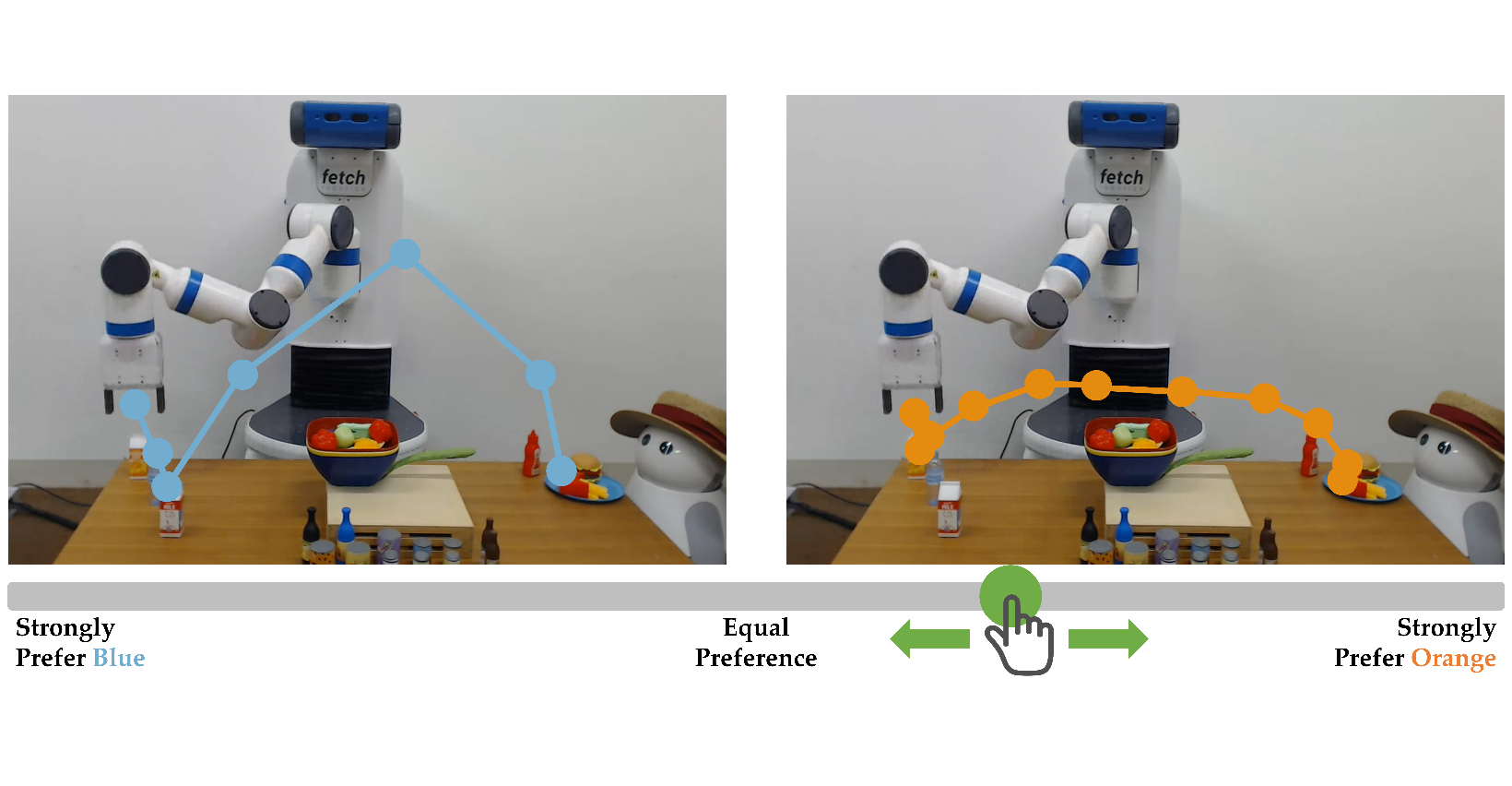

Learning Reward Functions from Scale Feedback

Authors: Nils Wilde*, Erdem Bıyık*, Dorsa Sadigh, Stephen L. Smith

Contact: ebiyik@stanford.edu

Links: Paper | Video | Website

Keywords: preference-based learning, reward learning, active learning, scale feedback

Learning to Regrasp by Learning to Place

Authors: Shuo Cheng, Kaichun Mo, Lin Shao

Contact: lins2@stanford.edu

Links: Paper | Website

Keywords: regrasping, object placement, robotic manipulation

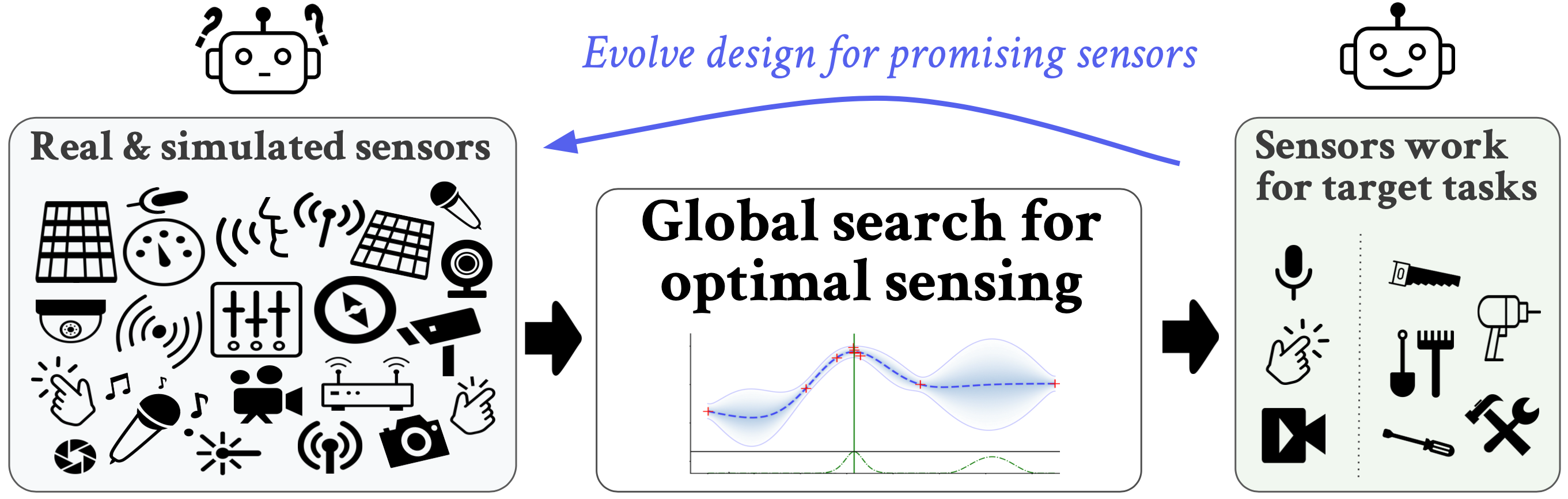

Learning to be Multimodal: Co-evolving Sensory Modalities and Sensor Properties

Authors: Rika Antonova, Jeannette Bohg

Contact: rika.antonova@stanford.edu

Links: Paper

Keywords: co-design, multimodal sensing, corl blue sky track

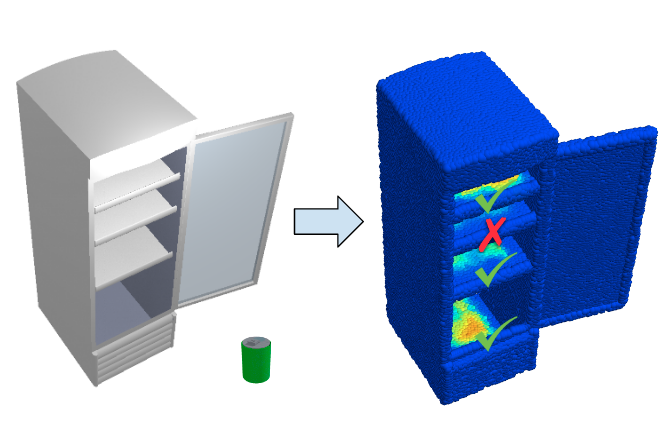

O2O-Afford: Annotation-Free Large-Scale Object-Object Affordance Learning

Authors: Kaichun Mo, Yuzhe Qin, Fanbo Xiang, Hao Su, Leonidas J. Guibas

Contact: kaichun@cs.stanford.edu

Links: Paper | Video | Website

Keywords: robotic vision, object-object interaction, visual affordance

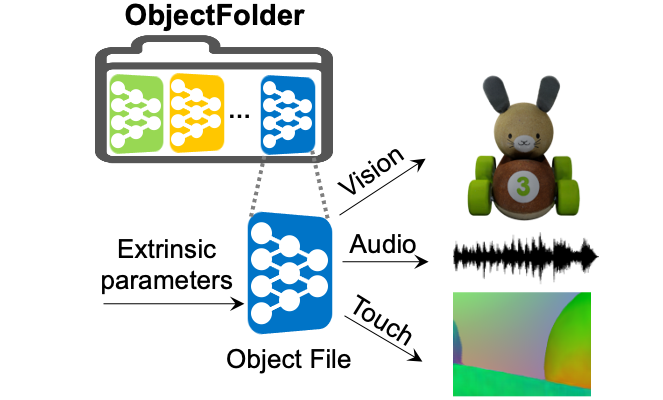

ObjectFolder: A Dataset of Objects with Implicit Visual, Auditory, and Tactile Representations

Authors: Ruohan Gao, Yen-Yu Chang, Shivani Mall, Li Fei-Fei, Jiajun Wu

Contact: rhgao@cs.stanford.edu

Links: Paper | Video | Website

Keywords: object dataset, multisensory learning, implicit representations

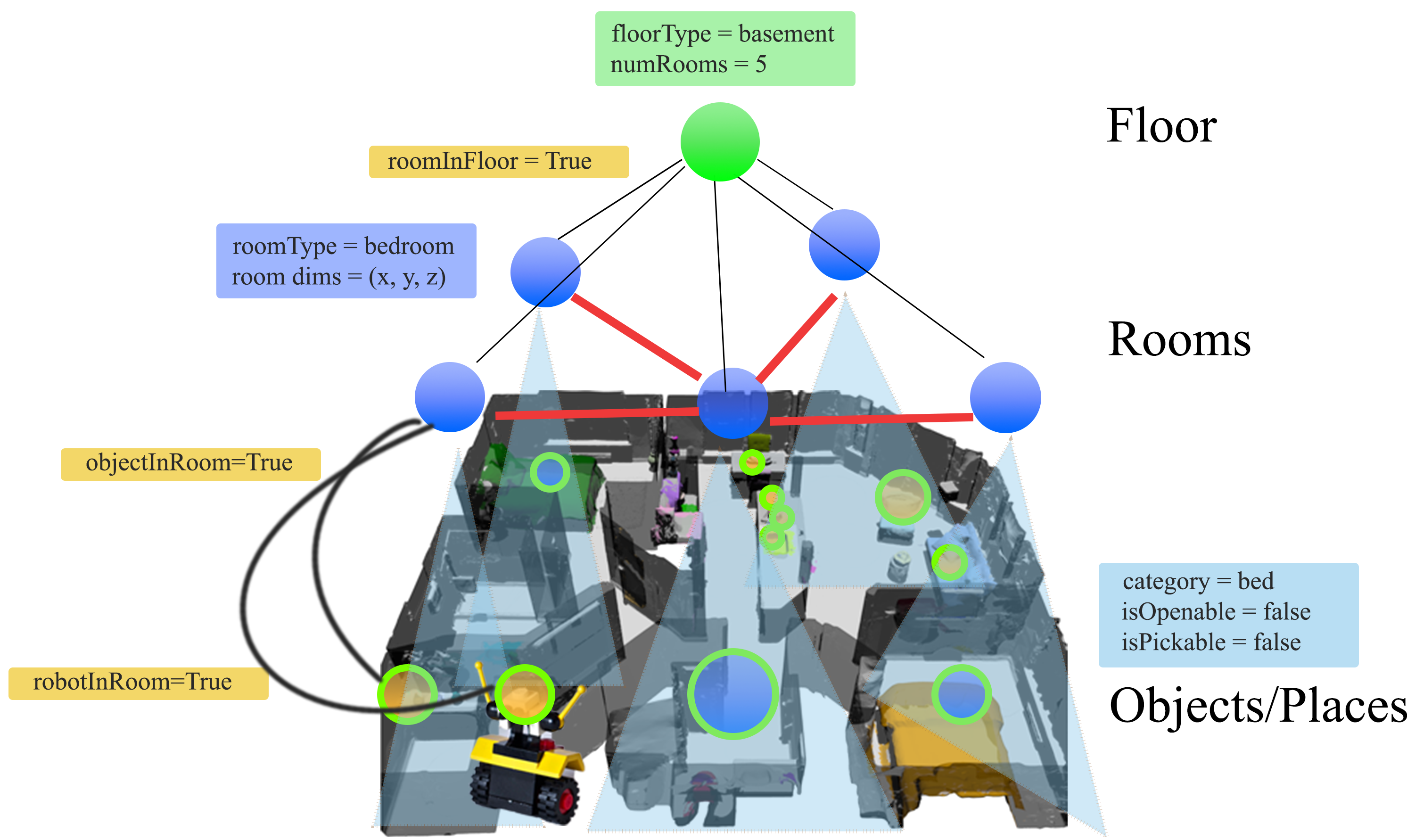

Taskography: Evaluating robot task planning over large 3D scene graphs

Authors: Christopher Agia, Krishna Murthy Jatavallabhula, Mohamed Khodeir, Ondrej Miksik, Vibhav Vineet, Mustafa Mukadam, Liam Paull, Florian Shkurti

Contact: cagia@stanford.edu

Links: Paper | Website

Keywords: robot task planning, 3d scene graphs, learning to plan, benchmarks

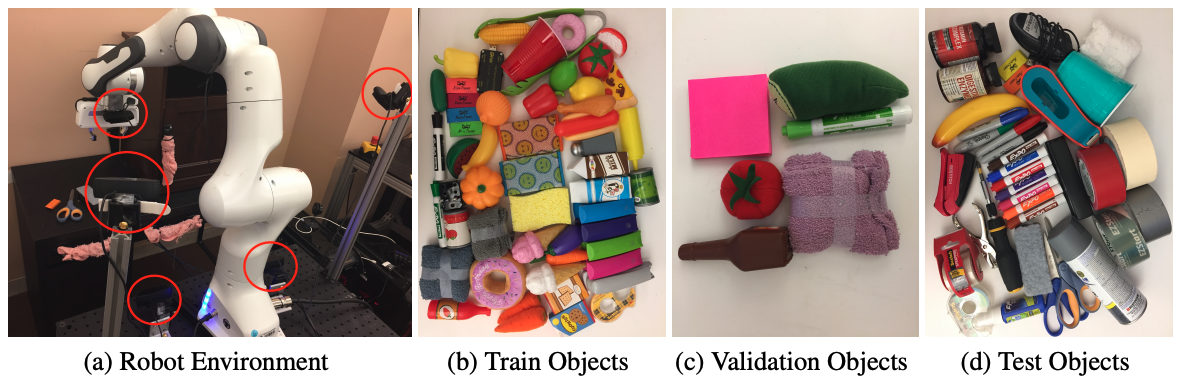

What Matters in Learning from Offline Human Demonstrations for Robot Manipulation

Authors: Ajay Mandlekar, Danfei Xu, Josiah Wong, Soroush Nasiriany, Chen Wang, Rohun Kulkarni, Li Fei-Fei, Silvio Savarese, Yuke Zhu, Roberto Martín-Martín

Contact: amandlek@cs.stanford.edu

Award nominations: Oral

Links: Paper | Blog Post | Video | Website

Keywords: imitation learning, offline reinforcement learning, robot manipulation

XIRL: Cross-embodiment Inverse Reinforcement Learning

Authors: Kevin Zakka, Andy Zeng, Pete Florence, Jonathan Tompson, Jeannette Bohg, Debidatta Dwibedi

Contact: zakka@berkeley.edu

Links: Paper | Website

Keywords: inverse reinforcement learning, imitation learning, self-supervised learning

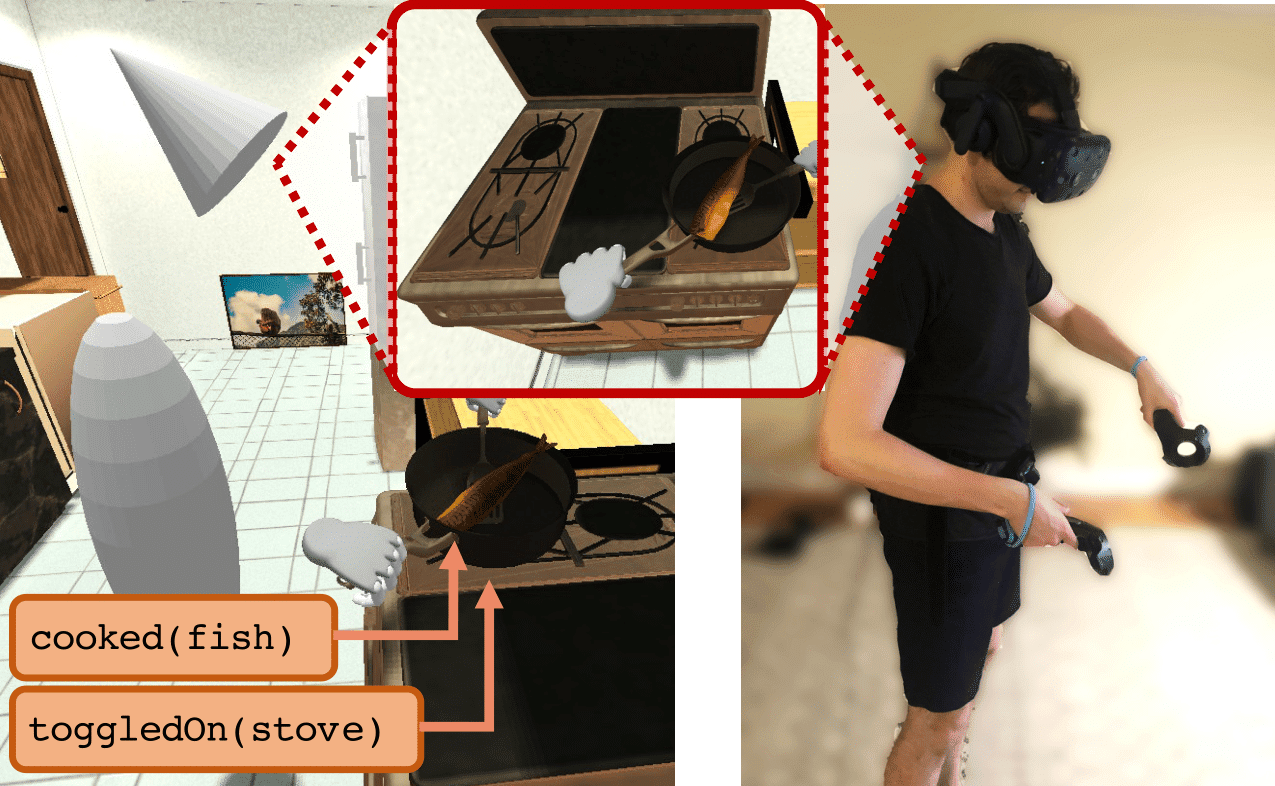

iGibson 2.0: Object-Centric Simulation for Robot Learning of Everyday Household Tasks

Authors: Chengshu Li*, Fei Xia*, Roberto Martín-Martín*, Michael Lingelbach, Sanjana Srivastava, Bokui Shen, Kent Vainio, Cem Gokmen, Gokul Dharan, Tanish Jain, Andrey Kurenkov, C. Karen Liu, Hyowon Gweon, Jiajun Wu, Li Fei-Fei, Silvio Savarese

Contact: chengshu@stanford.edu

Links: Paper | Website

Keywords: simulation environment, embodied ai, virtual reality interface

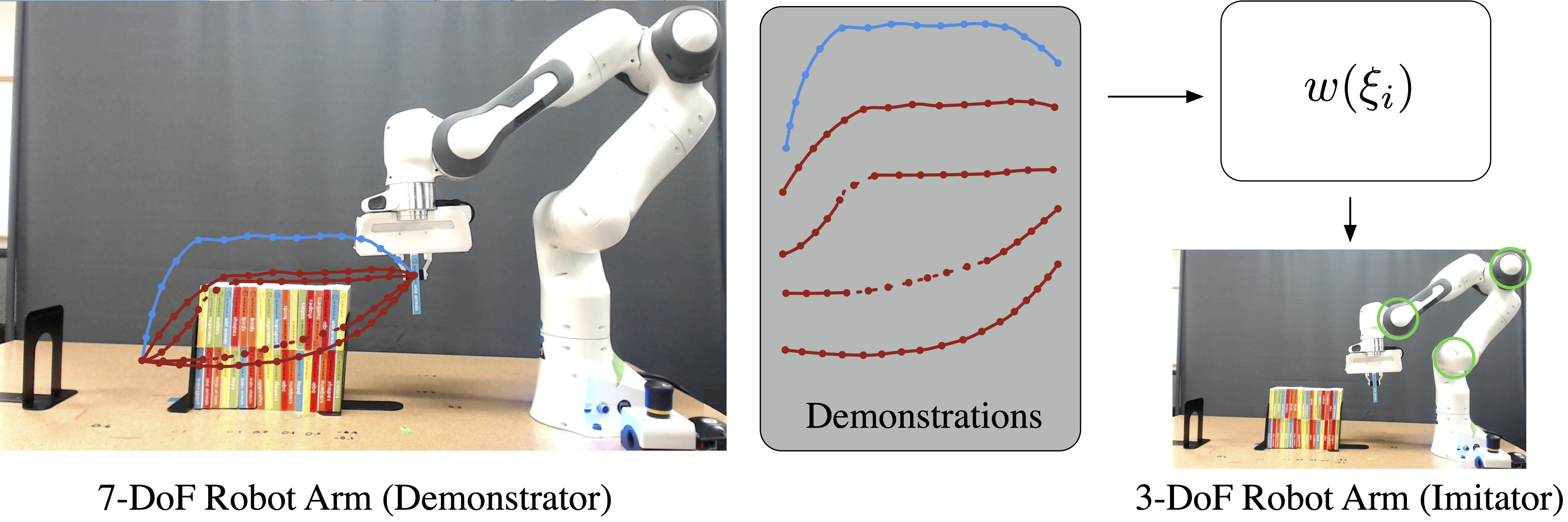

Learning Feasibility to Imitate Demonstrators with Different Dynamics

Authors: Zhangjie Cao, Yilun Hao, Mengxi Li, Dorsa Sadigh

Contact: caozj@cs.stanford.edu

Keywords: imitation learning, learning from agents with different dynamics

We look forward to seeing you at CoRL 2021!